England and Wales High Court (Patents Court) Decisions

Abbott Diabetes Care Inc & Ors v Dexcom Incorporated & Ors [2024] EWHC 36 (Pat) (15 January 2024)

URL: http://www.bailii.org/ew/cases/EWHC/Patents/2024/36.html

Cite as: [2024] EWHC 36 (Pat)

Neutral Citation Number: [2024] EWHC 36 (Pat)

Case No: HP-2021-000025 & HP-2021-000026

IN THE HIGH COURT OF JUSTICE

BUSINESS AND PROPERTY COURTS OF ENGLAND AND WALES

INTELLECTUAL PROPERTY LIST (ChD)

PATENTS COURT

Rolls Building, 7 Fetter Lane,

London, EC4A 1NL

Date: 15th January 2024

Before :

MR JUSTICE MELLOR

- - - - - - - - - - - - - - - - - - - - -

Between :

(1) ABBOTT DIABETES CARE INC.

(2) ABBOTT LABORATORIES VASCULAR ENTERPRISES LP

(3) ABBOTT IRELAND

(4) ABBOTT DIABETES CARE LIMITED

(5) ABBOTT DIAGNOSTICS GMBH

(6) ABBOTT LABORATORIES LIMITED

Claimants in HP-2021-000025

(1) DEXCOM INCORPORATED

(2) DEXCOM INTERNATIONAL LIMITED

(4) DEXCOM (UK) DISTRIBUTION LIMITED

Defendants in HP-2021-000025

- and –

ABBOTT LABORATORIES LIMITED

Claimant/Part 20 Defendant in HP-2021-000026

- and –

DEXCOM INCORPORATED

Defendant/Part 20 Claimant in HP-2021-000026

- and –

DEXCOM INTERNATIONAL LIMITED

Part 20 Claimant in HP-2021-000026

- and –

(1) ABBOTT DIABETES CARE LIMITED

(2) ABBOTT DIABETES CARE INC.

Part 20 Defendants in HP-2021-000026

- - - - - - - - - - - - - - - - - - - - -

Daniel Alexander KC, James Abrahams KC, Michael Conway and Jennifer Dixon (instructed by Taylor Wessing LLP) for the Abbott parties

Benet Brandreth KC and David Ivison (instructed by Bird & Bird LLP) for the Dexcom parties

Hearing dates: 30th November, 2nd, 5-7th, 12th-13th December 2022

- - - - - - - - - - - - - - - - - - - - -

Judgment Approved

Remote hand-down: This judgment will be handed down remotely by circulation to the parties or their representatives by email and release to The National Archives. The deemed date of hand down is 10.30 am on Monday 15th January 2024.

Mr Justice Mellor :

Nature of the "second indication"

Abbott's case on infringement

Dexcom's non-infringement points

VALIDITY: NOVELTY & INVENTIVE STEP

Abbott's arguments against anticipation

THE TECHNICAL CONTEXT OF EP223

Composition of the skilled team

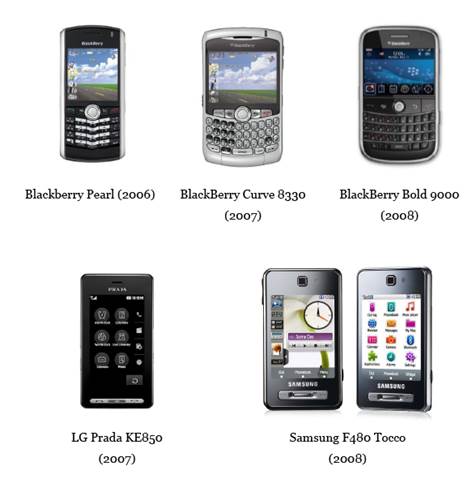

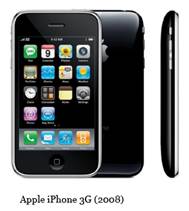

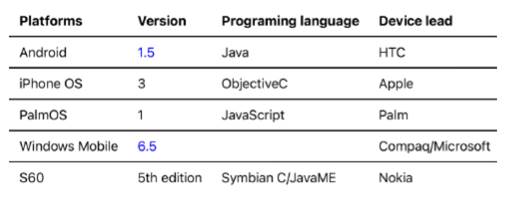

Mobile phones / health applications

Regulatory considerations for medical device software

'Safety critical application'

Integers 1.2(b) - (d) individually

Integers 1.2(b) - (d) compendiously

The finding of no infringement by the Mannheim Court

Safety critical application?

Dexcom's Gillette arguments

The EP159 / 539 Skilled Addressee

Level of consensus on blood glucose levels for hypoglycaemia

The accuracy of CGM devices

CONSTRUCTION - claim 1 of EP159

CONSTRUCTION - claim 1 of EP539

Applicable principles - Inventive Step

Obviousness of a predictive fixed alert

Obviousness of a fixed predictive alert

Other features of the claims

EP159, integer 1(d) - temperature correction

EP539, integer 1(h) - different outputs for indicators

EP539, integers 1(k)-1(l), visual target range

Dexcom's arguments against obviousness

INTRODUCTION

1. This is my judgment from Trial A in these proceedings, the first of three trials concerning various patents owned by Abbott and by Dexcom which have application in the field of Continuous Glucose Monitoring (CGM) devices. Originally there were five patents in issue for this trial, but shortly before trial Abbott withdrew its allegation of infringement of EP625, leaving four patents in issue: two owned by Abbott (EP627 and EP223) and two owned by Dexcom (EP159 and EP539). Some prior art citations were also abandoned in the lead-up to trial, but at trial there remained 9 prior art citations to consider, as well as other validity attacks.

2. CGM devices are used by diabetic patients for the purpose of monitoring their blood glucose, with a view to taking action if their blood glucose becomes too high (hyperglycaemia - requiring an injection of insulin) or too low (hypoglycaemia - requiring consumption of carbohydrate). The patents which are in issue in this trial relate, broadly, to features which involve providing users with information about their blood glucose levels and certain other characteristics. The exception is EP223, which relates to a method of checking that the application that operates on the device is installed and functioning properly.

3. There are only a handful of companies in the world involved in making CGM devices of the kind in issue. Abbott and Dexcom are the leading ones. Each has various CGM devices which it sells or proposes to sell in the UK. In the case of Dexcom, the products in issue are the G6, G7 and Dexcom ONE ("D1") systems. These are similar in several respects. In the case of Abbott, the products in issue are the FreeStyle Libre 2 ("FSL2") and FreeStyle Libre 3 ("FSL3").

4. The basic technology for these products is similar and none of the patents relate to the core functionality: they are largely features or options for the user interfaces. Here I introduce each of the patents in order of their priority dates, none of which are challenged. I analyse the issues on each patent in turn below. In general, I have addressed relevant authorities in the context of the patent where the principal issue arose (e.g. obviousness in relation to EP159), but I have kept the relevant principles in mind throughout.

The Abbott patents

5. EP (UK) 2 146 627 ("EP627") is entitled "Method and Apparatus for Providing Data Processing and Control in Medical Communication System". Its priority date is 14 April 2007 (the "2007 Priority Date").

6. This patent describes various aspects of a CGM but claims only a subset of the functionality disclosed. The inventive concept of the claims is said to be a method of notifying a patient of a glucose condition without thereby interrupting a routine being performed on a user interface, by outputting a first "gentle" or passive indication during the execution of the routine, to allow its continued use, but then also notifying the patient of the condition by providing a second more pronounced notification after the routine has completed.
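The two-stage notification said to form the inventive concept can be pictured as a small state machine. The sketch below is a hypothetical illustration only, not taken from EP627 or from any Abbott or Dexcom product: the class and method names (`TwoStageNotifier`, `start_routine`, `report_condition`, `finish_routine`) are invented, and a "routine" is modelled simply as a flag that is set while the user is working through some user-interface task.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class NotificationLog:
    """Hypothetical record of what the user interface displayed, in order."""
    events: List[Tuple[str, Optional[str]]] = field(default_factory=list)


class TwoStageNotifier:
    """Illustrative sketch: a passive indication while a UI routine is running,
    then a more pronounced notification once that routine has completed."""

    def __init__(self, log: NotificationLog) -> None:
        self.log = log
        self.routine_active = False
        self.pending_condition: Optional[str] = None

    def start_routine(self, name: str) -> None:
        self.routine_active = True
        self.log.events.append(("routine_started", name))

    def report_condition(self, condition: str) -> None:
        if self.routine_active:
            # First indication: passive, so the user can carry on with the routine.
            self.log.events.append(("gentle_indicator", condition))
            self.pending_condition = condition
        else:
            # No routine in progress: notify prominently straight away.
            self.log.events.append(("pronounced_alert", condition))

    def finish_routine(self) -> None:
        self.routine_active = False
        self.log.events.append(("routine_finished", None))
        if self.pending_condition is not None:
            # Second indication: more pronounced, issued after the routine ends.
            self.log.events.append(("pronounced_alert", self.pending_condition))
            self.pending_condition = None


log = NotificationLog()
notifier = TwoStageNotifier(log)
notifier.start_routine("meal entry")
notifier.report_condition("low glucose")
notifier.finish_routine()
print(log.events)
```

In this sketch the low-glucose condition first appears only as a gentle indicator while the hypothetical "meal entry" routine is in progress, and the pronounced alert follows once the routine finishes.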

7. EP627 is contended to be infringed by Dexcom's G6, G7 and D1 systems. Dexcom contends it is invalid for lack of novelty and/or inventive step, insufficiency and lack of patentable subject matter.

8. EP (UK) 2 476 223 ("EP223") is entitled "Methods and Articles of Manufacture for Hosting a Safety Critical Application on an Uncontrolled Data Processing Device" and has a priority date of 8 September 2009 (the "2009 Priority Date"). EP223 is contended to be infringed by the G6, G7 and D1 systems. Dexcom contends it is invalid for lack of novelty and/or inventive step and insufficiency. There is a conditional application to amend claim 1 (opposed only on the ground that it does not cure the alleged invalidity).

The Dexcom patents

9. EP (UK) 2 914 159 ("EP159") is contended to be infringed by Abbott's FSL2 and FSL3 systems. Abbott contends it is invalid for lack of novelty and inventive step, and insufficiency.

10. EP (UK) 3 782 539 ("EP539") is accepted to be invalid as granted. Dexcom applies to amend it: the unconditional amendment is opposed on grounds of clarity and extension of scope. If the amendment is allowed, the scope of EP539 would become materially the same as EP159 and therefore the issues, and the final result, on EP539 are accepted to be the same as for EP159.

11. The priority date of both EP159 and EP539 is 30 October 2012 (the "2012 Priority Date").

The expert witnesses

12. At the first CMC, I was inclined to, and did, limit the number of expert witnesses for which the parties requested permission, but at a later hearing I was persuaded to give permission for up to three experts on each side. At trial, Abbott called a single expert, Dr Cesar Palerm, and Dexcom called two, Professor Nick Oliver and Dr Vlad Stirbu.

13. Dr Palerm is an engineer who has spent the bulk of his career in the field of glucose monitoring and control. From 2004-2007, he conducted research at the University of California Santa Barbara (UCSB) including investigations using several different CGM devices including Abbott's FreeStyle Navigator device and Dexcom's STS-7. From 2007-2016, Dr Palerm was a Principal Scientist and later a Senior Principal Scientist at Medtronic Diabetes where he was involved in the design and product development of Medtronic's CGM devices and insulin pumps, such as the MiniMed 640G and MiniMed 670G (the first hybrid closed-loop infusion pump). From 2016-2021 Dr Palerm worked at Bigfoot Biomedical, a start-up developing a closed-loop system to regulate glucose for people with type 1 diabetes.

14. Prof Oliver is both a clinician and an engineer. He is Wynn Professor of Human Metabolism at Imperial College, London and a consultant physician in Diabetes and Endocrinology at Imperial College Healthcare NHS Trust. He has expertise in CGM design, implementation and engineering. As he explained in his first report:

"I have been a clinician specialising in diabetes for almost 20 years. During that time, and mostly in the last 16 years, I have combined my role as a clinician with my role and work within the Department of Biomedical Engineering and the Centre for Bio-inspired Technology at Imperial College London focussing on, among other things, the development and design of CGM systems."

15. Dr Stirbu is a software engineer and consultant. For much of his career he was employed by Nokia. That work included development of remote patient monitoring by smart phone app.

16. As will appear below, in relation to all three experts, I have accepted some of their evidence and rejected other parts. Various criticisms were levelled at them and their evidence but these are best considered in context, below. Generally, I found all of their evidence informative and helpful and I am grateful to them for their assistance.

Common general knowledge

17. Although the parties prepared a single CGK Statement which identified what was agreed and disputed at all three priority dates, I prefer to separate out the CGK relating to EP223 (covering 'Mobile phone technology in 2009' and 'Regulatory considerations for medical device software') because it is self-contained and relevant only to that Patent. It is set out in the section addressing EP223 below.

18. As for the other Patents, the CGK falls into two broad categories: first, knowledge of CGM devices which existed in 2007 (for EP627) and in 2012 (for EP159 and EP539); second, knowledge of diabetes and its treatment in 2012 because it is of primary relevance to EP159 and EP539 (though much of it is background to the other Patents, even with their earlier Priority Dates). Neither expert identified any specific matters of CGK relevant to EP627 beyond knowledge of the CGM devices that existed in 2007.

19. Accordingly, what follows covers:

i) Knowledge of diabetes and its treatment/management.

ii) Measuring blood glucose.

iii) Hyper- and hypoglycaemia.

iv) Glycaemic variability in patients.

v) CGM devices and their key features, including alarms and alerts.

vi) The CGK as to specific CGM devices marketed from 1999 through to 2012.

20. As the parties emphasised, this is a summary of the CGK and more detail was supplied in the experts' reports.

Diabetes

Background to Diabetes

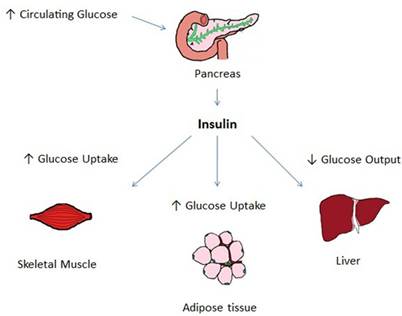

22. Glucose is the primary source of energy for the body under normal conditions. In people without diabetes, blood glucose levels are typically maintained within relatively narrow limits by the balance between glucose entry into the circulation (from stored glycogen in the liver and from intestinal absorption), and glucose uptake from the circulation into the peripheral tissues to be utilised as fuel.

23. Hormones, including insulin, maintain the balance between glucose entry into the circulation and glucose uptake by tissues, thereby ensuring glucose homeostasis. Insulin lowers blood glucose levels by: (i) suppressing glucose output from the liver, by inhibiting both the breakdown of stored glycogen in the liver into glucose (known as glycogenolysis) and the formation of new glucose (known as gluconeogenesis); and (ii) increasing glucose uptake into peripheral tissues, by increasing the number of glucose transporter proteins at the cell surface. In people without diabetes, insulin is secreted at a low basal level throughout the day, with increased levels following mealtimes.

24. Diabetes (also called diabetes mellitus) is one of the most prevalent diseases in the world. It is a collection of chronic metabolic disorders, the characteristic feature of which is high blood glucose concentrations, resulting from an absolute or relative deficiency of insulin. People with diabetes have increased morbidity, increased risk of depression, and reduced life expectancy.

25. In 1999, the World Health Organisation ("WHO") published a new classification framework for diabetes which encompassed clinical stages and aetiological types of diabetes. It divided diabetes into the following types:

i) Type 1 diabetes (previously known as insulin dependent diabetes);

ii) Type 2 diabetes (previously known as non-insulin dependent diabetes);

iii) Other specific types, including inter alia genetic defects of insulin action, genetic defects of beta cell function, diseases of the exocrine pancreas, endocrinopathies, and drug- or chemical-induced diabetes; and

iv) Gestational diabetes.

26. In type 1 diabetes, an absolute deficiency of insulin occurs due to autoimmune destruction of the insulin-secreting beta cells of the Islets of Langerhans in the pancreas. Type 1 diabetes is generally of rapid onset, typically in childhood or adolescence (although it can occur at any age). At present, there is no cure for type 1 diabetes. As such, people with type 1 diabetes need not only to learn how to self-manage their condition but also to maintain the motivation to do so throughout their lifetime. The management of type 1 diabetes relies on exogenous insulin delivery (to mimic physiological insulin production as far as possible) and adherence to a group of self-care behaviours, such as estimating dietary carbohydrate and exercise. Insulin may be delivered by a regimen of multiple daily injections ("MDI") or insulin pump therapy (also known as "continuous subcutaneous insulin infusion"). The principles of self-management of type 1 diabetes are the same regardless of whether MDI or insulin pump therapy is relied on. The majority of people with diabetes rely on MDI therapy. The primary objective of type 1 diabetes self-management is to prevent immediate adverse glycaemic events; in effect constantly walking the tightrope between high glucose (hyperglycaemia) and low glucose (hypoglycaemia), as well as minimising the risk of long-term diabetes complications.

27. In type 2 diabetes, circulating insulin cannot be utilised due to peripheral resistance to insulin at the receptor level. An insulin secretion deficit often occurs in parallel with insulin resistance. Type 2 diabetes occurs largely in adults and older people, caused by a combination of genetic and environmental factors. In the early stages of type 2 diabetes, people do not experience the extreme glycaemic variability seen in type 1 diabetes. This means that individuals can often manage their diabetes through lifestyle modification (such as weight loss through diet and exercise) as well as oral agents (tablets to either increase secretion of insulin or increase sensitivity to insulin). However, type 2 diabetes is a progressive disease. Over time, glycaemic variability increases, and the majority of people living with type 2 diabetes require exogenous insulin to manage their blood glucose levels after around 10 years, as in type 1 diabetes.

Blood Glucose Values

28. Blood glucose values are measured in units of either mg/dL or mmol/L. The unit used usually depends on the country; the preferred unit in the UK is mmol/L, whereas in the US it is mg/dL.
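Because UK and US sources quote thresholds in different units, a conversion helper is useful when comparing figures such as the 70 mg/dL and 55 mg/dL values mentioned in this section. The sketch below assumes the standard factor derived from the molar mass of glucose (about 180.16 g/mol), so that 1 mmol/L is about 18.016 mg/dL; the function and constant names are illustrative.

```python
# Molar mass of glucose (C6H12O6) is about 180.16 g/mol, so
# 1 mmol/L = 180.16 mg/L = 18.016 mg/dL.
MG_PER_DL_PER_MMOL_PER_L = 18.016


def mgdl_to_mmoll(mg_dl: float) -> float:
    """Convert a glucose value from mg/dL (US convention) to mmol/L (UK convention)."""
    return mg_dl / MG_PER_DL_PER_MMOL_PER_L


def mmoll_to_mgdl(mmol_l: float) -> float:
    """Convert a glucose value from mmol/L to mg/dL."""
    return mmol_l * MG_PER_DL_PER_MMOL_PER_L


for mg_dl in (40, 55, 70):
    print(f"{mg_dl} mg/dL = {mgdl_to_mmoll(mg_dl):.1f} mmol/L")
```

Running it prints 2.2, 3.1 and 3.9 mmol/L for 40, 55 and 70 mg/dL respectively. Many clinical references round the factor to 18, which gives the same one-decimal results for these values.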

29. Blood glucose values may be specified as being "arterialised" (also known as "arterialised venous"), "capillary" or "venous", reflecting the type of blood vessel from which the sample was taken (arteries, capillaries, or veins respectively). At any point in time, an arterialised measurement will tend to be higher than a capillary measurement, which in turn will tend to be higher than a venous measurement. Further variability in blood glucose measurements can be introduced depending on whether glucose is measured in the whole blood sample (which is typical of at-home testing) or in the plasma component of blood only (which is typical of research settings).

30. Blood glucose values can also be estimated by measuring glucose levels in the interstitial fluid, although this is only an estimate, because of a concentration gradient between blood and interstitial fluid and a lag time in equilibration. The physiological lag time will vary depending on factors such as sample site and the rate of change of glucose levels. Measurement delays are also common in CGM sensors. They result from filtering and processing delays in the electronic components, as well as from the time required for glucose to diffuse across the outer membranes of the sensor and reach equilibrium at the enzyme layer, which can vary over time (e.g., due to biofouling or scar-tissue encapsulation of the sensor).

Hyperglycaemia

31. Hyperglycaemia, or high blood sugar, is the characteristic feature of diabetes. At the 2012 Priority Date there was no agreed definition of hyperglycaemia, but it was typically considered by most clinicians to mean a blood glucose concentration from somewhere between 10 and 15 mmol/L upwards. In the short term, symptoms of hyperglycaemia may include feeling thirsty, passing urine frequently (including overnight), feeling weak or tired, and blurred vision. If untreated, hyperglycaemia can progress to diabetic ketoacidosis ("DKA"), a life-threatening condition requiring hospital treatment. The mainstay of treatment of DKA is insulin with supplementary fluid and supportive treatments to address electrolyte abnormalities and the underlying cause of the DKA (which may include infection as well as insulin omission). DKA predominantly occurs in type 1 diabetes where there is an absolute insulin deficiency. In developed countries, DKA has a low overall mortality rate, reported as ranging from below 1% to around 5% (but a much higher mortality rate in the elderly). In the medium to long term, hyperglycaemia can lead to complications of the vascular system.

Hypoglycaemia

32. Hypoglycaemia, or low blood sugar, is a serious side effect of insulin therapy. Hypoglycaemia is the leading cause of death in people with diabetes under the age of 40. It is also associated with "dead in bed" syndrome, where an individual is found dead in an undisturbed bed. For these reasons, and others, fear of hypoglycaemia is a widespread phenomenon in people with diabetes.

33. At the 2012 Priority Date, there was no universally agreed definition for all purposes of the threshold for hypoglycaemia below which a patient may be said definitively to be hypoglycaemic, although guidance and recommendations had been issued by bodies such as the European Medicines Agency (EMA) and the American Diabetes Association (ADA). However, it was universally recognised that a level of (at least) <3.0 mmol/L should be avoided and may be dangerous to at least some patients.

34. In 2002, the EMA published a "Note for guidance on clinical investigation of medicinal products in the treatment of diabetes mellitus". This suggested using a value of <3.0 mmol/L to define hypoglycaemia when assessing hypoglycaemic risk of new treatments for regulatory purposes.

35. In 2005, the ADA sought to define hypoglycaemia as an event accompanied by a measured plasma glucose concentration of ≤3.9 mmol/L (the "ADA Report"). The ADA Report comments that this threshold is the threshold at which glucose counter-regulation (i.e., the body's response to prevent or rapidly correct hypoglycaemia) is activated in people without diabetes, and that exposure to antecedent plasma glucose concentrations of ≤3.9 mmol/L leads to subsequent hypoglycaemic unawareness. In January 2009, a Position Statement of the American Diabetes Association recommended treating below a threshold of 70 mg/dL (3.9 mmol/L).

36. Around 2007, Diabetes UK, a charity aimed at patient safety, introduced the phrase "Four is the floor" suggesting people with diabetes should not let their blood glucose drop below 4 mmol/L [1].

37. The second edition of the Oxford Textbook of Endocrinology and Diabetes (published in July 2011) contains a chapter on hypoglycaemia. It recorded that, despite its importance, "definitions of hypoglycaemia remain controversial".

38. It then summarised the position on the biochemical threshold as follows:

"Hypoglycaemia can also be defined biochemically when the blood glucose falls below a certain level. Frequency will then be dependent upon frequency of monitoring. There is no universal threshold level for defining biochemical hypoglycaemia. The use of capillary, venous, venous arterialized (sampling from a distal venous canula in a heated hand) or arterial samples will introduce variability between studies, as will the subsequent measurement of either whole blood or plasma values. Experimental studies show that evidence of cortical dysfunction can be detected in people irrespective of their recent glycaemic experience, at a plasma glucose concentration of 3 mmol/l or less (3); the original reports of the ability to induce counterregulatory deficits and loss of subjective awareness of hypoglycaemia used a 2-h antecedent exposure to 3 mmol/l (4), and early reports of the restoration of subjective awareness to the hypoglycaemia unaware by strict hypoglycaemia avoidance used avoidance of exposure to 3 mmol/l or less (5). Such data make restricting definition of hypoglycaemia to a glucose concentration of 3mmol/l or less very robust. The European Medicines Agency uses this level to define significant hypoglycaemia in assessing new medications, although pointing out that for this purpose 'the definition needs to be more rigorous than in clinical practice' (6). At the other extreme, the American Diabetes Association suggests anything less than 3.9 mmol/l be considered hypoglycaemia (2), on the basis that in health evidence of counterregulation (reduced endogenous insulin and increased glucagon secretion) is detectable at this level and artificial exposure to 3.9 mmol/l induces defects in some other aspects of the counterregulatory response to immediate subsequent hypoglycaemia in health. 
However, as neither insulin nor glucagon responses are useful defences against hypoglycaemia in the insulin-deficient patient with diabetes and subjective awareness to subsequent hypoglycaemia is not affected in the experimental setting just described (7), this definition, which includes glucose concentrations often seen in health, is considered by many authorities to be over rigorous, although most would acknowledge that the lower limit to goals for adjusting diabetes therapy should be at least this high. A clinically useful compromise has been to define hypoglycaemia as a plasma glucose concentration of less than 3.5 mmol/l, and certainly, in practice, this is the concentration at which patients must take corrective action. It forms a useful cut-off for defining frequency."

39. In May 2012, the EMA published its "Guideline on clinical investigation of medicinal products in the treatment or prevention of diabetes mellitus". It recommended that the definitions of hypoglycaemia should be standardised and stated that "[o]ne recommended approach for such standardization is to use classifications of severity from well-accepted sources, such as the ADA for adults and ISPAD for children" including categorising symptomatic and asymptomatic hypoglycaemia as measured plasma glucose concentrations less than or equal to 3.9 mmol/L.
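The practical effect of the lack of a single agreed threshold can be seen by testing one reading against each of the cut-offs summarised above. The sketch below is illustrative only: it treats each source as a single hard cut-off, which simplifies their context (for example, the EMA figure was for regulatory assessment, and Diabetes UK's "Four is the floor" is advice on a level to stay above rather than a formal definition). The dictionary keys and function name are invented.

```python
import operator
from typing import Callable, Dict, Tuple

# Simplified cut-offs in mmol/L, drawn from the sources summarised above.
# Treating each as one hard cut-off is an illustrative simplification.
HYPO_CUTOFFS: Dict[str, Tuple[Callable[[float, float], bool], float]] = {
    "EMA 2002 note (< 3.0)": (operator.lt, 3.0),
    "Oxford Textbook compromise (< 3.5)": (operator.lt, 3.5),
    "ADA 2005 report (<= 3.9)": (operator.le, 3.9),
    "Diabetes UK 'Four is the floor' (< 4.0)": (operator.lt, 4.0),
}


def classify_reading(glucose_mmol_l: float) -> Dict[str, bool]:
    """For each cut-off, report whether the reading falls on the low side."""
    return {
        name: compare(glucose_mmol_l, limit)
        for name, (compare, limit) in HYPO_CUTOFFS.items()
    }


for name, is_low in classify_reading(3.7).items():
    print(f"{name}: {'low' if is_low else 'not low'}")
```

A reading of 3.7 mmol/L is on the low side of the ADA and Diabetes UK figures but not of the EMA or Oxford Textbook figures, which illustrates why the choice of definition matters.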

Glycaemic variability

40. The management of diabetes has to be tailored to the individual with diabetes in order for it to be effective. The term "glycaemic variability" or "glucose variability" refers to the swings in blood glucose seen in people with diabetes. Each swing up or down is referred to as a glucose "excursion". These terms have been used in the field since the 1970s, such as in the metric MAGE (Mean Amplitude of Glucose Excursions). Living with diabetes means living in a state of constant flux in glucose levels requiring frequent treatment decisions (also known as "interventions"), such as injecting insulin or eating carbohydrates.

41. At a high level, swings in glucose levels can be attributed to a mismatch between the available glucose and circulating insulin. If there is more insulin than glucose, blood glucose levels will fall. The magnitude of glucose-insulin mismatch will determine the rate, and magnitude, of fall. Conversely, if there is more glucose than insulin, blood glucose levels will increase. The magnitude of the mismatch will determine the rate, and magnitude, of increase. Glucose levels are also affected by numerous other factors such as the dawn effect, caffeine, emotional responses, meals, snacking, exercise, alcohol, nocturnal hypoglycaemia, menstrual cycles, menopause, weight, fasting, illness and temperature.

42. There is also significant interpersonal variation in how blood glucose levels respond to different factors: for example, if two individuals with diabetes were to eat the exact same meal, or carry out the exact same exercise routine, it would affect their glucose levels in different ways. The result is that there is no "one size fits all" approach to diabetes management.

43. Individuals also have different preferences as to whether they want to maintain their blood glucose levels at the higher end of the normal range or the lower end of the normal range.

44. People also advocated for different hypoglycaemia thresholds for children. The developing brain is more susceptible to neuroglycopenia and children may be unable to express feelings or independently self-manage impending hypoglycaemia, meaning that higher hypoglycaemia thresholds for children may be more appropriate.

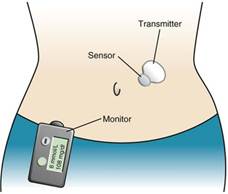

CGM devices

46. CGM devices provide continuous information about changes in glucose concentration in the interstitial fluid, which is present in subcutaneous tissue. CGM devices consist of a sensor, which generally remains at least partially in the subcutaneous tissue (typically of the abdomen), and a monitor, which connects to the sensor either wirelessly, via a transmitter attached to the sensor, or by a cable. The monitor may be a dedicated device, be integrated into an insulin pump, or run on another device (e.g., a computer).

CGM implementation

47. At the 2007 and 2012 Priority Dates, CGM implementation fell into one of two subsets:

i) "Real-time" CGM devices, where the user would receive real-time glucose readings on their CGM monitor.

ii) "Retrospective" CGM devices (also known as "blinded" CGM devices), where the user would have to plug their monitor into a computer and download their glucose readings from the last few days (which was typically done at the clinic). The clinician and person with diabetes would then retrospectively analyse the data. This could assist with identifying patterns in glucose levels, which could aid positive changes to treatment regimes. For example, if glucose levels were always high after lunch, the person with diabetes could modify their diet to eat less carbohydrate at lunch.
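The kind of retrospective pattern-spotting described for blinded CGM data (for example, noticing that glucose is always high after lunch) can be sketched as averaging downloaded readings by hour of day. This is a hypothetical illustration, not any device's download software: the function names, the 10.0 mmol/L "high" threshold and the sample readings are all invented for the example.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def mean_by_hour(readings: Iterable[Tuple[datetime, float]]) -> Dict[int, float]:
    """Average the downloaded readings (mmol/L) for each hour of the day."""
    buckets: Dict[int, List[float]] = defaultdict(list)
    for timestamp, glucose in readings:
        buckets[timestamp.hour].append(glucose)
    return {hour: mean(values) for hour, values in sorted(buckets.items())}


def consistently_high_hours(readings, high_mmol_l: float = 10.0) -> List[int]:
    """Hours of the day whose average exceeds a hypothetical high threshold."""
    return [hour for hour, avg in mean_by_hour(readings).items() if avg > high_mmol_l]


# Invented readings over three days: mid-morning and shortly after lunch.
sample = [
    (datetime(2012, 5, 1, 10, 0), 6.0),
    (datetime(2012, 5, 1, 13, 30), 12.0),
    (datetime(2012, 5, 2, 10, 15), 7.0),
    (datetime(2012, 5, 2, 13, 45), 11.5),
    (datetime(2012, 5, 3, 10, 5), 6.5),
    (datetime(2012, 5, 3, 13, 20), 13.1),
]
print(consistently_high_hours(sample))
```

With these invented readings only the 13:00 hour is flagged, the sort of post-lunch pattern that the clinician and patient might then address through diet.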

Specific CGM devices

48. A summary of the CGM devices available prior to the 2007 Priority Date and the 2012 Priority Date is included in the section below.

49. All of the CGM devices available prior to the 2012 Priority Date were indicated for use as an adjunctive device only. As such, any glucose readings needed to be confirmed with a fingerprick test before an intervention was taken.

CGM glucose alarms or alerts

50. Indicators of hypoglycaemia and hyperglycaemia include notifications provided to the user, typically known as alerts and alarms. Companies use these terms differently; for example, Dexcom CGMs tend to use the term "alarm" for a more serious condition and "alert" for a less serious condition, whereas Abbott CGMs tend to use the term "alarm" for automatic notifications that call the attention of the user to a serious condition and "alert" for notifications that will only be seen by a user actively checking their CGM display. Alarms and alerts, in general, were features of some of the earliest CGM devices and were well established as an important part of CGM devices in 2012.

53. Glucose alerts were of three general types: (i) detection of current glucose crossing a threshold (often referred to as "current" or "threshold" alarms); (ii) using recent glucose data with one or more algorithms to predict glucose crossing a threshold within a time period or prediction horizon (often referred to as "predictive" or "projected" alarms); and (iii) a "rate of change alert", which warns that the user's glucose levels are rising very rapidly or falling very rapidly, regardless of the absolute value.
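The first and third alert types can each be reduced to a simple comparison. The sketch below is a hypothetical illustration, not any manufacturer's logic: the function names are invented, and the 3.9 mmol/L threshold and 0.17 mmol/L-per-minute rate limit are assumed example settings.

```python
def threshold_alert(current_mmol_l: float, low_threshold_mmol_l: float) -> bool:
    """Type (i): fires when the latest reading is below the low threshold."""
    return current_mmol_l < low_threshold_mmol_l


def rate_of_change_alert(previous_mmol_l: float, current_mmol_l: float,
                         minutes_between: float, max_rate_per_min: float) -> bool:
    """Type (iii): fires when glucose is rising or falling faster than
    max_rate_per_min (mmol/L per minute), whatever the absolute level."""
    if minutes_between <= 0:
        raise ValueError("readings must be separated in time")
    rate = (current_mmol_l - previous_mmol_l) / minutes_between
    return abs(rate) > max_rate_per_min


# Hypothetical settings: low threshold 3.9 mmol/L, rate limit 0.17 mmol/L/min.
print(threshold_alert(3.6, 3.9))                    # reading below the threshold
print(rate_of_change_alert(8.0, 6.5, 5.0, 0.17))    # falling fast from a normal level
print(rate_of_change_alert(12.0, 13.5, 5.0, 0.17))  # rising fast while already high
```

The rate-of-change check uses only the difference between readings, which is why it can fire at a glucose level that would not trigger a threshold alert.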

54. Predictive alarms are generally based on time series forecasting, which is the use of mathematical models and algorithms to forecast, predict or extrapolate future values based on past data. The aim of predictive alarms was to give the user greater warning time of a hypoglycaemic or hyperglycaemic event, thereby allowing enough time for the patient to take the necessary precautions to mitigate and possibly avoid the event (e.g., consuming carbohydrates or injecting insulin). Predictive alarms were less accurate than threshold alarms. Shorter prediction horizons were known to increase accuracy, while longer horizons allowed more time for the necessary intervention.
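As one simple instance of time series forecasting, a predictive low alarm can fit a straight line to recent readings and extrapolate it over the prediction horizon. The algorithms used in the devices discussed here are not described in this section; ordinary least-squares linear extrapolation is swapped in purely as an illustration, and the function names, readings and threshold are hypothetical.

```python
from typing import Sequence


def linear_forecast(times_min: Sequence[float], values: Sequence[float],
                    horizon_min: float) -> float:
    """Fit a least-squares straight line to recent readings and extrapolate it
    horizon_min minutes beyond the last reading."""
    n = len(times_min)
    if n < 2 or n != len(values):
        raise ValueError("need at least two paired readings")
    t_mean = sum(times_min) / n
    v_mean = sum(values) / n
    sxx = sum((t - t_mean) ** 2 for t in times_min)
    if sxx == 0:
        raise ValueError("readings must span more than one time point")
    sxy = sum((t - t_mean) * (v - v_mean) for t, v in zip(times_min, values))
    slope = sxy / sxx
    intercept = v_mean - slope * t_mean
    return intercept + slope * (times_min[-1] + horizon_min)


def predictive_low_alert(times_min: Sequence[float], values: Sequence[float],
                         low_threshold_mmol_l: float, horizon_min: float) -> bool:
    """Fire if the projected value at the horizon is below the low threshold."""
    return linear_forecast(times_min, values, horizon_min) < low_threshold_mmol_l


times = [0, 5, 10, 15]          # minutes since the first reading
values = [6.0, 5.6, 5.2, 4.8]   # invented, steadily falling readings (mmol/L)
for horizon in (10, 20):
    projected = linear_forecast(times, values, horizon)
    print(horizon, round(projected, 2), predictive_low_alert(times, values, 3.9, horizon))
```

With these invented readings, a 10-minute horizon projects about 4.0 mmol/L (no alert against a 3.9 mmol/L threshold) while a 20-minute horizon projects about 3.2 mmol/L (alert). This mirrors the trade-off described above: the longer horizon warns earlier, but only by extrapolating further beyond the data.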

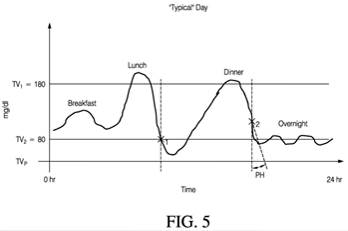

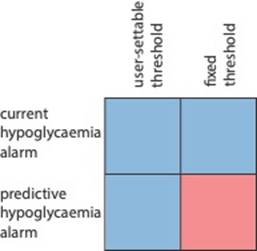

55. All commercially available CGM devices as of 2012 had some form of hypoglycaemia alarm. Several had multiple alarms, and combinations of current glucose alarms and predictive glucose alarms were also well known and already in use in CGM devices in 2012. The features of the CGM devices that were in use or had been released before 2012 are summarised in the next section. By way of an overview, the different types and combinations of glucose alarms in these devices are summarised in the table below, although the alarms in the devices differed in other aspects that are not captured in the table, such as how the alarms were displayed. The table refers to low glucose alarms only, although most devices also had high glucose alarms. The thresholds for the current alarms in these devices (i.e., the glucose value that triggers the alarm) could either be changed by the user (i.e. "user settable" alarms) or not (for example, factory determined, i.e. "fixed" alarms). The system set the threshold for the predictive alarms to be the same as for the current glucose alarm.

| Devices | Year of release | Current alarms: Y/N | Current alarms: fixed or user settable | Predictive alarm: Y/N | Predictive alarm: fixed or user settable |
|---|---|---|---|---|---|
| CGK relevant to 2007 Priority Date: | | | | | |
| Medtronic MiniMed CGMS | 1999 | N | N/A | N | N/A |
| GlucoWatch Biographer | 2002 | Y | User settable; Fixed at 40 mg/dL | Y | Same threshold as set by the user for the user-settable current alarm |
| Medtronic MiniMed Guardian RT | 2005 | Y | User settable | N | N/A |
| Medtronic Paradigm Real-Time | 2006 | Y | User settable | N | N/A |
| DexCom STS | 2006 | Y | (1) User settable (2) Fixed at 55 mg/dL | N | N/A |
| Medtronic MiniMed Guardian Real-Time | 2006 | Y | User settable | Y | Same threshold as set by the user for the current alarm |
| Additional CGK relevant to 2012 Priority Date: | | | | | |
| Abbott Freestyle Navigator | 2007 | Y | User settable | Y | Same threshold as set by the user for the current alarm |
| DexCom STS-7 | 2007 | Y | (1) User settable (2) Fixed at 55 mg/dL | N | N/A |
| Medtronic iPro | 2007 | N | N/A | N | N/A |
| DexCom Seven Plus | 2009 | Y | (1) User settable (2) Fixed at 55 mg/dL | N | N/A |
| Medtronic iPro2 | 2011 | N | N/A | N | N/A |
| Medtronic Paradigm Real-Time Revel | 2010 | Y | User settable | Y | Same threshold as set by the user for the current alarm |
| Abbott Freestyle Navigator 2 | 2011 | Y | User settable | Y | Same threshold as set by the user for the current alarm |
| Dexcom G4 Platinum | 2012 | Y | (1) User settable (2) Fixed at 55 mg/dL | N | N/A |

56. Of the main devices on the market as of 2012, two distinct approaches were taken to the design of the overall alarm system: one exemplified by the Medtronic and Abbott devices, the other by the Dexcom devices.

57. In the case of the Medtronic and Abbott devices, there was a single low current alarm for hypoglycaemia (with one aspect being predictive). This threshold was user-settable. In these devices, once the user acknowledged the initial alarm, if the condition was still present some time later (e.g., 20 minutes), the alarm would trigger again and would continue doing so until the glucose level rose above the threshold. These two systems also included predictive alarms that used a threshold set by the system to be the same as the user-settable current glucose threshold, and that allowed the user to choose to receive an alarm if they might reach this threshold in the near future.

58. In the case of Dexcom, there were two low alarms (Dexcom differentiated between these by calling one an alert and the other an alarm). The first (the alert) was a threshold alert for hypoglycaemia with a user-settable threshold. In the Dexcom devices, once this alert was acknowledged, it would not trigger again even if the hypoglycaemic condition persisted (i.e., glucose levels had to rise above the threshold to reset this alert). The design approach taken by Dexcom was to incorporate a second alarm with a threshold fixed at 55 mg/dL (3.1 mmol/L). This second threshold alarm thus complemented the user-settable alert. This fixed-threshold alarm was also not optional.
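The two design approaches differ mainly in what happens after the user acknowledges a low alarm. The sketch below models each as a small state machine, assuming timestamps in minutes, a 20-minute re-alarm interval for the Medtronic/Abbott style (the example figure given for those devices), and that a reading at or below a threshold counts as low. The class and function names are hypothetical, and the re-triggering behaviour of the fixed 55 mg/dL alarm is not described, so it is modelled simply as a level check. This illustrates the behaviour described, not any manufacturer's firmware.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RepeatingLowAlarm:
    """Medtronic/Abbott style: re-fires while glucose stays low."""
    threshold: float
    snooze_min: float = 20.0                 # assumed re-alarm interval
    acknowledged_at: Optional[float] = None

    def should_alarm(self, now_min: float, glucose: float) -> bool:
        if glucose > self.threshold:         # condition cleared: reset state
            self.acknowledged_at = None
            return False
        if self.acknowledged_at is None:     # low and not yet acknowledged
            return True
        return now_min - self.acknowledged_at >= self.snooze_min

    def acknowledge(self, now_min: float) -> None:
        self.acknowledged_at = now_min


@dataclass
class OneShotLowAlert:
    """Dexcom style alert: silent after acknowledgement until glucose recovers."""
    threshold: float
    acknowledged: bool = False

    def should_alert(self, glucose: float) -> bool:
        if glucose > self.threshold:         # rising above threshold re-arms it
            self.acknowledged = False
            return False
        return not self.acknowledged

    def acknowledge(self) -> None:
        self.acknowledged = True


FIXED_LOW_MG_DL = 55.0                       # fixed, non-optional Dexcom alarm


def fixed_low_alarm(glucose: float) -> bool:
    """Level check against the fixed threshold (re-trigger rules not modelled)."""
    return glucose <= FIXED_LOW_MG_DL
```

Acknowledging a `RepeatingLowAlarm` only silences it for the snooze interval, whereas acknowledging a `OneShotLowAlert` silences it until glucose has risen above the threshold, which is why the fixed-threshold alarm is needed as a backstop in the second design.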

Display of glucose data

59. In addition to providing alarms, some CGMs could display a user's current glucose level (or most recent measured value) and historic glucose levels within a given window (typically selectable, between 1 and 24 hours). The Dexcom STS was the first CGM device to have a display able to show a graphical representation of historic glucose levels. This information was helpful for a patient to visualise trends in their glucose levels, the amount of time spent outside of target levels, and when they might be experiencing unwanted highs or lows (e.g., after meals or during exercise). In this regard, it was also known to show a user a target range alongside their actual glucose data, to allow easy visualisation of when they were (or had been) above or below a defined range. This was a feature of several CGM devices as of 2012.

Temperature correction

60. At the 2007 and 2012 Priority Dates it was known that temperature affects sensor readings and that incorporating a temperature sensor and a method to correct for it would improve the accuracy of glucose measurements in a CGM device to a degree. One reason for this is that temperature affects the rate of the enzyme-catalysed reaction, which generates the electrical signals that are ultimately converted into glucose readings. At higher temperatures, the rate of this reaction increases, so for the same amount of glucose in the reaction medium, the magnitude of the electrical current generated increases, and therefore the measured glucose value will appear to be higher. The opposite effect may occur at lower temperatures. Another reason is that temperature can affect the rate of glucose diffusion across the sensor membrane.
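The direction of the temperature effect described above can be illustrated with a simple correction that scales the measured current back to a reference temperature. The sketch assumes, purely for illustration, that sensor current rises linearly by a fixed fraction per degree Celsius; the 37 °C reference and 3 % per °C coefficient are placeholder values, not figures from the evidence, and real devices may use quite different temperature models.

```python
def temperature_corrected_current(raw_current_na: float,
                                  temp_c: float,
                                  ref_temp_c: float = 37.0,
                                  coeff_per_c: float = 0.03) -> float:
    """Scale a sensor current to the value expected at ref_temp_c.

    Hypothetical linear model: current = true_current * (1 + coeff * dT).
    Above the reference temperature the raw current reads high, so it is
    scaled down; below it, the raw current reads low and is scaled up.
    """
    factor = 1.0 + coeff_per_c * (temp_c - ref_temp_c)
    if factor <= 0:
        raise ValueError("temperature outside the model's valid range")
    return raw_current_na / factor
```

Under these assumptions, a reading of 10.6 nA at 39 °C corrects to about 10.0 nA, reflecting the point that a warmer sensor overstates glucose for the same underlying concentration.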

61. At both the 2007 and 2012 Priority Dates, there were significant concerns over the accuracy of CGM devices.

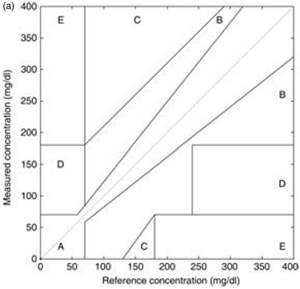

62. In contrast to self-monitoring of blood glucose (SMBG) meters, there is no applicable ISO Standard for assessing the accuracy of CGM devices. Instead, a CGM device's accuracy can be assessed using a variety of metrics, each with its own advantages and disadvantages. Two of the most frequently used metrics for assessing the accuracy of CGM devices are the Clarke Error Grid and mean absolute relative difference (MARD).

63. The Clarke Error Grid, developed in the 1980s, was (and still is) frequently used in assessing CGM accuracy (as well as the accuracy of other glucose sensors such as fingerprick tests). The Clarke Error Grid plots a monitor's readings on the y axis against reference glucose values on the x axis, assigning a clinical risk to any glucose sensor error. Results fall into one of five risk zones:

Zone A - clinically accurate;

Zone B - benign (i.e., clinically acceptable);

Zone C - overcorrect (i.e., results that may prompt overcorrection leading to hypo- or hyperglycaemia);

Zone D - failure to detect (i.e., a failure to detect hypo- or hyper-glycaemia);

Zone E - erroneous.

64. Reporting the percentage of values falling in Zones A + B of the Clarke Error Grid (representing clinically acceptable results) is a commonly used way to assess CGM accuracy; a higher percentage corresponds to higher accuracy.

65. Another way to assess accuracy was (and still is) to calculate the MARD value for a CGM device. MARD is the mean of the absolute differences between the sensor glucose values and the corresponding reference values (usually from venous blood samples), each divided by the reference value and expressed as a percentage. A MARD value indicates how closely the CGM's estimated blood glucose readings match the user's actual glucose values. A lower MARD value corresponds to higher accuracy.
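Both headline accuracy figures are simple to compute once paired sensor and reference readings, and their Clarke zones, are available. The sketch below uses the reference value as the MARD denominator, which is the usual convention. Assigning points to Clarke zones requires the grid's boundary definitions, which are not reproduced here, so the zone labels are taken as given input. Function names are hypothetical.

```python
from typing import Sequence


def mard_percent(sensor_mg_dl: Sequence[float],
                 reference_mg_dl: Sequence[float]) -> float:
    """Mean absolute relative difference, as a percentage (lower is better)."""
    if not sensor_mg_dl or len(sensor_mg_dl) != len(reference_mg_dl):
        raise ValueError("need equal-length, non-empty paired readings")
    total = sum(abs(s - r) / r for s, r in zip(sensor_mg_dl, reference_mg_dl))
    return 100.0 * total / len(sensor_mg_dl)


def percent_in_zones_a_b(zones: Sequence[str]) -> float:
    """Share of Clarke Error Grid points in zones A or B (higher is better)."""
    if not zones:
        raise ValueError("no points supplied")
    return 100.0 * sum(z in ("A", "B") for z in zones) / len(zones)
```

For example, sensor readings of 110 and 90 mg/dL against two reference values of 100 mg/dL each differ by 10 %, giving a MARD of 10 %; zone labels A, A, B and D give 75 % in zones A + B.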

66. The MARD value depends mostly on the particular CGM device being used, in particular its software algorithms; however, it is also influenced by how the device is used, for example how it is calibrated, as well as by interference at the sensor.

Summary of CGM Devices

MiniMed CGMS (1999)

Figure A1.1: Photograph of the MiniMed CGMS

67. The MiniMed CGMS was the first CGM device to come to market, having been approved by the FDA in 1999. The MiniMed CGMS was a retrospective device that recorded glucose data for the physician to review at a later time. The user would wear the CGM device for a set period, typically for 3 days (as this was the lifetime of the sensor), and would then bring the monitor into clinic to be plugged into a computer. The clinician would then download the user's glucose data for retrospective analysis. It did not provide real-time glucose measurements to the user nor have any alerts related to glucose levels. An updated version of the device (CGMS Gold) was introduced in 2003. A further version called Guardian CGMS was released in 2004, which included high and low current glucose alarms.

GlucoWatch Biographer (2002)

Figure A1.2: Photographs of the GlucoWatch

69. Figure A1.2 shows the display of this device. The user could scroll through the recent measurements, and an up or down arrow presented the current trend. The glucose measurements were updated every ten minutes.

Medtronic MiniMed Guardian RT (2005)

Figure A1.3: Photographs of the Medtronic MiniMed Guardian RT

72. The MiniMed Guardian RT was the first real-time CGM system from Medtronic that presented current glucose values to the user on its display. It was released in around 2005.

73. The Guardian RT was semi-wired as the sensor had a short connecting wire to a transmitter.

Dexcom STS (2006)

Figure A1.4: Photograph of Dexcom STS

80. The STS was the first CGM device from Dexcom. It was launched in around 2006. The STS was a real-time CGM device and provided the user with glucose measurements every 5 minutes for up to 3 days (72 hours).

81. As seen in Figure A1.4, the STS showed the current glucose value together with a graph and a text indication (under the current glucose value) of the time range covered by the graph. The dotted lines shown represent the thresholds of the high and low glucose alerts.

86. The STS device also had a visual notification ("---" alongside the Y antenna) for when "noisy" glucose readings were received. Users receiving this notification were advised to check the placement of the STS Sensor to make sure it was still adhered to their skin properly and that nothing was rubbing the STS Sensor (e.g. clothing, seat belts). The "noisy" glucose readings could also occur due to rapid increases or decreases in the user's glucose levels.

87. The STS also had alerts notifying the user how much time remained until the 3-day sensor session was complete. The Expiration Screen appeared 6 hours and 2 hours before the 3-day session ended. At 30 minutes and at 0 hours, the STS Receiver displayed the Expiration Screen and also vibrated. The user could clear all of these screens by pressing any button on the STS Receiver. In the period prior to sensor expiration, glucose readings were still taken as normal. Upon expiration, the 0 hour Screen appeared and no more glucose readings were taken. The user then knew to remove their STS Sensor.
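The expiry warnings described above amount to a fixed schedule counted back from the end of a 72-hour session. The sketch below encodes that schedule, reading the description as: Expiration Screens at 6 hours, 2 hours, 30 minutes and 0 hours before the end, with vibration only at the last two. That reading, and the function names, are assumptions for illustration.

```python
from typing import List, Tuple

# Expiration Screen points, in hours before the end of the sensor session.
WARNING_POINTS_H = (6.0, 2.0, 0.5, 0.0)
# Points at which the receiver also vibrated (assumed: 30 minutes and 0 hours).
VIBRATING_POINTS_H = frozenset({0.5, 0.0})


def expiration_schedule(session_h: float = 72.0) -> List[Tuple[float, bool]]:
    """Return (hours since sensor insertion, vibrates?) for each Expiration Screen."""
    return [(session_h - before, before in VIBRATING_POINTS_H)
            for before in WARNING_POINTS_H]


def readings_enabled(elapsed_h: float, session_h: float = 72.0) -> bool:
    """Glucose readings continue as normal until the session expires."""
    return elapsed_h < session_h
```

For the 3-day STS session this gives screens at 66, 70, 71.5 and 72 hours after insertion, with glucose readings continuing up to, but not beyond, the 72-hour point.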

88. At a general level, the principle of warning users of expiry is part of any medical device design; it is considered a safety feature. The medical device should have an indication of remaining life span in order to be safe, useable and effective, whilst not interrupting its main function (which in the case of a CGM device is the measuring of the glucose level). For example, in the context of CGM devices, if a user receives an alert in the evening that their sensor will expire in 6 hours, it allows them to replace it early to ensure they have a functioning sensor overnight.

Medtronic MiniMed Guardian Real-Time (2006)

Figure A1.5: Photographs of Medtronic MiniMed Guardian Real-Time

89. The Guardian Real-Time was launched in around 2006. The Guardian Real-Time provided the user with glucose values in real time.

90. As seen in Figure A1.5, the Guardian Real-Time presented the historical glucose values in a graph (with the user being able to select between time windows of 3, 6, 12 and 24 hours). The display showed the current time and glucose value and trend arrows. The trend arrow in this device included one or two arrows to not only indicate the direction of change but the magnitude of the change (one arrow if the magnitude of the rate was 1.0–2.0 mg/dL/min; two arrows if the magnitude was 2.0 mg/dL/min or greater; no arrow if the magnitude of the rate was less than 1.0 mg/dL/min).

94. The Guardian Real-Time device provided the following glucose alerts:

iii) Falling and rising rate alerts.

102. The Guardian Real-Time also had a Sensor Error alert, which was triggered when the sensor signal was either too high or too low. If this alert was repeated, the user was instructed to "make sure that the sensor is inserted properly, that the sensor and transmitter are connected properly, and that there is no moisture at the connection".

103. Like all early CGM devices, the Guardian Real-Time had regulatory approval for use as an adjunctive device only. Therefore, the real time data that it was providing on glucose values or alerts could not be relied upon to make an intervention decision. Instead, the data was intended to provide an indication of when a fingerprick test may be required. This message was in the Guardian Real-Time user guide, which also strongly recommended that the user work closely with their healthcare professional when using the device.

Dexcom STS-7 (2007)

Figure A1.6: Photograph of Dexcom STS-7

104. The STS-7 was the second generation of Dexcom's CGM. It was launched in around 2007. The STS-7 expanded the use of a single sensor from three days to seven (thus the seven in the name of the device).

107. Other system alerts and notifications were also unchanged from the behaviour of the STS device.

Abbott FreeStyle Navigator (2007)

Figure A1.7: Photograph of the Abbott FreeStyle Navigator

108. The Navigator was the first CGM system from Abbott Laboratories. It was released in around 2007.

109. As seen in Figure A1.7, the main screen of the Navigator did not show a graphical representation of past glucose values. However, the user could display such a graphical representation through the report function. The Navigator included trend arrows, which indicated direction and magnitude: an arrow at a 45-degree angle (up or down) represented a magnitude between 1.0 and 2.0 mg/dL/min, an arrow straight up or down a magnitude greater than 2.0 mg/dL/min, and a flat arrow pointing right a magnitude less than 1.0 mg/dL/min. When viewing their glucose level history through the reports function, the user could view a line graph of their glucose data alongside a shaded region representing a target range. This range was separate from the high and low glucose alarms discussed below.
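The Guardian Real-Time (paragraph 90) and the Navigator (paragraph 109) used the same rate bands but displayed them differently: the Guardian varied the number of arrows, the Navigator the arrow's angle. The sketch below maps a rate of change to each display. Treating exactly 1.0 mg/dL/min as the lower edge of the middle band, and exactly 2.0 mg/dL/min as two arrows on the Guardian but a 45-degree arrow on the Navigator, follows the wording of those paragraphs where it is explicit and is an assumption where it is not. Function names are hypothetical.

```python
def guardian_arrows(rate_mg_dl_per_min: float) -> str:
    """Guardian Real-Time style: the number of arrows shows the magnitude."""
    magnitude = abs(rate_mg_dl_per_min)
    if magnitude < 1.0:
        return ""                                   # no arrow
    arrow = "↑" if rate_mg_dl_per_min > 0 else "↓"
    return arrow if magnitude < 2.0 else arrow * 2  # two arrows at 2.0 or more


def navigator_arrow(rate_mg_dl_per_min: float) -> str:
    """Navigator style: the angle of a single arrow shows the magnitude."""
    magnitude = abs(rate_mg_dl_per_min)
    rising = rate_mg_dl_per_min > 0
    if magnitude < 1.0:
        return "→"                                  # flat arrow pointing right
    if magnitude <= 2.0:                            # boundary at 2.0 assumed
        return "↗" if rising else "↘"               # 45-degree arrow
    return "↑" if rising else "↓"                   # straight up or down
```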

Medtronic iPro (2007)

114. Medtronic released the iPro in around 2007. The iPro was the successor to Medtronic's CGMS Gold. It was another retrospective CGM device aimed at clinicians, but unlike the CGMS Gold it now had a wireless receiver. As the iPro was a retrospective CGM device, it did not have any alerts relating to glucose levels.

Dexcom Seven Plus (2009)

Figure A1.8: Photograph of Dexcom Seven Plus

115. This was an updated version of the STS-7; it received CE mark approval in 2009. As shown in Figure A1.8 above, it had a similar form factor to the STS-7 and STS and very similar features.

116. As seen from Figure A1.8, the Dexcom Seven Plus (the "Seven Plus") UI displayed a graphical representation of a user's historical glucose data and a numerical indication of the user's most recent 5-minute reading. Like the STS-7, it showed the current time, the number of hours of glucose data displayed, a graph of recent glucose levels, and dotted lines showing the thresholds for the low and high glucose alerts. New in the Seven Plus were glucose trend arrows, which indicated the direction in which the user's glucose levels were heading.

Abbott Freestyle Navigator II (2011)

120. The FreeStyle Navigator II was released in around 2011. It was an updated version of the original FreeStyle Navigator. However, there were no changes to the glucose alerts described above between the original FreeStyle Navigator and the updated Navigator II.

Medtronic Paradigm Real-Time Revel

Figure A1.9: Photograph of Medtronic Paradigm Real-Time Revel

Medtronic iPro2 (2011)

Figure A1.10: Photograph of the Medtronic iPro2

122. Medtronic released the iPro2 in around 2011. Like the iPro it was a retrospective CGM device so did not have any alerts relating to glucose levels.

Dexcom G4

Figure A1.11: Photograph of Dexcom G4 Platinum

EP627

124. Almost all the issues which I have to decide on EP627 depend upon the correct interpretation of claim 1. Dexcom submitted that the claim is expressed in deliberately broad terms and accused Abbott and their expert, Dr Palerm, of reading additional limitations into the claim which are not reflected in the language used. By contrast, Abbott submitted that Dexcom's approach ignored the context and purpose of the invention.

125. EP627 is entitled 'Method and apparatus for providing data processing and control in medical communication system'.

126. As will be seen below, the invention claimed in EP627 is described in a few short paragraphs of the specification and by reference to one of the figures. Since most of the issues in this action turn on the correct interpretation of claim 1, it is necessary to review some other parts of the specification to see if they provide any assistance.

127. The specification starts with three paragraphs under the heading 'Background'. These paragraphs start by talking about an analyte sensor but immediately address blood glucose as the analyte. After describing aspects of the sensor, which are not pertinent, the specification addresses the portion of the sensor which communicates readings to the transmitter unit which sends readings to a receiver/monitor unit where data processing takes place:

[0002] ....

The transmitter unit is configured to transmit the analyte levels detected by the sensor over a wireless communication link such as an RF (radio frequency) communication link to a receiver/monitor unit. The receiver/monitor unit performs data analysis, among others on the received analyte levels to generate information pertaining to the monitored analyte levels. To provide flexibility in analyte sensor manufacturing and/or design, among others, tolerance of a larger range of the analyte sensor sensitivities for processing by the transmitter unit is desirable.

[0003] The state of the art is exemplified by the document US 5 791 344 A, which discloses a method comprising executing a predetermined routine associated with an operation of an analyte monitoring device; detecting a predefined alarm condition associated with the analyte monitoring device; outputting a first indication associated with the detected predefined alarm condition during the execution of the predetermined routine; and outputting a second indication associated with the detected predefined alarm condition.

128. By reference to that description of the state of the art, the invention is summarized in [0004]. It can be seen that [0003] describes the pre-characterising portion of claim 1 and [0004] describes the characterising portion:

[0004] Relative thereto the invention is defined in claim 1. In it the second indication is output after the execution of the predetermined routine; the predetermined routine is executed without interruption during the outputting of the first indication; and the first indication includes a temporary indicator and, further, the second indication includes a predetermined alarm associated with the detected predefined alarm condition; and the predetermined routine includes one or more processes that interface with a user interface of the analyte monitoring device.

129. Neither side made reference to or suggested that the acknowledged prior art US344A assisted on the issues of construction which arise.

130. Although the Detailed Description starts with the general statement that the patent provides a method and apparatus for providing data processing and control for use in a medical telemetry system, it immediately takes as its paradigm a CGM system and indeed it states in terms:

'The subject invention is further described primarily with respect to a glucose monitoring system for convenience and such description is in no way intended to limit the scope of the invention. It is to be understood that the analyte monitoring system may be configured to monitor a variety of analytes'.

131. A variety of analytes are set out in [0009], making it very clear that the invention applies well beyond CGM systems.

132. The specification then describes various aspects of a CGM system but none of the detail matters for present purposes.

The Invention

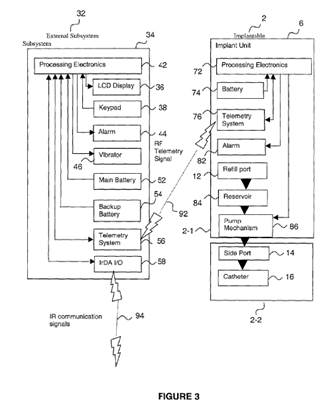

133. The invention is described at [0077] - [0081], by reference to figure 10 (shown below):

134. In summary, as Abbott submitted:

i) In this figure, the predetermined routine begins at box 1010 and continues until it is terminated in box 1040. Paragraph [0080] explains that the predetermined routine may include performing a finger stick blood glucose test "or any other processes that interface with the user interface". Several examples are given, including review of historical data such as glucose data, alarms and events, and visual displays of data. So these are routines which involve the user interacting with the user interface in some way and which are associated with analyte monitoring, but which are not the monitoring itself.

ii) During execution of the predetermined routine, if an alarm condition is detected (box 1020), the user is notified by a "first indication" that alerts the user "substantially in real time" to the detected alarm condition, but "does not interrupt or otherwise disrupt the execution of the predetermined routine" (box 1030, [0078] - [0079]).

iii) Upon the user terminating the predetermined routine, a second indication associated with the alarm condition is output or displayed (box 1040, [0078]).

iv) The purpose of the process is that a detected alarm condition can be notified to the user immediately, but without immediately interrupting or disrupting an ongoing routine or process ([0081]).
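The flow of figure 10 as summarised above can be sketched as follows. The routine is modelled, as an assumption, as a sequence of steps, with the alarm check made between steps; each step runs to completion, a detected condition produces only a temporary indicator while the routine is running, and the full alarm is raised once the routine has finished. The `ReceiverUI` class and function names are hypothetical. This is an illustration of the described process only; it is not Abbott's implementation and is not intended to reflect either party's construction of claim 1.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, List, Optional


@dataclass
class ReceiverUI:
    """Hypothetical receiver user interface that records what it displays."""
    log: List[str] = field(default_factory=list)

    def show_temporary_indicator(self, condition: str) -> None:
        self.log.append(f"first indication (temporary): {condition}")

    def clear_temporary_indicator(self) -> None:
        self.log.append("temporary indicator cleared")

    def raise_alarm(self, condition: str) -> None:
        self.log.append(f"second indication (alarm): {condition}")


def run_routine_with_deferred_alarms(
        ui: ReceiverUI,
        routine_steps: Iterable[Callable[[], None]],
        detect_alarm: Callable[[], Optional[str]]) -> List[str]:
    """Run every routine step uninterrupted, deferring full alarms to the end."""
    pending: List[str] = []
    for step in routine_steps:          # box 1010: the predetermined routine
        step()                          # each step runs to completion
        condition = detect_alarm()      # box 1020: check for an alarm condition
        if condition is not None and condition not in pending:
            pending.append(condition)
            ui.show_temporary_indicator(condition)   # box 1030
    if pending:                         # routine finished: escalate (box 1040)
        ui.clear_temporary_indicator()
        for condition in pending:
            ui.raise_alarm(condition)
    return pending
```

If a low-glucose condition is detected partway through a three-step data review, all three steps still complete; the user sees a temporary indicator during the review and the full alarm only afterwards.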

135. Additional information regarding the first and second indications is provided at [0137] - [0152].

'[0137] A method in accordance with still yet another embodiment may include executing a predetermined routine associated with an operation of an analyte monitoring device, detecting a predefined alarm condition associated with the analyte monitoring device, outputting a first indication associated with the detected predefined alarm condition during the execution of the predetermined routine, outputting a second indication associated with the detected predefined alarm condition after the execution of the predetermined routine, where the predetermined routine is executed without interruption during the outputting of the first indication.

[0138] In one aspect, the predetermined routine may include one or more processes associated with performing a blood glucose assay, one or more configuration settings, analyte related data review or analysis, data communication routine, calibration routine, or reviewing a higher priority alarm condition notification compared to the predetermined routine, or any other process or routine that requires the user interface.

[0139] Moreover, in one aspect, the first indication may include one or more of a visual, audible, or vibratory indicators.

[0140] Further, the second indication may include one or more of a visual, audible, or vibratory indicators.

[0141] In one aspect, the first indication may include a temporary indicator, and further, and the second indication may include a predetermined alarm associated with detected predefined alarm condition.

[0142] In still another aspect, the first indication may be active during the execution of the predetermined routine, and may be inactive at the end of the predetermined routine.

[0143] Further, the second indication in a further aspect may be active at the end of the predetermined routine.

[0144] Moreover, each of the first indication and the second indication may include one or more of a visual text notification alert, a backlight indicator, a graphical notification, an audible notification, or a vibratory notification.

[0145] The predetermined routine may be executed to completion without interruption.

[0146] An apparatus in accordance with still another embodiment may include a user interface, and a data processing unit operatively coupled to the user interface, the data processing unit configured to execute a predetermined routine associated with an operation of an analyte monitoring device, detect a predefined alarm condition associated with the analyte monitoring device, output on the user interface a first indication associated with the detected predefined alarm condition during the execution of the predetermined routine, and output on the user interface a second indication associated with the detected predefined alarm condition after the execution of the predetermined routine, wherein the predetermined routine is executed without interruption during the outputting of the first indication.

[0147] The predetermined routine may include one or more processes associated with performing a blood glucose assay, one or more configuration settings, analyte related data review or analysis, data communication routine, calibration routine, or reviewing a higher priority alarm condition notification compared to the predetermined routine.

[0148] The first indication or the second indication or both, in one aspect may include one or more of a visual, audible, or vibratory indicators output on the user interface.

[0149] In addition, the first indication may include a temporary indicator, and further, wherein the second indication includes a predetermined alarm associated with detected predefined alarm condition.

[0150] Also, the first indication may be output on the user interface during the execution of the predetermined routine, and is not output on the user interface at or prior to the end of the predetermined routine.

[0151] Additionally, the second indication may be active at the end of the predetermined routine.

[0152] In another aspect, each of the first indication and the second indication may include a respective one or more of a visual text notification alert, a backlight indicator, a graphical notification, an audible notification, or a vibratory notification, configured to output on the user interface.'

136. These paragraphs specify that the first and second indications may include one or more of a visual, audible or vibratory indicator ([0139] - [0140]), and that the first indication may include a temporary indicator ([0141]).

137. The specification therefore specifically contemplates that there will be an unobtrusive indication, which does not interrupt or otherwise disrupt the user's use of the application, and which may include a temporary indicator.

138. As will be apparent from the prior art Dexcom relies on in this case, this concept of holding back the "full" alert in favour of an unobtrusive indication, so as not to interrupt the user, and only providing the normal alert after they had completed the routine they were engaged in, was not a feature of any CGM devices. That is unsurprising, since notifying the user of a glucose condition through glucose alerts was one of the key functions of CGM devices.

THE SKILLED TEAM & CGK

139. There does not appear to be any dispute as to the core attributes of the engineer or engineers who both experts regard as the relevant skilled person/skilled team. They would be an engineer (such as a systems engineer, biomedical engineer or electrical engineer, but not necessarily from any specific engineering discipline) specialising in the design of medical devices. The engineer would have experience in CGM design, albeit their level of experience in CGM design is unlikely to affect any of the issues on EP627.

140. The only CGK of specific relevance to EP627 that either expert has identified is the skilled team's knowledge of existing CGM devices as of 2007 (see the summary of CGM devices at the Annex to the CGK Statement). This would include familiarity with their UIs and with provision/display of alarms, alerts and notifications.

CLAIMS / CONSTRUCTION

141. The principal claims in issue are claims 1 and 5 (method claims). Claims 8 and 12 are the equivalent apparatus claims, respectively, and it is not necessary to address them separately. There is no need to rehearse the applicable principles which are well-known. No issues of equivalents were raised so I must undertake a purposive construction of the claims: see e.g. Saab Seaeye Ltd v Atlas Electronik GmbH [2017] EWCA Civ 2175 per Floyd LJ at [18] & [19] and Icescape Ltd v Ice-World International BV [2018] EWCA Civ 2219 at [60].

142. By the time of closings, Abbott submitted that two issues of construction arose concerning 'predetermined routine' and (essentially) the nature of the second indication. However, as I have already indicated, most of the issues on EP627 turn on issues of construction. In the breakdown of claim 1 below, I have underlined those expressions which I consider to be in issue:

Claim 1

| Integer | Text |
|---|---|
| 1 | A method comprising |
| 1.1 | executing, on a receiver unit (104, 106), a predetermined routine associated with an operation of an analyte monitoring device (1010); |
| 1.2 | detecting a predefined alarm condition associated with the analyte monitoring device (1020); |
| 1.3 | outputting, to a user interface of the receiver unit, a first indication associated with the detected predefined alarm condition during the execution of the predetermined routine (1030); and |
| 1.4 | outputting, to the user interface of the receiver unit, a second indication associated with the detected predefined alarm condition (1040); wherein |
| 1.5 | the second indication is output after the completion of the execution of the predetermined routine; |
| 1.6 | the predetermined routine is executed without interruption during the outputting of the first indication; |
| 1.7 | the first indication includes a temporary indicator and, |
| 1.8 | further, the second indication includes a predetermined alarm associated with the detected predefined alarm condition; and |
| 1.9 | the predetermined routine includes one or more processes that interface with the user interface of the receiver unit. |

143. Before I come to the issues, I found it helpful to set out the integers of the claim by reference, first, to the stated characteristics of the predetermined routine (PDR) and, second, the chronological sequence of events, and I use this structure below:

i) The PDR is executed on the receiver unit, which has a user interface.

ii) It must be associated with an operation of an analyte monitoring device.

iii) It must include one or more processes that interface with the user interface of the receiver unit.

iv) During the execution of the PDR, a first indication is output to the user interface associated with the detected predefined alarm condition (which alarm condition is associated with the analyte monitoring device).

v) The PDR must execute without interruption during the output of the first indication.

vi) The first indication (which (as above) is associated with the detected predefined alarm condition) includes a temporary indicator.

vii) After the completion of the execution of the PDR, the second indication is output, the second indication being associated with the detected predefined alarm condition.

viii) The second indication includes a predetermined alarm associated with the detected predefined alarm condition.

Interpretation of Claim 1

144. Abbott submitted that claim 1 is a claim to the method described above by reference to figure 10. In summary:

i) The method involves the user using their CGM receiver unit in some interactive way (a "predetermined routine" - e.g. retrieving historical CGM data – integers 1.1, 1.9).

ii) If an alarm condition is met (integer 1.2), the user is alerted in a manner which does not interrupt the predetermined routine (the "first indication" – integers 1.3, 1.6, 1.7).

iii) Then, once the user has completed what they were doing, a second indication will be output on the receiver unit - e.g. one which the user cannot ignore (the "second indication" - integers 1.4, 1.5, 1.8).

145. Abbott relied on this sentence in Dr Palerm's first report: "The aim is to ensure that the user is only momentarily disturbed while executing the routine, but once it is over, a more prominent "second indication" will appear."

146. Abbott also stressed that both the "predetermined routine" and "the alarm condition" must be "associated with ... the analyte monitoring device". This is true. They also submitted that the invention is not about (i) suppressing alarms while the user is using some different application unrelated to the analyte monitoring device or (ii) suppressing alarms that are not related to the analyte monitoring device.

147. Abbott's submissions shared some ground with the way Dr Palerm characterised the inventive concept of EP627, and he made it clear that his discussion of EP627 was predicated on this understanding throughout:

In summary, the inventive concept claimed in EP627 is a method of notifying a patient of a glucose condition without interrupting a routine being performed on a user interface by outputting a first passive indication during the execution of the routine, but then also reminding the patient of the condition by providing a second more permanent indication after the routine has completed.

148. Dexcom attacked this, contending it was riddled with errors:

i) The claim is not limited to notifying a patient - it simply speaks of a "user", which Dr Palerm agreed could be a parent or doctor of a patient using a CGM. In fact, the claim is not even expressly limited to medical analytes.

ii) The claim is not limited to an alarm which concerns a glucose condition (by which Dr Palerm appeared to mean hyper- or hypoglycaemia) - it refers to "an alarm condition associated with an analyte monitoring device", which:

a) is not limited to a device monitoring glucose but includes any analyte monitoring device, as Dr Palerm accepted; and

b) is not limited to alarms associated with an analyte condition (i.e. significantly high or low levels of the analyte) but includes alarms such as system errors and battery level alarms, as Dr Palerm also accepted.

iii) There is no requirement that the "predetermined routine" is performed on a user interface - the claim simply requires that the predetermined routine is "associated with an operation of an analyte monitoring device" and includes "one or more processes that interface with the user interface of the receiver unit".

iv) The degree of permanence of the second indication is not specified - it is simply necessary that it "includes a predetermined alarm associated with the detected predefined alarm condition".

149. Moving to the individual terms used in claim 1, Abbott addressed two: the PDR and 'the nature of the second indication'. I will set out Abbott's submissions and, briefly, Dexcom's response.

"Predetermined routine"

150. The additional limitations which Abbott suggested should be interpreted into this expression appear from their submissions, which were as follows:

i) Paragraph [0080] describes predetermined routines in which the user is interacting with the user interface, such as "the configuration of device settings" and "review of historical data such as glucose data". Such processes are described as "processes that interface with the user interface". Integer 1.9 limits the claim to that sort of predetermined routine. So the "predetermined routine" of claim 1 is one involving the user interacting with the user interface.

ii) The word used in the claim is a "routine"; and integer 1.9 says that that involves one or more "processes". So that excludes something as simple as the standard screen display without any interaction from the user. (It is notable that Prof Oliver ignores integer 1.9 in his attempt to understand this part of the claim.)

iii) This is also clear as a matter of purposive construction. The whole point of the claim is that the routine is not immediately "interrupted or otherwise disrupted" while it is being executed, but instead an unobtrusive indication, including a temporary indication, is given to the user; but once complete, a second indication (an "alarm") is given to the user. This only makes sense if the routine is one that involves the user; and thus it is desirable to alert them (integer 1.3), but not interrupt what they are doing (integer 1.6); and also to set off an "alarm" once they have completed the routine.

iv) The patent gives specific examples of routines which fall within that definition.

151. By contrast, Dexcom submitted that 'a predetermined routine' is deliberately broad, but acknowledged the express limitations in the claim (which I set out below).

Nature of the "second indication"

152. Abbott submitted as follows:

i) EP627 does not specify the nature of the second indication beyond defining it as a "predetermined alarm associated with the detected alarm condition". Nevertheless, the experts agreed as to its nature and purpose. It is to provide a more prominent or permanent notification, in contrast to the initial less obtrusive (and in particular non-disruptive) notification of the first indication. Or in Prof Oliver's terms, "the user is then notified of the event again (in a more permanent manner)".

ii) There was some attempt by Dexcom in Dr Palerm's cross-examination to suggest that the purpose of the second notification is no more than an additional "reminder". Dr Palerm does describe the second notification as having that role (and it can do so in some cases) but that is neither a limitation in the claim nor of the inventive concept as it has been understood by both experts. The point is that the second notification is more "prominent" or "permanent" in the sense that it is one that the user is required to deal with. Having had the gentle "shoulder tap" of the first indication, the user is then presented with the second notification which requires some action or acknowledgement of the alarm condition that has been detected.

Analysis

153. In reality, there is a single point underpinning the proper interpretation of claim 1. For the most part, Abbott are trying to interpret claim 1 as restrictively as possible and by reference to the embodiment described in the Patent. By contrast, Dexcom point to the obvious generality of the wording used in claim 1, but Dexcom also seek to impose limits on the claim where it suits them.

154. As Floyd J. (as he then was) explained in Nokia v IPCom [2009] EWHC 3482 (Pat) at [41]:

"Where a patentee has used general language in a claim, but has described the invention by reference to a specific embodiment, it is not normally legitimate to write limitations into the claim corresponding to details of the specific embodiment, if the patentee has chosen not to do so. The specific embodiments are merely examples of what is claimed as the invention, and are often expressly, although superfluously, stated not to be 'limiting'. There is no general principle which requires the court to assume that the patentee intended to claim the most sophisticated embodiment of the invention. The skilled person understands that, in the claim, the patentee is stating the limits of the monopoly which it claims, not seeking to describe every detail of the manifold ways in which the invention may be put into effect."

155. With that principle in mind, I turn to consider the interpretation of various terms used in claim 1. Although they are set out in a certain order below, I emphasise that the process of interpretation is an iterative one, so that all considerations are taken into account. It is convenient to start with the elements which are somewhat in the background of the claim. I remind myself this is a method claim.

'analyte monitoring device'

156. It is clear that the monitoring device is not limited to monitoring glucose. Any analyte can be monitored.

157. There is an ability to detect a predefined alarm condition associated with the analyte monitoring device.

158. The first and the second indications are both associated with that (the same) predefined alarm condition.

'receiver unit'

159. The implication in the claim is that the analyte monitoring device communicates in some way (e.g. transmits data, including data indicating alarm conditions associated with the analyte monitoring device) to the receiver unit. Nothing turns on this, but it helps to make sense of the claim. The receiver unit has a user interface and some sort of computer processing ability, so that the PDR is able to execute on the receiver unit.

160. The issue over the 'receiver unit' arises because of one of Dexcom's non-infringement arguments, but the argument confuses the distinction between the receiver unit (which has some computer processing ability) and routines which run on it.

'user interface'

161. The user interface has the ability to provide the first and second indications which are output to it. In terms of the provision of the first and second indications, the user interface could be very simple - for example the first and second indications could be indicated to the user via individual lights. It could (but need not) be a screen.

162. However, it is important to note that there are one or more processes in the PDR which interface with the user interface, the implication being that there is some output from the PDR on the user interface. So the user interface has (at least) a dual role.

163. It seems to me that apart from having these functional requirements, the receiver unit and its characteristics (including its user interface) are expressed in deliberately general terms.

'predetermined routine'

164. The claim requires the PDR:

i) to execute on the receiver unit.

ii) to be associated with an operation of an analyte monitoring device.

iii) to include one or more processes that interface with the user interface of the receiver unit.

iv) to execute without interruption during the output of the first indication.

v) to complete execution (before the output of the second indication).

165. At this point, I simply note that in the infringement case the second indication is caused by the user ending the routine by tapping the notification.

'first indication'

166. The claim requires the first indication:

i) to be associated with the detected predefined alarm condition (which alarm condition is associated with the analyte monitoring device).

ii) to be output to the user interface during the execution of the PDR.

iii) to include a temporary indicator.

167. It is implicit that the output of the first indication must end before the second indication is output to the user interface.

'second indication'

168. The claim requires the second indication:

i) to be associated with the detected predefined alarm condition (which alarm condition is associated with the analyte monitoring device).

ii) to be output to the user interface after the completion of the execution of the PDR.

iii) to include a predetermined alarm associated with the detected predefined alarm condition.

169. The issue over the second indication stems from one of Dexcom's non-infringement arguments. Dexcom submit that the second indication must be a reminder, but this is not a requirement of the claim.

Conclusions

170. I realise that in these paragraphs I have done little more than restate the express wording from the claim, but this exercise of setting out what is required of each element in the claim serves to highlight where the parties (but mostly Abbott) seek illegitimately to write in additional limitations.

171. Whilst it is essential to take account of all the requirements of claim 1, it is also important not to imply requirements which are not truly required by the claim. By way of an example, the second indication must include a predetermined alarm associated with the detected predefined alarm condition, but that does not mean that the first indication cannot include a predetermined alarm. Equally, the fact that the first indication must include a temporary indicator (and must end before the second indication is output) does not mean that the second indication cannot include a temporary indicator.

Claim 5

172. I will mention claim 5 very briefly, although Abbott submitted that, as the arguments have developed, it does not appear to play any role in them. Claim 5 is for:

5      The method of claim 1 wherein

5.1    the second indication is active at the end of the predetermined routine.

173. Abbott submitted that this requires that the second indication (the "alarm" of integers 1.4, 1.5, 1.8) is output more or less straight away after completion of the predetermined routine. I agree that claim 5 seeks to specify a sense of immediacy of the second indication appearing at the end of the PDR.

INFRINGEMENT

174. Three Dexcom devices are contended to infringe EP627: the G6, G7 and D1. The infringement case was explained by reference to the G6 and its PPD, and it appeared that no separate issues were raised by the other devices.

Abbott's case on infringement

175. As Abbott submitted, the G6 comprises a sensor and transmitter unit which attaches to the body to sense glucose levels, and a display device which receives information from the sensor/transmitter and displays it to the user. The display device can be an iPhone or an Android phone on which a dedicated app (the "G6 App") has been installed.

176. The example used by Dr Palerm in his Annex 2 to illustrate the infringement was as follows.

i) The infringement involves the G6 App running on the device in the background while the user is using another related app, such as the Dexcom Clarity App, to look at glucose reports. "[R]eview of historical data such as glucose data" is an example of a predetermined routine of integers 1.1 and 1.9 given at [0080].