England and Wales High Court (Patents Court) Decisions

URL: http://www.bailii.org/ew/cases/EWHC/Patents/2021/3121.html

Cite as: [2021] EWHC 3121 (Pat)

Neutral Citation Number: [2021] EWHC 3121 (Pat)

Case No. HP-2019-000006

IN THE HIGH COURT OF JUSTICE

BUSINESS AND PROPERTY COURTS OF ENGLAND AND WALES

INTELLECTUAL PROPERTY LIST (ChD)

PATENTS COURT

Rolls Building

Fetter Lane

London, EC4A 1NL

November 25th 2021

Before :

MR JUSTICE MEADE

- - - - - - - - - - - - - - - - - - - - -

Between :

(1) OPTIS CELLULAR TECHNOLOGY LLC (2) OPTIS WIRELESS TECHNOLOGY LLC (3) UNWIRED PLANET INTERNATIONAL LIMITED

Claimants

- and -

(1) APPLE RETAIL UK LIMITED (2) APPLE DISTRIBUTION INTERNATIONAL LIMITED (3) APPLE INC

Defendants

James Abrahams QC, James Whyte and Michael Conway (instructed by EIP Europe LLP and Osborne Clarke LLP) for the Claimants

Lindsay Lane QC and Adam Gamsa (instructed by WilmerHale LLP) for the Defendants

Hearing dates: 5-7 and 13-14 October 2021

- - - - - - - - - - - - - - - - - - - - -

Covid-19 Protocol: This Judgment was handed down remotely by circulation to the parties’ representatives by email and release to Bailii. The date for hand-down is deemed to be 25 November 2021.

Mr Justice Meade:

Optis’ expert, Ms Johanna Dwyer

Apple’s expert, Prof Angel Lozano

The common general knowledge

Agreed common general knowledge

Background to LTE and RAN1

Division of radio resources within a cellular network

User data and control information

Resource allocation in LTE

Other downlink physical channels in the control region

Transmitting Downlink Control Information (DCI) on PDCCH

Search spaces and blind decoding of PDCCHs

Knowledge of hashing functions and random number generation

Technical Background - Collisions and Blocking

Disputed common general knowledge

Hashing functions v random number generators

Recursive v. self-contained

Claims of the Patent in issue

Prejudice/lion in the path

Technograph and number of steps

Pozzoli question 4 - unspecified claims

Assessment of the Ericsson function

A specific point on mod C

Same parameters for all aggregation levels

Assessment of the secondary evidence

Alternative routes - overall assessment

Conclusion on obviousness of the unspecified claims from Ericsson

Obviousness of the specified claims

Insufficiency of the unspecified claims

Introduction

1. This is “Trial C” in these proceedings. Trials A, B and F have already taken place and the parties and the general shape of the litigation need no further introduction.

2. There are three patents in issue at this trial, namely EP (UK) 2 093 953 B1, EP (UK) 2 464 065 B1 and EP (UK) 2 592 779 B1 (“the Patents”). They are all closely related and from the same family, and it is common ground that I can decide all the issues by consideration of claims 1 and 4 of EP (UK) 2 093 953 B1. I will refer to it hereafter as “the Patent” and references to paragraph numbers are to the paragraph numbers in its specification.

3. The Patent was originally applied for by LG Electronics Inc (“LGE”). Optis is the assignee. The Patent is declared essential to LTE. By the time of the PTR, Apple had conceded that the Patent is indeed essential and therefore infringed if valid. The concession was said to be for reasons of “procedural economy”; whether that was the actual motivation is irrelevant to this judgment.

4. Essentiality having been conceded, the issues at trial were in relation to validity only. At the PTR I directed, after argument, that Apple should open the case and call its evidence first.

Conduct of the trial

5. The trial was conducted in Court. All the oral evidence was given live. To mitigate the COVID risk, the number of representatives of the parties and their clients permitted at any one time was limited, and a live feed was made available for others, and for the public should they ask. I am grateful to the third-party providers engaged by the parties to make the technology work.

6. Mr Abrahams QC, Mr Whyte and Mr Conway appeared for Optis and Ms Lane QC and Mr Gamsa for Apple.

The issues

7. The remaining issues were:

i) The nature of the skilled person, where there was a major disagreement, although in the end I do not think it matters to my overall conclusion.

ii) The scope of the common general knowledge (“CGK”). There was significant dispute here too.

iii) Obviousness over Slides R1-081101 entitled “PDCCH Blind Decoding - Outcome of offline discussions” presented at a RAN1 meeting of 11-15 February 2008 (“Ericsson”), in conjunction with CGK.

iv) Obviousness over The Art of Computer Programming, Vol. 2 Seminumerical Algorithms, 2nd Ed (1981), Chapter 3 “Random Numbers”, pages 1-40 (“Knuth”) in conjunction with CGK.

v) The significance or otherwise of the secondary evidence of how RAN1 members were working at the time and how they reacted to Ericsson and to the alleged invention of the Patents.

vi) Two insufficiencies, run primarily as squeezes against obviousness (although Apple said that at least claim 1 was both obvious and insufficient).

8. Apple argued that both Ericsson and Knuth were CGK (or such that each would be found by routine research - see below), and its obviousness cases were essentially from Ericsson as a starting point and thence to Knuth, or from Knuth as a starting point and thence to Ericsson. The former was its primary case; the latter depended on a narrow definition of the skilled person so as to make Ericsson CGK. I return to this in more detail below.

9. In closing written submissions, Apple indicated that it wanted to reserve the right to argue that it ought to be entitled to mosaic Ericsson and Knuth even if neither was CGK and, as I understood the submission, the one would not be found by obvious research from the other. The basis for this submission was that UK law is out of step with the European Patent Office (“EPO”), and that in the EPO such a mosaic would be allowed by virtue of the problem-solution analysis. I merely note this indication; I was not asked to decide the argument or even to rule on whether it would be open to Apple to run it at all at such a late stage.

The witnesses

10. Each side called one expert witness. There was no fact evidence.

Optis’ expert, Ms Johanna Dwyer

11. Optis’ expert was Ms Johanna Dwyer. She gave evidence in Trial B as well, on which occasion she spoke to how ETSI worked and how its IPR Policy had developed. In my judgment on that Trial I described her career as follows:

“She worked for RIM/Blackberry for many years, and from 2005 until 2012 she was involved in various aspects of standards and IP. She participated in various 3GPP WGs and TSGs. She worked on IPR declarations and held senior positions in relation to system standards. Following an MBA in 2012 she has worked in more business-focused and consultancy roles, still very largely in cellular communications. She has given evidence in the Eastern District of Texas proceedings between the parties.”

12. Apple sought to suggest that Ms Dwyer was, by the priority date, not really engaged in technical work at all, but only on IP matters. This was based on the way she expressed things in her CV. I reject this criticism. Ms Dwyer plainly had and has very considerable technical expertise in telecoms. Her CV is a short one which she said was typical for Canada, where she lives, and did not seem to have been prepared for this or any other litigation, but to obtain work for her business. It therefore emphasises IP, since that is the expertise she now focuses on.

13. However, although that criticism was misplaced, Apple made much more headway in relation to whether Ms Dwyer’s technical knowledge and experience put her in a good position to give evidence on the specific issues in this case. I thought the following were important:

i) Ms Dwyer did not have any real, specific experience of RAN1. She was not an attendee of any meetings and she did not send any RAN1 emails (of which a repository exists).

ii) She had never done any RAN1 simulations and she was forbidden by Optis’ advisers from doing any for this litigation.

iii) She was not experienced with modular arithmetic. She had no practical experience before this litigation and had to look it up. She could not remember if she studied it at university.

iv) She made errors in modular arithmetic in her written evidence. Of course typographical errors happen to everyone and do not in themselves reflect on a witness, but she corrected one particular error without noticing that exactly the same mistake was repeated multiple times over adjacent pages.

v) She actively put forward ideas based on modular arithmetic which were wrong. In particular, she put forward two ways to turn the Ericsson function into an LCG which were wrong, the first being meaningless and the second still suffering from the C=16 problem in Ericsson (I explain below what these mean).

vi) She had a conception of what aspects of modular arithmetic would be CGK (or be found readily by the skilled person) which I found hard to make sense of: she said that the modulo function (which is just derivation of a remainder) would be CGK but that the distributive property of modular arithmetic (again explained below) would not. The latter is necessary to be able to see one of the problems with Ericsson, but it is not really very complicated.

14. Ultimately Ms Dwyer accepted that if the skilled person were someone that understands modular arithmetic to a greater degree than her, then she could not assist the Court with what the skilled person would do with a function that has modular arithmetic in it.

15. Ms Dwyer’s unfamiliarity with (1) RAN1, (2) simulations relevant to RAN1 and (3) modular arithmetic leads me to conclude that her evidence is of extremely limited help on the key issues in this case.

16. I also think that Ms Dwyer put far too much emphasis on the secondary evidence. Her first report, for example, had 49 pages about it. Often, both in written and oral evidence, she would address what the skilled person would do or think first and foremost by reference to what a specific person in RAN1 had done or said, without adequate caution about whether that person was representative of the skilled person, and without really addressing what the notional skilled person would do, or think.

17. None of this is to criticise in any way Ms Dwyer’s integrity or independence. Her answers were clear and direct and she did not, for example, try to avoid recognising where she lacked familiarity, or her mistakes. I remain of the view that I formed in Trial B that she is a very good expert in terms of her personal qualities. It is just that in this trial she was materially outside her area of expertise.

Apple’s expert, Prof Angel Lozano

18. Apple called Prof Angel Lozano. He is currently an academic, being a Full Professor at Universitat Pompeu Fabra. Following his doctorate in 1999 he was until 2008 a researcher at Bell Labs working on various wireless communications issues. In parallel he was an adjunct professor at Columbia University. From about 2006 he was commissioned to support the standardisation team of Lucent, Bell’s parent (later acquired by Nokia). As part of that he attended various 3GPP meetings as a RAN1 delegate in 2006/2007.

19. Apple thought that Optis was criticising Prof Lozano for being too academic, lacking real world experience. I do not think Optis was saying this, and if it was then it was unsustainable given his direct exposure to RAN1 meetings while working in industry at exactly the priority date.

20. Optis did say that Prof Lozano was afflicted by hindsight because he knew of the alleged invention from his real-world experience. I reject this. He merely said that he had some “minimum” familiarity with the PDCCH search space.

21. Optis also said that Prof Lozano was affected by hindsight in relation to the case of obviousness over Knuth because he approached it on the assumption that the reader knew about the problem to which the Patents are addressed in terms of PDCCH search space. I tend to agree with this and it is consistent with my reasons for rejecting the obviousness case starting from Knuth, but it is not relevant to the argument starting from Ericsson and there I think the professor’s approach was entirely appropriate.

22. Optis went on to make various more specific points which are addressed below. I did not think there was anything in them.

23. I found Prof Lozano overall to be an excellent witness. He was very clear in his explanations and short and direct in his answers on the whole. He had a practical approach too, as evidenced by the fact that in relation to some aspects of Knuth he said that the skilled person would not find it necessary to grapple with all the details of dense mathematical proofs but would instead undertake some simulations.

24. Prof Lozano was familiar with simulations and I think his approach to them was in line with what RAN1 workers would have done.

25. Overall therefore I found Prof Lozano a much more cogent witness than Ms Dwyer. Naturally, that does not mean that I should accept anything he said uncritically. In places, Counsel for Optis made some progress during a careful, detailed and sustained cross-examination, the attack starting from Knuth being the main one. But in general, where the issues are ones of balancing his views against those of Ms Dwyer, and in areas where the cross-examination did not dent his position, I prefer his evidence to Ms Dwyer’s on the basis that he was much better placed to explain what the skilled person would think, and to put himself in that person’s position.

26. I should say that this is not the same thing as trying to decide which of two well-qualified experts is in fact the closest approximation to the notional skilled person. That is not a legitimate way to approach patent cases. My findings are instead based on Prof Lozano being so much better able to put himself in the position of the skilled person by virtue of greater real-world familiarity and, rarely for a patent case, on Ms Dwyer lacking the minimum necessary understanding of the technical issues to be able to perform the same task. Prof Lozano also gave a much more appropriate degree of consideration to the secondary evidence.

27. Finally in relation to the experts, I should mention that Optis said that simulations that Prof Lozano had done for his reports were experiments and ought not to be permitted. Optis did not seek to have them excluded at the PTR, however, and instead took the approach that while they ought not to be excluded altogether they did not deserve to be given any weight. I reject this; Optis’ chance to have them excluded passed and if it wanted to undermine their weight then they should have been addressed during cross-examination, which they were not. However, their significance to my decision is modest.

The skilled person

28. Optis said that the skilled person would be a person engaged in work on RAN1. Apple said that the skilled person would be a person engaged in the more narrow field of the PDCCH specifically.

29. I considered the applicable law recently in Alcon v. Actavis [2021] EWHC 1026 (Pat), drawing heavily on the decision of Birss J, as he then was, in Illumina v. Latvia [2021] EWHC 57 (Pat). The particularly relevant passages are [68]-[70] in Illumina and [31] in Alcon.

30. At [68] in Illumina Birss J provided the following approach:

“68. I conclude that in a case in which it is necessary to define the skilled person for the purposes of obviousness in a different way from the skilled person to whom the patent is addressed, the approach to take, bringing Schlumberger and Medimmune together, is:

i) To start by asking what problem does the invention aim to solve?

ii) That leads one in turn to consider what the established field which existed was, in which the problem in fact can be located.

iii) It is the notional person or team in that established field which is the relevant team making up the person skilled in the art.”

31. And in Alcon at [31] I said:

“31. I intend to apply that approach. I take particular note of:

i) The requirements not to be unfair to the patentee by allowing an artificially narrow definition, or unfair to the public (and the defendant) by going so broad as to “dilute” the CGK. Thus, as Counsel for Alcon accepted, there is an element of value judgment in the assessment.

ii) The fact that I must consider the real situation at the priority date, and in particular what teams existed.

iii) The need to look for an ‘established field’, which might be a research field or a field of manufacture.

iv) The starting point is the identification of the problem that the invention aims to solve.”

32. In the present case, the problem that the invention aims to solve is not in dispute: it is a narrow one of how to allocate PDCCH search spaces.

33. The established field in which this problem was in fact located was RAN1. The PDCCH was not a field in its own right. Prof Lozano accepted that no one would have had a scope of work that matched it. It was too narrow for that. There was no RAN1 sub-group or sub-plenary devoted to it.

34. Thus I reject Apple’s argument that the skilled person would have been a PDCCH person in the sense Apple meant that. It is a “blue Venezuelan razor blade” kind of argument (see [62] in Illumina), though not nearly as extreme in degree as that imaginary example.

35. Optis’ view of the skilled person has the benefit that RAN1 clearly was an established field, and that the problem that the invention aims to solve is within its scope.

36. However, in my view Optis treated the analysis that the skilled person is a RAN1 person as an opportunity to carry out some inappropriate dumbing-down through dilution, of the kind deprecated in Mayne v. Debiopharm [2006] EWHC 1123 (Pat) and cited by Birss J in Illumina and recognised by me in Alcon. RAN1 is a broad umbrella and probably no one real person had the knowledge, skills and experience to cover the whole of its field. One can see that by the number of people participating in the discussions, and by the fact that major companies had teams on RAN1, either attending as delegates or participating in the background.

37. Where this is of potential practical importance in the present case is in Optis’ contentions that the skilled person would not be comfortable with, for example and in particular, modular arithmetic, or hashing functions/random numbers. This was basically a submission that the skilled person would lack the basic tools to do the task which Ericsson set - to assess its function and then improve it if necessary. The submission was rather grounded in the idea of the skilled person being an individual spread so thin across RAN1 that their CGK on any particular aspect of it must be very shallow. For the reasons I have just given, I reject this as a matter of principle and on the facts.

38. My conclusion in this respect is supported by the principle expressed by Pumfrey J in Horne v. Reliance [2000] FSR 90 (also cited in Illumina) that the attributes of the skilled person may often be deduced from assumptions which the specification clearly makes about their abilities. In the present case the specification of the Patents gives the skilled person some parameters for use as A, B and D in the LCG of the claims, but it assumes that with only the modest amount of help that the specification gives, the skilled person would be able to find more options for the parameters if they wanted to.

39. Thus I conclude that the skilled person in this case is a “RAN1 person” of the kind attending meetings or providing back-up, with the aptitudes and CGK appropriate to the tasks that RAN1 would require of them. In real life, as I say, the organisations involved will have had multiple people to give this coverage, but in the present case I can refer in the singular to “the skilled person”.

40. While the “dilution” point is of potential general importance, the RAN1 v PDCCH point only matters for the case over Knuth, since Optis accepts that the RAN1 skilled person starting from Ericsson would know or look up the material relied on by Apple.

The common general knowledge

41. There was no dispute as to the applicable legal principles: to form part of the CGK, information must be generally known in the art, and regarded as a good basis for future action. It is not a requirement for CGK that the skilled person would have memorised it; CGK includes information that the skilled person would refer to as a matter of course.

42. In relation to obviousness, the Court also may have regard to information which the skilled person would acquire as a matter of routine if working on the problem in question. Information of that kind is not CGK as such (although the effect may be very similar) but rather may be taken into account because it is obvious to get it. See KCI v. Smith & Nephew [2010] EWHC 1487 (Pat) at [108]-[112], approved on appeal at [2010] EWCA Civ 1260.

43. As is now usual, the parties submitted a document setting out the agreed matters of CGK, which I have used as the basis for the next section of this judgment. Where I have removed material it is because I think it of low relevance, not because I disagree with it.

44. The section on “Collisions and Blocking” was produced during trial after I asked what the position was on the state of CGK on that topic. Its contents are not accepted by Optis as being CGK because Optis (successfully) disputed that the skilled person is a PDCCH person (see above), but Optis does accept that its contents would be “apparent” to a RAN1 person reading Ericsson or “otherwise tasked specifically with the problem of control signalling on the PDCCH”.

45. I take this to mean that the contents of the section can by agreement be treated as CGK for the practical purposes of the case starting from Ericsson, and that is how the argument proceeded. In some cases it could be important that information found by routine research would not be known to the skilled person right at the outset of their consideration of the prior art, but only after they had identified a problem. But in the present case the skilled person would acquire the information about collisions and blocking straight away, since it is necessary to understanding Ericsson.

46. There was also a dispute about the relevant sources of CGK. Usually, CGK is proved by means of well-established textbooks and the like. In this field there was no textbook specific to LTE. But in any event, the dispute was really about the path from Ericsson to Knuth, and I deal with it in the context of obviousness. At this stage I merely observe that what one is considering is whether particular information was CGK; it is legitimate for a party to put forward materials as examples of how information would be obtained, without necessarily saying that those materials are themselves CGK.

Agreed common general knowledge

Background to LTE and RAN1

47. LTE stands for “Long Term Evolution” and is (or at least became) a “fourth generation” (4G) Radio Access Network (RAN). It succeeded the second and third generation systems (2G and 3G). It was driven by EU, US, Chinese, Japanese, South Korean and (to some extent) Indian initiatives.

48. In 3GPP, Technical Specification Group (TSG) RAN Working Group 1 (RAN1) is responsible for the physical layer (L1) specifications.

49. There was no actual LTE network at the Priority Date (19 February 2008). The first technical specifications defining LTE were published in 2007 as part of Release 8, which was the Release current at the Priority Date.

Division of radio resources within a cellular network

50. In a cellular communications system, a “resource” is a term used to refer to the way in which the radio spectrum is divided up and allocated so that different transmissions can be distinguished from one another. Resources can be defined in different ways, such as by a given period of time, at a particular frequency, or using particular codes.

51. In LTE, as with other cellular systems, the radio resource is divided between resources used for transmissions from UEs (i.e. mobiles) to the eNodeB (i.e. base station), which is referred to as the “uplink”, and resources used for transmissions from the eNodeB to UEs, referred to as the “downlink”.

52. In addition to the division between uplink and downlink transmissions, cellular networks also need a way of allocating resources between transmissions from and to different UEs, and between transmissions on different channels (e.g., those for sending control information or user data). These techniques are referred to as multiple access technologies.

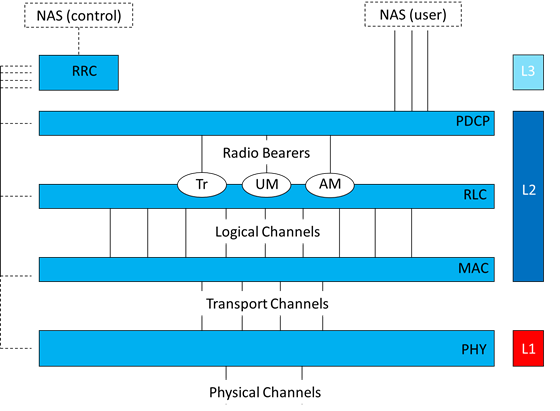

Protocols and Layers

53. A common way of conceptualizing mobile communications systems is the Open Systems Interconnection (OSI) model. The OSI model divides the processes by which data is transmitted and received into different protocol ‘layers’, in which each layer relates to particular functionality. A group of layers that communicate with each other to transmit and receive data is referred to as a protocol “stack”. When transmitting, data packets are passed from higher layers in the stack down to lower layers.

54. At each layer, data is operated on according to the protocols specified for that layer, e.g., header information may be added or removed, or data packets combined or separated, before being passed up or down the stack to the next layer. Each layer in the transmitting entity can be thought of as being in logical communication with its peer in the receiving entity.

55. A simplified version of the protocol layer architecture within LTE is shown in Figure 2 below.

Figure 2: Protocol layer architecture within LTE

56. Layer 1 (L1) is the Physical Layer (or PHY) which is responsible for the processes required to prepare data for transmission and transmitting it over the air interface (and on the receiving side, converting radio signals into digital format for passing up to higher layers).

57. Layer 2 (L2) is the data link layer. It comprises the Packet Data Convergence Protocol (PDCP), Radio Link Control (RLC) and the Medium Access Control (MAC) sub layers. In broad terms, Layer 2 is responsible for managing the flow of information between the UE and the Access Network. This involves, for example, data compression and decompression, combining and segmenting data packets, error detection and retransmission of data, resource management, and determining a suitable transport format to pass data on to the PHY.

58. Layer 3 (L3) is the network layer. This comprises the Radio Resource Control (RRC). The RRC is broadly responsible for the signaling required to set up, configure and take down connections between the UE and the network and for managing the protocols to be applied to different services.

User data and control information

59. Two types of information may be transmitted up and down the stack and over the air: namely, user plane data and control plane information. User plane data refers to data transferred between an application and its peer application at the other end of an end-to-end connection (e.g., voice or packet data between two mobile users). Control plane information comprises messages used to configure and manage the network, such as signaling to indicate whether a packet has been received accurately, or scheduling information.

Channels and PDCCH

60. A “channel” refers to a communication pathway used for a specific purpose or for sending information of a particular type, such as certain kinds of control information, or user data. Data sent on a given channel is configured in a particular way according to a set of rules specified by the standard.

61. In LTE, what defines a channel also depends on the level in the protocol stack. Between the RLC and MAC, “logical channels” are used to carry information for certain purposes. At the MAC layer, two or more logical channels may then be combined into a single “transport channel”, for onward transmission to the physical layer.

62. At the physical layer, transport channels are mapped to “physical channels”, which are configured to have a particular structure and to use a particular set of resources. LTE has some physical control channels which carry signaling necessary to configure transmissions on the physical layer, such as resource allocations. In the downlink, these include the PDCCH in LTE, described in more detail below. The PDCCH is one of seven different channel types that can carry control information. PDCCH may refer to a specific single control channel between the eNodeB and an individual UE, or refer to all PDCCH channels.

Resource allocation in LTE

63. Multiple possible channel bandwidths can be used in LTE, which are: 1.4 MHz, 3 MHz, 5 MHz, 10 MHz, 15 MHz, and 20 MHz. The channel bandwidth limits the resources available.

64. In LTE, radio resources are divided up according to a two-dimensional resource space in the time and frequency domains:

i) In the time domain, LTE uses units of 10 ms called a radio frame, where each radio frame is further divided into ten 1 ms subframes. Each 1 ms subframe is further divided into two “slots” of 0.5 ms duration.

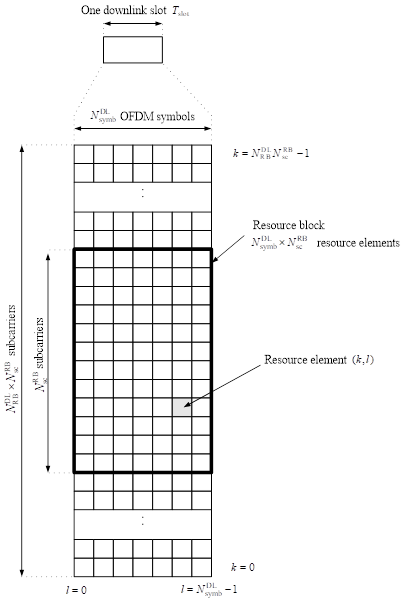

ii) In the frequency domain, the overall bandwidth is divided up into a number of evenly spaced narrow frequency bands called subcarriers. Data subcarriers in LTE span 15 kHz regardless of the channel bandwidth. The symbol time is the inverse of the subcarrier spacing, therefore the symbol time in LTE is 66.67 µs.
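The time and frequency figures in paragraph 64 can be checked with a short calculation. The following is an illustrative sketch only: the constant and function names are hypothetical and are not taken from the judgment or from any 3GPP specification. Only the numerical values (10 ms frame, ten subframes, two slots, 15 kHz subcarrier spacing) come from the text above.

```python
# Illustrative sketch: names are assumptions; values are those quoted in paragraph 64.
FRAME_MS = 10.0                 # duration of one radio frame, in milliseconds
SUBFRAMES_PER_FRAME = 10        # each radio frame is divided into ten subframes
SLOTS_PER_SUBFRAME = 2          # each subframe is divided into two slots
SUBCARRIER_SPACING_HZ = 15_000  # subcarrier spacing, in hertz


def subframe_ms() -> float:
    """Duration of one subframe in milliseconds."""
    return FRAME_MS / SUBFRAMES_PER_FRAME


def slot_ms() -> float:
    """Duration of one slot in milliseconds."""
    return subframe_ms() / SLOTS_PER_SUBFRAME


def symbol_time_us() -> float:
    """Symbol time in microseconds: the inverse of the subcarrier spacing."""
    return 1e6 / SUBCARRIER_SPACING_HZ


if __name__ == "__main__":
    print(f"subframe: {subframe_ms()} ms")
    print(f"slot: {slot_ms()} ms")
    print(f"symbol time: {symbol_time_us():.2f} us")
```

Run as a script, this reproduces the 1 ms subframe, 0.5 ms slot and 66.67 µs symbol time stated in the paragraph.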

65. Resources in LTE are allocated in units called Resource Blocks (RBs). Each RB comprises one slot in time and spans twelve 15 kHz subcarriers in the frequency domain (180 kHz). In the time domain, each slot is divided into either six or seven OFDM symbols, depending on how it is configured, each of which spans the 12 subcarriers of the RB. (Six symbols are used when the RB is configured to use a technique referred to as “extended cyclic prefix”. It is not necessary to describe this any further for the purposes of the issues in this case.) An illustration of the resource grid in the downlink showing a resource block with seven symbols is shown in Figure 3, which is extracted from TS 36.211 (as Figure 6.2.2-1).

Figure 3: Downlink resource grid as depicted in Figure 6.2.2-1 of TS 36.211

66. As shown in Figure 3, the smallest unit in time and frequency is a Resource Element (RE), which consists of a single subcarrier in frequency and a single symbol in duration (time).
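The relationship between Resource Blocks and Resource Elements described in paragraphs 65 and 66 can likewise be sketched as a calculation. Again this is only an illustration under stated assumptions: the names are hypothetical, and the only inputs are the figures given in the text (twelve 15 kHz subcarriers per RB, and six or seven symbols per slot).

```python
# Illustrative sketch: names are assumptions; values are those quoted in paragraph 65.
SUBCARRIERS_PER_RB = 12      # subcarriers spanned by one Resource Block
SUBCARRIER_SPACING_KHZ = 15  # spacing of each subcarrier, in kilohertz


def rb_bandwidth_khz() -> int:
    """Bandwidth spanned by one Resource Block, in kilohertz."""
    return SUBCARRIERS_PER_RB * SUBCARRIER_SPACING_KHZ


def resource_elements_per_rb(symbols_per_slot: int) -> int:
    """Number of Resource Elements (one subcarrier by one symbol) in one RB.

    symbols_per_slot is 7 in the configuration shown in Figure 3,
    or 6 when the extended cyclic prefix is used.
    """
    if symbols_per_slot not in (6, 7):
        raise ValueError("a slot holds either six or seven symbols")
    return SUBCARRIERS_PER_RB * symbols_per_slot


if __name__ == "__main__":
    print(rb_bandwidth_khz())           # 180
    print(resource_elements_per_rb(7))  # 84
    print(resource_elements_per_rb(6))  # 72
```

On these figures a seven-symbol RB contains 84 REs and a six-symbol RB contains 72, before any REs are reserved for the purposes described in paragraph 67.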

67. Within a given subframe (comprising two slots), some REs may be used for data (uplink or downlink) whereas other REs are reserved for particular purposes, for example control channels, broadcast channels, indicator channels, reference signals and synchronization signals. Depending on their location, these reserved portions of the subframe have an impact on the REs available for downlink or uplink data transmissions in a given RB, and on the total REs available for downlink control information.

Scheduling

68. LTE makes use of shared (amongst multiple UEs) physical channels for both data and control signaling in the downlink and the uplink. The resource allocation provided in control messages from the eNodeB to the UEs within a cell indicates the resources in the downlink channel that contain downlink transmissions for a given UE, and which resources in the uplink channel are assigned to a given UE to make uplink transmissions. The choice of which resources are assigned to which UE is called “scheduling” and is managed by the eNodeB. The minimum resource unit for scheduling purposes is one RB. In addition to the resource allocation, the eNodeB specifies the parameters of the data transmissions sent in each scheduled RB. In LTE, this control signaling is handled in the physical layer.

69. Explicit control signaling indicating to the UE where to find the downlink data intended for it and the relevant parameters needed to successfully decode that downlink data avoids considerable additional complexity which would arise if UEs had to search for their data on the Physical Downlink Shared Channel (PDSCH) amongst all possible combinations of resource allocation, packet data size, and modulation and coding schemes.

70. When making scheduling decisions, the eNodeB takes into account various considerations, such as the amount of downlink data it needs to transmit to each UE, the amount of data each UE has to send in its uplink buffer, the quality of service requirements of that data, the signal quality for each UE, and what antennas are available. The scheduling algorithms used by eNodeBs are not specified in the standard and are left to the implementation.

Downlink control in LTE

72. The DCI is sent in PDCCHs in the control region at the start of the downlink subframe. The DCI contains critical information for the UE, because it informs the UE about its uplink resource allocation and where to find its information on the downlink. The control region may span from 1 to 3 symbols.

73. The design of the PDCCH included the UE procedure for determining its PDCCH assignment. Aspects of the PDCCH assignment procedure and how UEs would monitor the control region to find PDCCH for them to obtain DCI were still in development at the Priority Date. The following paragraphs set out the basic parameters of the PDCCH as far as it had been specified in the relevant TSs at the Priority Date.

74. The format of the PDCCH is set out in Section 6.8.1 of TS 36.211 v.8.1.0 (the version current at the Priority Date):

6.8.1 PDCCH formats

The physical downlink control channel carries scheduling assignments and other control information. A physical control channel is transmitted on an aggregation of one or several control channel elements (CCEs), where a control channel element corresponds to a set of resource elements. Multiple PDCCHs can be transmitted in a subframe.

The PDCCH supports multiple formats as listed in Table 6.8.1-1.

Table 6.8.1-1: Supported PDCCH formats

| PDCCH format | Number of CCEs | Number of PDCCH bits |
|---|---|---|
| 0 | 1 | |
| 1 | 2 | |
| 2 | 4 | |
| 3 | 8 | |

75. It had been determined that control channels would be formed by the aggregation of control channel elements (CCEs), where a CCE corresponds to a set of REs. When the eNodeB determines that messages need to occupy multiple CCEs, they are sent by the eNodeB using CCE aggregation. Aggregation of the CCEs had to be done in a structured way. It had been decided that the PDCCH message could be in one of four formats (0, 1, 2 and 3), corresponding to CCE aggregations of 1, 2, 4 and 8, respectively. An aggregation level of 4, for instance, means that four consecutive CCEs are combined. The number of REs that each CCE would comprise (and therefore the number of bits in the different PDCCH formats), and whether this would be determined according to the system bandwidth, had not been specified in the relevant TS at this stage.
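
The relationship between PDCCH format and CCE aggregation described in this paragraph can be sketched as follows. This is an illustration only: the helper `smallest_format_for` and its argument `cces_needed` are hypothetical, and since the number of bits per CCE had not been specified at this stage, the sketch works in whole CCEs rather than bits.

```python
# PDCCH format -> number of aggregated CCEs, per Table 6.8.1-1 and paragraph 75.
PDCCH_FORMAT_TO_CCES = {0: 1, 1: 2, 2: 4, 3: 8}


def smallest_format_for(cces_needed):
    """Hypothetical helper: the lowest PDCCH format that offers enough CCEs."""
    for fmt in sorted(PDCCH_FORMAT_TO_CCES):
        if PDCCH_FORMAT_TO_CCES[fmt] >= cces_needed:
            return fmt
    raise ValueError("no PDCCH format aggregates that many CCEs")


print(smallest_format_for(1))  # 0
print(smallest_format_for(3))  # 2 (there is no 3-CCE aggregation, so 4 CCEs are used)
print(smallest_format_for(8))  # 3
```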

76. It had also been decided that the PDCCH would occupy a region at the beginning of each subframe, referred to as the “control region”. It had been decided that the region in time occupied by the control region could be the first one, two or three symbols, which could be altered dynamically by a Control Format Indicator (CFI), which is sent on the Physical Control Format Indicator Channel (PCFICH).

77. The UE would be required to monitor a set of candidate control channels in the control region as often as each subframe (see section 9.1 of TS 36.213 v.8.1.0):

9.1 UE procedure for determining physical downlink control channel assignment

A UE is required to monitor a set of control channel candidates as often as every sub-frame. The number of candidate control channels in the set and configuration of each candidate is configured by the higher layer signaling.

A UE determines the control region size to monitor in each subframe based on PCFICH which indicates the number of OFDM symbols (l) in the control region (l=1,2,or 3) and PHICH symbol duration (M) received from the P-BCH where ![]() . For unicast subframes M=1 or 3 while for MBSFN subframes M=1 or 2.

Other downlink physical channels in the control region

78. In addition to PDCCHs, some of the REs in the control region are used for other purposes. The UE can determine for each subframe which REs have been allocated by the eNodeB for the PDCCH. It does so based on system characteristics and on parameters broadcast by the network:

Transmitting Downlink Control Information (DCI) on PDCCH

80. Different DCI formats for sending different kinds of control information were created. At the Priority Date, several different DCI formats had been defined.

81. The format of the DCI messages sent on the PDCCH is determined in the eNodeB. The DCI format used affects the size of the DCI message, and hence the minimum number of CCEs required to transmit it. A DCI message may be short enough to fit in a single CCE. For longer DCI messages, more than one CCE is needed. DCI formats that are larger in size can be sent in a PDCCH format with a higher CCE aggregation (e.g., PDCCH format 2 which aggregates 4 CCEs in a PDCCH, or PDCCH format 3 which aggregates 8 CCEs in a PDCCH). The CCE aggregation level required also depends on the level of coding redundancy required to provide robust signaling to a UE. When the channel conditions to a given UE are poor, for instance, the eNodeB will include more error correction information, resulting in a longer message. (The terms “quality” or “geometry” are sometimes used to describe channel conditions.) A DCI message sent at a higher coding rate, providing greater redundancy, requires more CCEs for the PDCCH than the same DCI message sent at a lower coding rate.

UE ID

82. Each UE in a cell is given a specific Radio Network Temporary Identifier (RNTI) for identification of that UE in that cell (the C-RNTI). The network issues the RNTI. The C-RNTI is 16 bits long and could theoretically be a number ranging from 1 to 65535. It was referred to in the evidence and in many documents at trial simply as the “UE ID”.

Search spaces and blind decoding of PDCCHs

83. Once the DCI message is generated and channel coded according to the required DCI and PDCCH format, it is mapped onto CCEs in the control region. A PDCCH that may carry control information for a UE is described as a “PDCCH candidate” for that UE. Once the UE has ascertained which CCEs are allocated to the PDCCH, it needs to analyze the PDCCH to determine whether its content is directed to that UE (known as “blind decoding”, also referred to below). Section 9.1 of TS 36.213 v.8.1.0 specified that each UE monitors a set of PDCCH candidates within the control region to find its own control information.

84. The RNTI is used to scramble the CRC of the PDCCH. This process is also called “masking” the CRC with the UE ID.

85. To detect whether a PDCCH contains control information for a UE, the UE searches for its ID in the masked CRC of the PDCCH. This is referred to as “blind decoding”.
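
The masking and checking described in paragraphs 84 and 85 can be sketched as follows. This is a simplified illustration under stated assumptions: the masking is modelled as a bitwise XOR of a 16-bit CRC with the 16-bit UE ID, the CRC is the CRC-CCITT routine from Python's standard library used as a stand-in, and the payload bytes are invented. Channel coding and the remainder of the physical layer processing are omitted.

```python
import binascii


def crc16(payload: bytes) -> int:
    """16-bit CRC of the payload (standard-library CRC-CCITT, used as a stand-in)."""
    return binascii.crc_hqx(payload, 0)


def mask_crc(payload: bytes, rnti: int) -> int:
    """eNodeB side: scramble (mask) the CRC with the UE ID, modelled as XOR."""
    return crc16(payload) ^ (rnti & 0xFFFF)


def is_for_me(payload: bytes, masked_crc: int, my_rnti: int) -> bool:
    """UE side: unmask with own ID and see whether the CRC checks out."""
    return (masked_crc ^ (my_rnti & 0xFFFF)) == crc16(payload)


payload = b"example DCI bits"            # invented payload
masked = mask_crc(payload, 0x1234)       # message addressed to UE 0x1234
print(is_for_me(payload, masked, 0x1234))  # True
print(is_for_me(payload, masked, 0x4321))  # False
```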

86. The number of PDCCH candidates at each aggregation level, and the number of different DCI formats that each PDCCH aggregation level might carry, together determine the maximum number of blind decoding attempts required by a UE in a search space. As there are several different downlink control formats, and four different PDCCH formats (aggregation levels), the number of PDCCH candidates monitored may be large. Attempting to decode the entire control region, applying all the possible PDCCH formats and DCI formats, would be a substantial burden on the UE, especially when the control region spans 3 symbols. To reduce this burden, a UE would only be required to attempt to decode a subset of all possible PDCCH candidates. This subset was called a search space.
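
The way the decoding burden scales can be sketched with a short calculation. The candidate counts used here (6, 6, 2 and 2) are those of the illustration discussed later in paragraph 97. The figure of two DCI sizes per aggregation level and the figure of 32 CCEs in the control region are assumptions made purely for illustration, as is the assumption that candidates start only at multiples of the aggregation level.

```python
def max_blind_decodes(candidates_per_level, dci_sizes_per_level):
    """Upper bound on decoding attempts: candidates times DCI sizes, summed over levels."""
    return sum(
        candidates_per_level[level] * dci_sizes_per_level[level]
        for level in candidates_per_level
    )


levels = (1, 2, 4, 8)
dci_sizes = {level: 2 for level in levels}      # assumption: two DCI sizes per level

# Search space sized as in the illustration at paragraph 97.
search_space = {1: 6, 2: 6, 4: 2, 8: 2}

# Whole control region, assuming 32 CCEs and aligned start positions.
n_cce = 32
whole_region = {level: n_cce // level for level in levels}

print(max_blind_decodes(search_space, dci_sizes))   # 32
print(max_blind_decodes(whole_region, dci_sizes))   # 120
```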

Knowledge of hashing functions and random number generation

88. A hashing function is any function that can be used to map data of potentially arbitrary size to fixed sized values. In other words, it is a function that allocates a large number of inputs to a small known number of outputs.
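
A toy example may help to make the definition concrete. The function below is a minimal sketch and is not any function discussed in the evidence; the multiplier 31 and the name `toy_hash` are arbitrary choices made for illustration.

```python
def toy_hash(data: bytes, num_buckets: int) -> int:
    """Map input of any length to one of num_buckets outputs (0 .. num_buckets - 1)."""
    value = 0
    for byte in data:
        # Fold each byte in, keeping the running value within the output range.
        value = (value * 31 + byte) % num_buckets
    return value


# Inputs of very different sizes all land in the same small set of outputs.
for message in (b"a", b"a much longer input", bytes(1000)):
    print(len(message), toy_hash(message, 8))
```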

90. The skilled person knew that computer languages such as MATLAB, C and C++ have pseudo-random number generators built into them and would have used such generators.

91. This is one of a number of areas of the CGK where there was some agreement (which I have just set out) but also areas of disagreement. I return to the disputed aspects below. From here on I refer to “random” numbers as generated by computers, even though in fact they are deterministic and actually pseudo-random, as explained above. This usage was adopted pretty consistently at trial.

Simulations

92. MATLAB was one software package used for running simulations. There was a minor disagreement about simulations, which I cover below.

Processor word size

Modulo operation

95. The skilled person would be familiar with the modulo operation. This was the limited extent of agreement about the CGK on modular arithmetic; I cover the disagreement below.

Technical Background - Collisions and Blocking

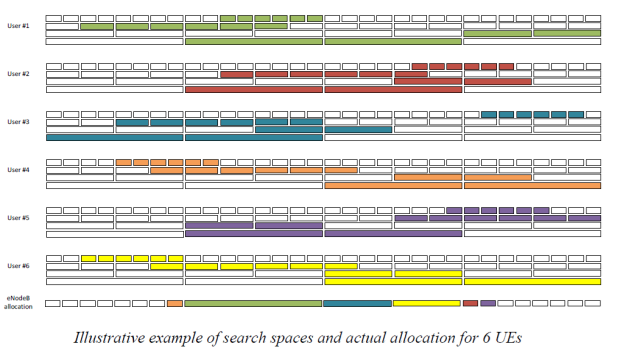

97. In the specific context of search spaces on the PDCCH, a “collision” refers to the search spaces completely overlapping (i.e., starting at the same location). To illustrate this concept, the example below shows one way that the search spaces for several UEs might be arranged. The search space for each UE is shown in a different colour. In the diagram, each search space comprises 6 aggregations at each of aggregation levels 1 and 2, and 2 aggregations at each of levels 4 and 8.

98. For each subframe, the eNodeB chooses where within each UE’s search space to allocate DCI messages for that UE. One possible such choice is shown in the “eNodeB allocation” section of the diagram above. In this example, the eNodeB is sending one message for each UE. The eNodeB has chosen the aggregation level for each UE, and identified an arrangement for all the messages so that each UE’s message is within that UE’s search space at the appropriate aggregation level. This process is repeated in each subframe.

100. In other circumstances, search spaces can completely overlap - i.e. there is a collision - but there is no blocking. For instance, in the example in the previous paragraph, if the eNodeB only wanted to send messages at aggregation level 8, to two of the three UEs, it could do so.

101. Blocking may also arise because of the interaction between aggregation levels. In the diagram above, the eNodeB could send an aggregation level 8 message to each of UEs 1-4 but doing so blocks any messages to UEs 5 and 6.

102. If only two UEs are being considered and there are enough CCEs available, there will never be a situation where a message to one UE blocks a message being sent to the other. In practice, blocking arises because there are more than two UEs and/or there are too few CCEs. (This paragraph assumes that the search space at each aggregation level contains more than one CCE aggregation at that aggregation level.)

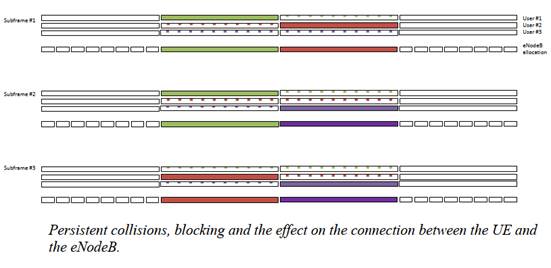

103. A collision is not the same as blocking. From a system viewpoint, it is blocking, not collisions, that is important. It would also not be correct to think of blocking as meaning that communication as a whole is prevented. In the example above, each of the three UEs can still receive a DCI message in two out of every three subframes. This is illustrated further in the figure below:

104. The figure shows three subframes with 32 CCEs for PDCCH in each, and illustrates the search spaces for three particular UEs at aggregation level 8. In this illustration, all other aggregation levels are omitted, and the CCEs forming part of the search space but not used for a DCI message are shown shaded. In this case, there is a persistent collision between the three UEs, meaning that each UE has the same search space in each of the three subframes (CCE#8 to 23, i.e., two aggregations at aggregation level 8). So the eNodeB cannot send a DCI message at aggregation level 8 to each of these UEs in each of these subframes. There is blocking; some communication is prevented. However, the eNodeB could still send DCI messages to each UE in two out of the three subframes, and the overall effect is therefore to degrade the connection between the eNodeB and each UE, without necessarily breaking it.
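
The “two out of three subframes” point can be reproduced with a short sketch. It assumes, purely for illustration, that the eNodeB serves the colliding UEs in rotation; the scheduling algorithm is left to implementation and nothing in the evidence says that rotation is what an eNodeB would actually do. The function name and arguments are hypothetical.

```python
def serve_in_rotation(ue_ids, candidates_per_subframe, num_subframes):
    """Count how often each UE gets a message when all UEs share one search space.

    In each subframe only `candidates_per_subframe` messages fit, so the UEs
    are served in rotation (an assumed policy, for illustration only).
    """
    served = {ue: 0 for ue in ue_ids}
    n = len(ue_ids)
    for subframe in range(num_subframes):
        for slot in range(min(candidates_per_subframe, n)):
            ue = ue_ids[(subframe * candidates_per_subframe + slot) % n]
            served[ue] += 1
    return served


# Three UEs, two aggregation-level-8 candidates (CCE#8 to 23), three subframes.
print(serve_in_rotation([1, 2, 3], 2, 3))  # {1: 2, 2: 2, 3: 2}
```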

Disputed common general knowledge

105. I now turn to the areas where there was either a wholesale dispute about CGK, or where there was some agreement (set out above) and residual disagreement.

LCGs

106. The following information is not information that the skilled person would have at the outset of their consideration of Ericsson. It only comes in if they found a problem with the Ericsson function and looked to the literature in the way that Apple alleges. Since, for reasons that I explain below, I accept Apple’s submissions in that respect, the following information is relevant to obviousness though not CGK as such, and I set it out here for readability of the following sections of this judgment. I explain below when I deal with obviousness how the skilled person would get to the information and in particular to Knuth. I also deal there with the limitations of LCGs that the skilled person would become aware of in getting to Knuth, and from routine research generally. At this stage, I am just setting out the basics.

107. An LCG is a random number generator with the following form:

X_{n+1} = (A*X_n + B) mod D

108. Various different letters are used in the evidence and exhibits for A, B and D. I am going to use A, B and D where possible because those are the letters used in the claims and in important parts of the evidence.

109. The form of LCG set out above shows how and why it is recursive. The LCG will generate a sequence of numbers in the following way (which I express in somewhat lay terms for present purposes):

i) You take the previous value in the sequence, which was X_n.

ii) You multiply it by A. A is thus the multiplier.

iii) You add B. B is thus the increment.

iv) You take mod D of the result. D is the modulus.

v) The process is repeated.
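
The five steps above can be sketched in a few lines of code. The parameter values in the usage example (A = 5, B = 3, D = 16, seed 1) are small numbers chosen only so that the arithmetic can be followed by hand; they are not values from the evidence.

```python
from itertools import islice


def lcg(seed, a, b, d):
    """Generate the LCG sequence: each value is (a * previous + b) mod d."""
    x = seed
    while True:
        x = (a * x + b) % d   # multiply by A, add B, take mod D
        yield x               # the result becomes the next "previous value"


print(list(islice(lcg(seed=1, a=5, b=3, d=16), 5)))  # [8, 11, 10, 5, 12]
```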

110. The sequence has to start somewhere, and that is called the seed (or start value, or initial value, or similar).

111. Eventually the sequence will repeat. The number of iterations before repeating is called the period. The period cannot, for obvious reasons, exceed the modulus, but it may be less. Maximising the period depends on the parameters chosen. Poorer choices will lead to a shorter period.
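
The effect of parameter choice on the period can be seen in a small sketch. The two parameter sets below are toy values chosen for illustration, not values from the evidence; the first happens to achieve the maximum period for a modulus of 16 and the second does not.

```python
def lcg_period(seed, a, b, d):
    """Length of the cycle that the LCG sequence eventually settles into."""
    first_seen = {}
    x = seed
    step = 0
    while x not in first_seen:
        first_seen[x] = step
        x = (a * x + b) % d
        step += 1
    # x has now recurred; the period is the distance back to its first appearance.
    return step - first_seen[x]


print(lcg_period(seed=0, a=5, b=3, d=16))  # 16, the whole modulus
print(lcg_period(seed=1, a=3, b=0, d=16))  # 4, a poorer choice
```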

Experience of RNGs/LCGs

112. This is perhaps more a point about the skilled person than CGK, but I will deal with it here because that is where Optis put it. The issue is over how much experience the skilled person would have of practically using random number generators (“RNGs”) within RAN1.

113. Prof Lozano gave a couple of examples of the use of RNGs in 3GPP. One was from RAN2 and left to implementation the specific RNG to be used. So it is not evidence in itself of any particular engineers in 3GPP using RNGs, but it provides some indirect evidence that it was expected that such engineers could conduct the necessary implementation.

114. The second example was of the use of an LCG, where the function appeared to have been taken (“stolen”) straight from a source called Numerical Recipes in C (“NRC”, which I discuss further below) without (Optis said) showing any assessment of the appropriateness of what was chosen. Optis argues that this shows that the skilled person could only perform uncomprehending lifting of RNGs/LCGs from the literature. I do not think it shows that that was all that they could do, just that that is all they did on that occasion. This conception of the skilled person as not understanding what they were doing is wrong in principle, in my view, and also inconsistent with the teaching in NRC and Knuth encouraging the reader to think and understand their choices, and with what the Patents expect of their reader.

115. So these examples do not help Optis. They provide some modest evidence of the actual use of RNGs/LCGs in the field. I accept that in RAN1 (or RAN2) the need to choose a random number generator arose relatively infrequently, but in itself that does not mean much.

116. In a similar vein, Optis argued that there were cases referred to in the documents such as NRC of skilled mathematicians botching LCG implementations. I accept that this would lead the skilled person to exercise care, which is what the law deems and requires to be used in any event, but I do not accept that it would deter the skilled person from using an LCG if it otherwise appeared suitable. Optis also said that the same considerations would lead the skilled addressee to stick to off the shelf solutions. I reject this for very similar reasons, but in any case it is clear that whatever the skilled person did, including with an off the shelf solution, they would have to check that they had made the right choice, and care would be needed then too. They would also want to think about whether the choice they made was overengineered for the application and hardware, and that too would require understanding.

117. All in all therefore I reject the argument that the skilled person would be someone who did not understand what they were doing with RNGs and/or thereby lacked confidence so that they only used off the shelf solutions.

Hashing functions v random number generators

118. This dispute was related to the dispute about self-contained v. recursive functions which I cover in connection with obviousness, below. Optis argued that it was CGK that hashing functions are necessarily self-contained while RNGs are necessarily recursive, and that for this and other reasons the two were regarded as quite separate and distinct concepts.

119. Indeed, Counsel for Optis began his closing submissions, Optis’ main oral argument in the case, by saying that “this is a case about hashing functions” and Apple wanted to “make this a case about random number generators”.

120. I think this was artificial; an attempt to create a conceptual difference that would not be seen by the skilled person to matter. My reasons include:

i) Counsel for Optis had opened the case by saying that “Hashing functions do involve randomisation as a means of achieving an even or uniform distribution, as your Lordship has obviously got”.

ii) The goal of a hashing function is to spread a large number of inputs evenly over a smaller number of outputs. The even spread may be achieved by mimicking a random spread.

iii) Ms Dwyer accepted that hashing functions that are uniform and random are good.

iv) The terms “hashing function” and “randomisation function” are used interchangeably in the art, including in the secondary evidence relied on by Optis, as Ms Dwyer also accepted. Ms Dwyer’s own written evidence in relation to Ericsson referred to the “randomization” and “randomness” of the hashing function.

v) Knuth’s chapter on hashing cross-refers to the chapter on random numbers. Optis sought to downplay this, but Ms Dwyer accepted that the direction was to look to the chapter on random numbers in connection with getting a hashing function whose overall output was random.

121. I therefore reject the argument that it was CGK (or would emerge from routine research) that hashing functions and random number generators were separate and distinct from one another.

Recursive v. self-contained

122. This was another point which bridged CGK and obviousness. It is convenient to deal with it here under CGK, as Optis did.

123. Optis argued that there was a fundamental difference between a recursive function (which an LCG is, because the production of each number uses the previous answer as an input as I have explained above) and a free-standing or “self-contained” function such as that in Ericsson, where the nth number in the sequence produced can be calculated directly without deriving the previous ones first.

124. Prof Lozano explained that it was possible to write a recursive function in self-contained form, and he showed how to do that for the LCG of the Patents’ claims. His approach assumed that C would be constant; although that is true, I accept his evidence that it would be possible to provide a self-contained version for varying C.

125. However, the more basic point made by Ms Dwyer in response was that the self-contained form would require massive calculation that was not achievable in reality because it would involve raising A (which is for example 39827) to the power of 10 for the 10th subframe.

126. Prof Lozano retorted that it was not necessary to calculate 39827^10 as such, because in modular arithmetic one could repeatedly multiply by 39827 and take mod D; the numbers would be kept much smaller.

127. In the last step of this debate in the written evidence, Ms Dwyer pointed out that Prof Lozano’s use of modular arithmetic just meant doing the recursion in question as part of the calculation of the purportedly self-contained form of the function. Following the oral evidence, I agree with her on this. So whether or not one actually calls it self-contained as a matter of semantics, Prof Lozano’s reformulation would still require the skilled person to use recursive methods of calculation.
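
The debate in paragraphs 125 to 127 can be illustrated with a sketch. It is not Prof Lozano's reformulation, which is not reproduced in this judgment; it assumes B = 0 and uses the values A = 39827 and D = 65537, for which the nth term is simply A^n times the seed, mod D. Python's three-argument `pow` arrives at the modular power by repeated modular multiplication, so the “self-contained” form still performs iterated multiply-and-reduce steps internally.

```python
A, D = 39827, 65537   # B is taken to be 0 in this sketch


def recursive_term(seed, n):
    """nth term by stepping the LCG n times from the seed."""
    x = seed
    for _ in range(n):
        x = (A * x) % D
    return x


def self_contained_term(seed, n):
    """nth term computed directly, using a modular power instead of A**n."""
    return (pow(A, n, D) * seed) % D


seed = 12345   # arbitrary example seed
print(recursive_term(seed, 10) == self_contained_term(seed, 10))  # True
print(len(str(A ** 10)))   # number of digits in 39827^10 if computed "as such"
```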

128. For what it is worth, it is also possible to convert a self-contained function into a recursive form.

129. Although Ms Dwyer was therefore right, and there is a difference between self-contained and recursive functions, I did not think that there was any reason for the skilled person to care, or to be worried by using a recursive function, or to regard the difference as a radical or practically significant one. Working out the one millionth term of a recursive function might be computationally too intensive, but in the circumstances of the PDCCH all that would be needed would be to work out and store ten values for each aggregation level. That would not present any difficulty. Ms Dwyer ultimately accepted that there was no practical problem so as to put the skilled person off from using an LCG merely because it was recursive.

130. For what it is worth, the secondary evidence supports that view (two queries about the function being recursive were made but well met by LGE’s explanation that few iterations were needed), but I think it would be apparent anyway.

Simulations

131. I was unclear what dispute remained over this by the end of the trial. I find that it was CGK to use simulations with tools such as C, C++ or MATLAB to test proposals that were made during RAN1 work. The simulations were done at the meetings and outside meetings.

132. It is possible that there was some dispute about whether laptops of the priority date were powerful enough for all such simulations. I find that even if and to the extent they were not, it was CGK to use more powerful computers available as part of the “back room” support to RAN1 delegates.

Modular arithmetic

133. The experts agreed that some aspects of modular arithmetic would be CGK, in particular, as noted above, the “mod operation”. That is no more than the exercise of finding a remainder, so 17 mod 3 = 2, or 1000 mod 111 = 1.

134. However, the experts disagreed about whether the “distributive property” of modular arithmetic would be CGK.

135. The distributive property was explained by Prof Lozano as:

(X+Y) mod C = (X mod C + Y mod C) mod C.

136. This is not complicated. In the left hand side of the equation you add X and Y and take the remainder after dividing by C. In the right hand side of the equation you divide by C and take the remainder for X and Y separately and then add the remainders. But that might be greater than C, so you perform the mod C operation on the total. In each case you are just getting rid of all the multiples of C.
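
The property can be checked numerically. The sketch below is a brute-force check over small non-negative integers, offered as an illustration rather than a proof; the function names are hypothetical.

```python
def left_side(x, y, c):
    """Add first, then take the remainder."""
    return (x + y) % c


def right_side(x, y, c):
    """Take remainders separately, add them, then take the remainder again."""
    return ((x % c) + (y % c)) % c


# Example where the two remainders add up to C, so the final mod C is needed:
# 17 mod 3 = 2 and 10 mod 3 = 1, which sum to 3.
print(left_side(17, 10, 3), right_side(17, 10, 3))  # 0 0

# Brute-force check over a small range of non-negative integers.
print(all(
    left_side(x, y, c) == right_side(x, y, c)
    for c in range(1, 20) for x in range(60) for y in range(60)
))  # True
```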

137. It is artificial to say that the mod operation would be CGK but this would not, and I accept Prof Lozano’s evidence that the distributive property would also be CGK.

138. Ms Dwyer also said that the skilled person could perform modular arithmetic to the level of plugging numbers into equations but could not work with modular relationships expressed in variables. I reject this too, as artificially hobbling the skilled person, and because it is inconsistent with what the Patents envisage they could do, and I again prefer Prof Lozano’s view. I thought there was also a tension between Ms Dwyer’s conception of the skilled person being limited in this way and her positive suggestions about what might be done from Ericsson, such as changing x, which I felt would require a degree of understanding of modular arithmetic in excess of what she envisaged.

139. Optis’ motive in denying that this particular bit of modular arithmetic - the distributive property - was CGK is that it is the tool needed to appreciate that the Ericsson function would not work properly over successive subframes. Optis sought to use the fact that (it says) some of the RAN1 participants did not spot that problem to argue that the skilled person would not have the characteristics as I have just indicated. I reject this. Of course it is not necessarily the case that the skilled person who had those characteristics would use them without hindsight to spot the problem with the Ericsson function, but that is a question in relation to obviousness rather than CGK.

Max hits

140. A metric that is used in the simulations presented in the Patents is maximum number of hits or “max hits”. Optis contended that it was not CGK.

141. I think Optis is right about that. Performing simulations was CGK, and it would be down to the skilled person performing simulations for a given purpose to choose appropriate metrics to assess the degree of success or failure of the function or system under test. The skilled person would not know as a matter of CGK that max hits was a metric to use. That does not mean that it was a good metric, or that they could not work out that they should use it in a given situation, but that is something that comes in at the obviousness stage of the analysis.

The Patent

142. The specification begins with some general teaching about LTE and the PDCCH, at [0004] to [0006]. Thereafter, it identifies Ericsson at [0007]. The specification then goes at length into the meaning and use of the function of claim 1 that I explain below. It involves the use of an LCG and a “mod C” operation.

143. From [0096] onwards, the specification starts to describe the choice of parameters for the LCG. It explains at [0099] that it will use the concept of number of “hits” (which essentially means collisions) as a criterion. At [0103] it explains that it will be looking at average number of hits and maximum number of hits, as well as whether the range 0 to C-1 is uniformly covered by the start positions generated, and the variance of probabilities that values between 0 and C-1 will be generated (another measure of uniformity).

144. Tables 2, 3 and 4 are then presented, giving values for those metrics for various combinations of the parameters A, B, C and D.

145. [0107] and [0108] give some guidance as to the choice of parameter D, which is the modulus. It refers to the size of D and to whether D is prime. At [0109] it recommends choosing D = 65537 when the UEID is a 16-bit number.

146. In written evidence which was essentially unchallenged, Prof Lozano said that [0107] and [0108] were unreliable, or at least very badly written, because (paraphrasing for simplicity) they misunderstand or mis-explain the importance of the size of D on the one hand, and whether it is prime on the other. I accept that evidence. [0107] and [0108] are not very clear or useful. They certainly give less good guidance about the choice of D than would be provided by Knuth (I make this observation since it is of potential relevance to Apple’s insufficiency squeezes).

147. [0112] recommends that B is set to zero. In that preferred situation, the LCG will be a multiplicative LCG (as to which, see below).

148. Prof Lozano also gave evidence that Tables 3 and 4 (in particular the latter) contain errors. A particular problem is that they do not specify C, with the result that they cannot be replicated. Prof Lozano attempted to work out what had been done, and did manage to verify that the max hits column in Table 4 would make sense if C were 16. But that would mean the other columns were wrong. I return to this in relation to the insufficiency allegations.

Claims of the Patent in issue

149. As I have already said, it was agreed the issues can be dealt with by consideration of claims 1 and 4 of the Patent, which are as follows (taken from Apple’s written opening submissions where reference letters for the claim features were added, though nothing turns on them).

150. Claim 1:

1[a] A method for a User Equipment, UE, to receive control information through a Physical Downlink Control Channel, PDCCH, the method comprising:

1[b] receiving control information from a base station through the PDCCH in units of Control Channel Element, CCE, aggregations, each of the CCE aggregations including at least one CCE in a control region of subframe 'i'; and

1[c] decoding the received control information in units of search space at subframe 'i',

1[d] characterized in that the search space at subframe 'i' starts from a position given based on a variable x_i and a modulo 'C' operation, wherein 'C' is a variable given by: C = floor(N_CCE / L_CCE),

and wherein 'x_i' is given by: x_i = (A*x_{i-1} + B) mod D,

wherein A, B and D are predetermined constants, and x_{-1} is initialized as an identifier of the UE, and N_CCE represents the total number of CCEs at subframe 'i', and L_CCE is the number of CCEs included in the CCE aggregation, and floor(x) is a largest integer that is equal to or less than x.

151. Claim 4:

4[a] The method according to claim 1 or 2,

4[b] wherein D, A, and B are 65537, 39827, and 0, respectively.

152. Claim 1 was used as an exemplar of an “unspecified” claim, which refers to the fact that A, B and D are not assigned specific values. Others of the Patents also include unspecified claims, and it was agreed that my decision on claim 1 would determine those claims as well.

153. Claim 4 was used as an exemplar of a “specified” claim, since D, A and B are set. Again, it was agreed that my decision on claim 4 would allow determination of all the specified claims in all of the Patents.

154. As Optis pointed out, claim 1 of the Patent uses the notation x_i, while the text of the specification refers to Y_k. It does not make any substantive difference, but it needs to be borne in mind for one’s understanding. The “i” or “k” denotes the subframe number.

155. The claims do not make it easy to see what is going on, or to capture the inventive concept. Essentially, however, what claim 1 is saying is that for each subframe, the start position of a PDCCH search space is found using an LCG, with the output of the LCG being subjected to a modulo C operation.

156. For the initial “seed” value for the LCG, the UEID is used. For subsequent subframes the LCG works recursively, as explained above.

157. C is the number of possible start positions, and is found by taking the number of CCEs in the subframe and dividing by the aggregation level L (if the number of CCEs is not precisely divisible by L then the “floor” operation takes only the integer part). So if there are e.g. 64 CCEs and the aggregation level is 8, then there are 8 possible starting positions. Taking mod C will give a number from 0 to C-1.
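
The calculation described in paragraphs 155 to 157 can be sketched as follows, using the values of A, B and D given in claim 4. This is an illustration under stated assumptions and says nothing about how the claim is to be construed: the claim says only that the start position is “given based on” the variable and the mod C operation, so the sketch simply reports the LCG output mod C as an index among the C possible start positions. It also assumes that the number of CCEs is the same in every subframe, and the function name is hypothetical.

```python
A, B, D = 39827, 0, 65537   # values recited in claim 4


def start_position_indices(ue_id, n_cce, l_cce, num_subframes):
    """Index (0 .. C-1) of the search space start for each successive subframe."""
    c = n_cce // l_cce          # C = floor(N_CCE / L_CCE)
    x = ue_id                   # the seed is the UE ID
    indices = []
    for _ in range(num_subframes):
        x = (A * x + B) % D     # one step of the LCG per subframe
        indices.append(x % c)   # the LCG output is "nested" in the mod C operation
    return indices


# 64 CCEs at aggregation level 8 gives C = 8 possible start positions.
positions = start_position_indices(ue_id=1, n_cce=64, l_cce=8, num_subframes=10)
print(positions)
```

Only ten values per aggregation level are needed to cover ten subframes, which is consistent with the point made at paragraph 129 that the recursion presents no practical difficulty.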

158. It is incorrect to say, as Apple sometimes did, that claim 1 is just to the use of an LCG to find the start positions of the search space. The LCG is, as Ms Dwyer put it, “nested” with the mod C function. It is important not to lose sight of this, since one of Optis’ obviousness arguments relies on it.

The Prior Art

159. I will now set out the disclosure of Ericsson, and then of Knuth. They do not cross-refer to each other, and so it is only legitimate to read them together if they are shown to be CGK or if routine research from one would lead by obvious steps to the other.

Disclosure of Ericsson

160. Ericsson is a 7-page slide presentation.

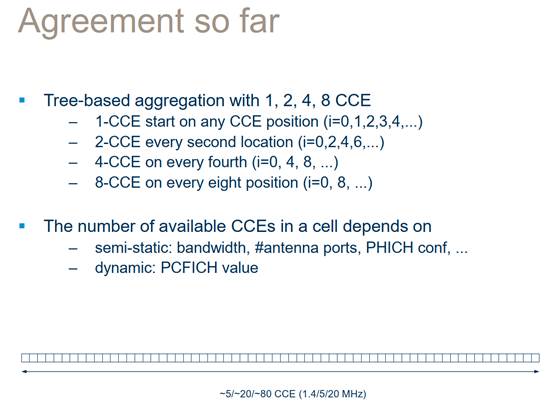

161. Page 1 set out the “Agreement so far” and is fairly self-explanatory once one understands the CGK:

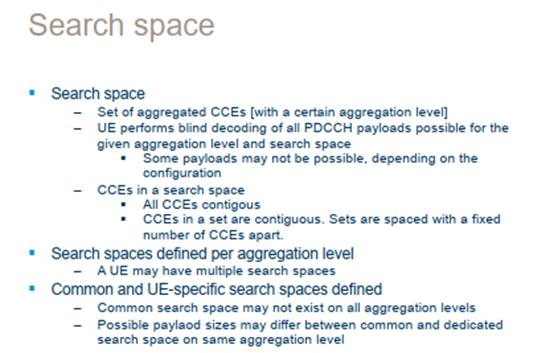

162. Page 2 explains about the search space (it was not yet finally agreed that CCEs in a set would be contiguous but that does not matter to the arguments):

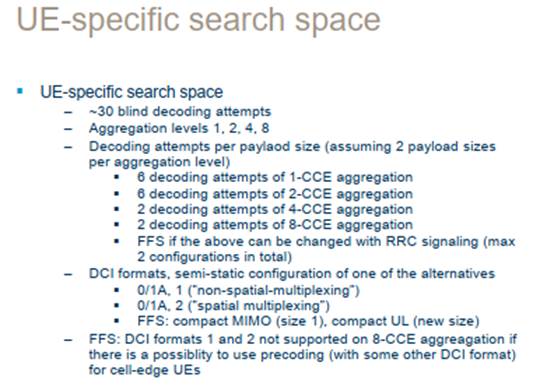

163. Page 5 gives some details of the UE-specific search space, which is the territory of this dispute:

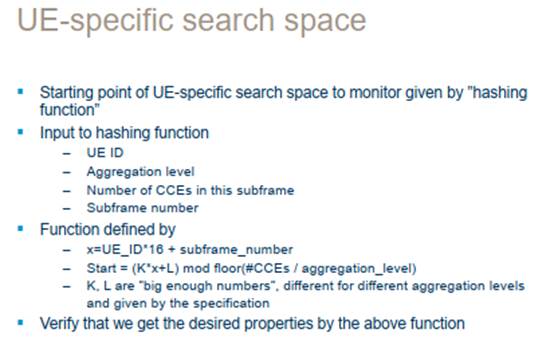

164. Down to this point there is little if any disagreement between the parties. Page 6 is the key page over which the obviousness dispute takes place:

Disclosure of Knuth

165. It is not practical to reproduce all the parts of Knuth relied on. I will summarise its main contents.

166. Apple relies on 40 pages from Chapter 3 of volume 2. Chapter 3 is entitled “Random Numbers”. Optis relies on other pages from that chapter and on the part of volume 3 (“Sorting and Searching”) which concerns hashing functions. In my view it would not be open to Apple to restrict the way in which the skilled person would view Knuth by artificially limiting consideration to selected pages, but I do not think it attempted to do that. It would have been impractical for it to cite the whole book, and no doubt if it had done so Optis would, rightly, have objected. Consideration of the 40 pages cited ought to take place in the context of the rest of the work that the skilled person, guided by CGK, would have considered relevant.

167. After a general introduction there follows section 3.2 “Generating Uniform Random Numbers”. The first method introduced is the class of LCGs, which are described as “[b]y far the most popular random number generators in use today”. After setting out the form of the LCG, Knuth observes that choosing the “magic numbers”, meaning A, B and D, appropriately will be covered later in the chapter. It explains that sequences from LCGs have a period and that “A useful sequence will have a relatively long period.”

168. At the top of page 10, Knuth says that “The special case c = 0 deserves explicit mention”. He uses c to denote the increment, so in terms of the A, B and D letters I am seeking to use, he is referring to B. He identifies that this case is referred to as “multiplicative”, and says that it is quicker but tends to reduce the period.

169. Thereafter, Knuth gives advice on the choice of modulus, which he says at 3.2.1.1 should be “rather large” and notes that its choice affects speed of generation. He deals with choosing a modulus when the word size of the computer in question is w (the word size is 2^e for an e-bit binary processor) and discusses the case where the modulus is set to w+1 or w-1, providing Table 1 which gives the prime factorisations for various values of e. I will return to this below, in particular in relation to the specified claims.
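
As an illustration only of the w+1 and w-1 cases, the following sketch factorises both numbers for a 16-bit word (e = 16), for which w+1 is 65537, the value of D in claim 4. It does not reproduce Knuth's Table 1; the helper name is hypothetical and simple trial division is used for brevity.

```python
def prime_factors(n: int) -> list[int]:
    """Return the prime factors of n in ascending order (trial division)."""
    factors = []
    p = 2
    while p * p <= n:
        while n % p == 0:
            factors.append(p)
            n //= p
        p += 1
    if n > 1:
        factors.append(n)  # whatever remains is prime
    return factors


if __name__ == "__main__":
    e = 16
    w = 2 ** e
    print(w + 1, prime_factors(w + 1))  # a single factor means w+1 is prime
    print(w - 1, prime_factors(w - 1))
```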

170. Choice of multiplier is discussed from 3.2.1.2. Knuth explains that its intention is to show how to choose the multiplier to give maximum period, and that “we would hope that the period contains considerably more numbers than will ever be used in a single application”.

171. Further specific advice is given for particular cases over the following pages. For example, getting a long period with an increment of zero (the multiplicative case) is covered at page 19, and says that with an increment of zero an effectively maximum period can be achieved if the modulus is prime.
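
The point about period in the multiplicative case can be seen with a small sketch. It is an illustration under stated assumptions, not Knuth's own analysis: the function name is hypothetical and the toy modulus 7 is chosen only so that the sequences can be checked by hand.

```python
def lcg_period(a: int, b: int, d: int, seed: int) -> int:
    """Length of the cycle eventually entered by x -> (a*x + b) mod d."""
    first_seen = {}
    x = seed
    step = 0
    while x not in first_seen:
        first_seen[x] = step
        x = (a * x + b) % d
        step += 1
    return step - first_seen[x]


if __name__ == "__main__":
    # Prime modulus 7, increment 0: multiplier 3 visits all 6 non-zero values.
    print(lcg_period(3, 0, 7, seed=1))
    # Same modulus, multiplier 2: the sequence 1, 2, 4 repeats after 3 steps.
    print(lcg_period(2, 0, 7, seed=1))
    # The constants of claim 4; the result is computed, not asserted here.
    print(lcg_period(39827, 0, 65537, seed=1))
```

With an increment of zero the value 0 maps to itself, so the longest possible period for a prime modulus D is D-1, and whether it is reached depends on the multiplier.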

172. Some of the analysis and proofs are unquestionably complex, but I think Ms Dwyer overstated it when she called them “impenetrable”. It may well be that the skilled person would not feel the need to follow through the proofs, though.

173. Section 3.2.2 on page 25 introduces “Other Methods”, i.e. other than LCGs. This contains the caveat that a common fallacy is to think that a small modification to a “good” generator can make it even more random, when in fact it makes it much worse.

174. Section 3.3 from page 38 then introduces statistical tests to test if sequences produced are in fact random.

175. As I have said, Optis relied on other parts of Knuth. I deal below with its reliance on the part of volume 3 concerning hashing functions. The other material added, via Ms Dwyer’s evidence in her reply report (her fourth, her having put in two reports for Trial B), was the remainder of chapter 3.

176. When Ms Dwyer introduced the rest of the chapter she did so for the limited purpose of highlighting section 3.4.1 on generating small random numbers. But the main purpose for which the rest of the chapter was used at trial by Optis was to highlight the summary at section 3.6 from page 170, to which Ms Dwyer had not drawn attention. This gave recommendations for “a simple virtuous generator” and gave advice for the choice of the modulus, multiplier and increment. Optis’ position was that that was what the skilled person would use if they maintained an interest in LCGs. It recommends a modulus of at least 2^30.

Obviousness

177. I will deal with the legal principles first.

Legal principles

178. At one level there was no dispute about the basic principles. As in other recent decisions I was referred to Actavis v. ICOS [2019] UKSC at [52] - [73], with its endorsement at [62] of the statement of Kitchin J as he then was in Generics v. Lundbeck [2007] EWHC 1040 (Pat) at [72].

Existence of alternatives

179. Apple relied on Brugger v. Medicaid [1996] RPC 635 at 661, approved by the Supreme Court in Actavis v. ICOS, to the effect that an obvious route is not made less obvious by the existence of other obvious routes. This principle is of course often relied on by those attacking patents for obviousness, and it is valid as far as it goes, but it must not be overdone. Optis referred to Evalve v. Edwards [2020] EWHC 514 (Pat) at [256] - [258] where Birss J pointed out that what Brugger said is that the existence of alternatives does not itself rule out obviousness, but also that their existence may be one relevant factor, as Actavis v ICOS and Generics v Lundbeck spell out.

Secondary evidence

180. Optis relied to an unusually heavy degree on evidence of what others in the field - RAN1 participants - said or did, either pre-priority or post-priority in reaction to the alleged invention of the Patents.

181. Optis relied on this for two purposes, which I think were not entirely distinct.

182. The first was in connection with identifying the notional skilled person and their abilities and knowledge. This is certainly legitimate - see e.g. Unwired Planet v. Huawei [2017] EWCA Civ 266 at [113]-[114] where Floyd LJ said that it would be unreal for an expert not to seek to understand the 3GPP context. Indeed he said that an expert who did not try to understand the context would be falling short in their duties. Both sides used the historical RAN1 context in this broad sense, although Ms Dwyer gave much more attention to it.

183. The second was to contend that in particular respects RAN1 participants behaved in specific ways, and that it could be inferred that that is how the notional skilled person would behave. Optis contended that only a subset of RAN1 participants spotted problems with Ericsson and that despite coming up with proposed ways forward, none of them apart from LGE thought of the solution of the Patents.

184. This use of secondary evidence requires caution, as the authorities indicate. Laddie J in Pfizer’s Patent [2001] FSR 16 at [63] said the following in relation to secondary evidence when there is more than one route to a desired goal:

“63. Of particular importance in this case, in view of the way that the issue has been developed by the parties, is the difference between the plodding unerring perceptiveness of all things obvious to the notional skilled man and the personal characteristics of real workers in the field. As noted above, the notional skilled man never misses the obvious nor sees the inventive. In this respect he is quite unlike most real people. The difference has a direct impact on the assessment of the evidence put before the court. If a genius in a field misses a particular development over a piece of prior art, it could be because he missed the obvious, as clever people sometimes do, or because it was inventive. Similarly credible evidence from him that he saw or would have seen the development may be attributable to the fact that it is obvious or that it was inventive and he is clever enough to have seen it. So evidence from him does not prove that the development is obvious or not. It may be valuable in that it will help the court to understand the technology and how it could or might lead to the development. Similarly evidence from an uninspiring worker in the field that he did think of a particular development does not prove obviousness either. He may just have had a rare moment of perceptiveness. This difference between the legal creation and the real worker in the field is particularly marked where there is more than one route to a desired goal. The hypothetical worker will see them all. A particular real individual at the time might not. Furthermore, a real worker in the field might, as a result of personal training, experience or taste, favour one route more than another. Furthermore, evidence from people in the art as to what they would or would not have done or thought if a particular piece of prior art had, contrary to the fact, been drawn to their attention at the priority date is, necessarily, more suspect. 
Caution must also be exercised where the evidence is being given by a worker who was not in the relevant field at the priority date but has tried to imagine what his reaction would have been had he been so.”

185. And there are many general statements in the authorities stressing that secondary evidence is, indeed, secondary. E.g. Molnlycke v Procter & Gamble [1994] RPC 49 at 112:

“Secondary evidence of this type has its place and the importance, or weight, to be attached to it will vary from case to case. However, such evidence must be kept firmly in its place. It must not be permitted, by reason of its volume and complexity, to obscure the fact that it is no more than an aid in assessing the primary evidence.”

186. Not infrequently, secondary evidence may be rejected simply because the workers in the field in question were not aware of the cited prior art (or it is unknown if they were aware of it). That does not apply here. The RAN1 workers in question were specifically aware of Ericsson and were working on it. So subject to the other caveats identified above, this is a case where the secondary evidence could be more likely than usual to play a role.

187. Another factor clearly established in the case law in relation to “why was it not done before” is the closeness in time between the prior art and the making of the invention. As Jacob LJ commented in Schlumberger v EMGS [2010] RPC 33 at [77]:

“[Secondary evidence] generally only comes into play when one is considering the question ‘if it was obvious, why was it not done before?’ That question itself can have many answers showing it was nothing to do with the invention, for instance that the prior art said to make the invention obvious was only published shortly before the date of the patent, or that the practical implementation of the patent required other technical developments.”

Prejudice/lion in the path

188. Apple characterised part of Optis’ case as being a “lion in the path” that was actually a “paper tiger”. What it meant was that Optis was relying on a perception that LCGs were flawed to the point of being useless. Apple said that Optis could not rely on such a perception unless the Patents overcame the prejudice by showing that LCGs were in fact valid for the purposes taught.

189. Apple relied on the well-known statement about prejudice by Jacob LJ in Pozzoli v BDMO [2007] EWCA Civ 588 at [28]:

“28. Where, however, the patentee merely patents an old idea thought not to work or to be practical and does not explain how or why, contrary to the prejudice, that it does work or is practical, things are different. Then his patent contributes nothing to human knowledge. The lion remains at least apparent (it may even be real) and the patent cannot be justified.”

190. Optis responded by citing the decision of Mann J in Buhler v. Spomax [2008] EWHC 823 (Ch). Mann J cited the above passage in Pozzoli, and also referred to what Jacob LJ had said when a judge at first instance in Union Carbide v. BP [1998] RPC 1, that invention can lie in “finding out that that which those in the art thought ought not to be done, ought to be done.” Mann J went on to say that those dicta did not require in all cases that a patent must explain why the prejudice is wrong and how it works, in scientific language.