
Mitsubishi Electric Corporation & Anor v Archos SA & Ors [2021] EWHC 1639 (Pat) (16 June 2021)

URL: http://www.bailii.org/ew/cases/EWHC/Patents/2021/1639.html

Cite as: [2021] EWHC 1639 (Pat)


Neutral Citation Number: [2021] EWHC 1639 (Pat)

Case No: HP-2019-000014

IN THE HIGH COURT OF JUSTICE

BUSINESS AND PROPERTY COURTS OF ENGLAND AND WALES

INTELLECTUAL PROPERTY

PATENTS COURT

Rolls Building

Fetter Lane

London, EC4A 1NL

16th June 2021

Before :

THE HONOURABLE MR JUSTICE MELLOR

- - - - - - - - - - - - - - - - - - - - -

Between :

(1) MITSUBISHI ELECTRIC CORPORATION

(2) SISVEL INTERNATIONAL SA

Claimants

- and -

(4) ONEPLUS TECHNOLOGY (SHENZHEN) CO., LTD

(5) OPLUS MOBILETECH UK LIMITED

(6) REFLECTION INVESTMENT B.V.

(7) GUANGDONG OPPO MOBILE TELECOMMUNICATIONS CORP, LTD

(8) OPPO MOBILE UK LTD

(9) XIAOMI COMMUNICATIONS CO LTD

(10) XIAOMI INC

(11) XIAOMI TECHNOLOGY FRANCE SAS

(12) XIAOMI TECHNOLOGY UK LIMITED

Defendants

- - - - - - - - - - - - - - - - - - - - -

- - - - - - - - - - - - - - - - - - - - -

Michael Tappin QC and Michael Conway (instructed by Bird & Bird LLP) for the Claimants

James Abrahams QC and Adam Gamsa (instructed by Taylor Wessing LLP) for the Fourth to Eighth Defendants and (instructed by Kirkland & Ellis International LLP) for the Ninth to Twelfth Defendants

Hearing dates: 19th, 22nd-26th, 29th-30th March 2021

- - - - - - - - - - - - - - - - - - - - -

Approved Judgment

I direct that pursuant to CPR PD 39A para 6.1 no official shorthand note shall be taken of this Judgment and that copies of this version as handed down may be treated as authentic.

COVID-19: This judgment was handed down remotely by circulation to the parties’ representatives by email. It will also be released for publication on BAILII and other websites. The date and time for hand-down is deemed to be 10am on Wednesday 16th June 2021.

.............................

THE HON MR JUSTICE MELLOR

Mr Justice Mellor:

1. This Judgment is structured as follows:

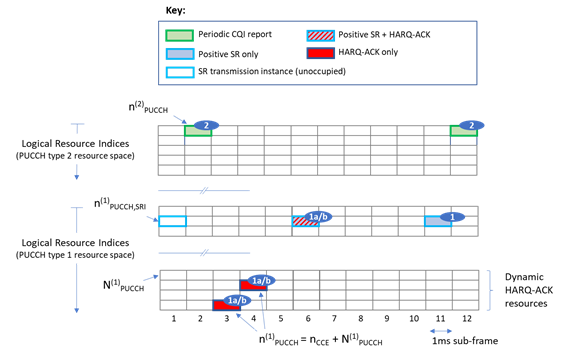

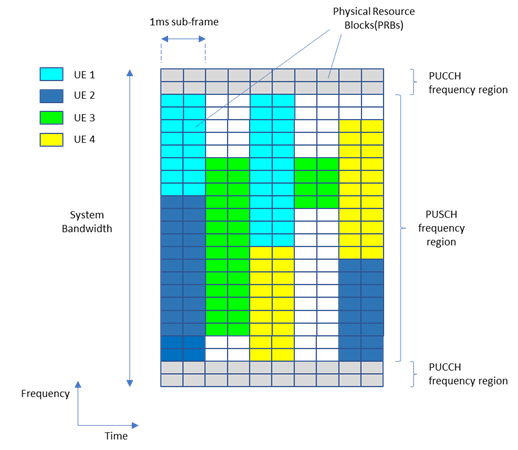

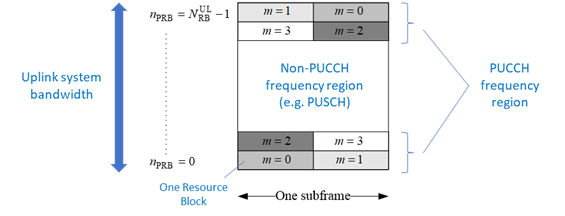

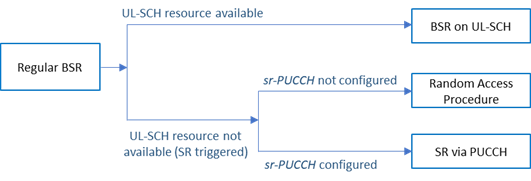

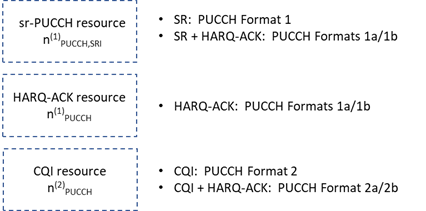

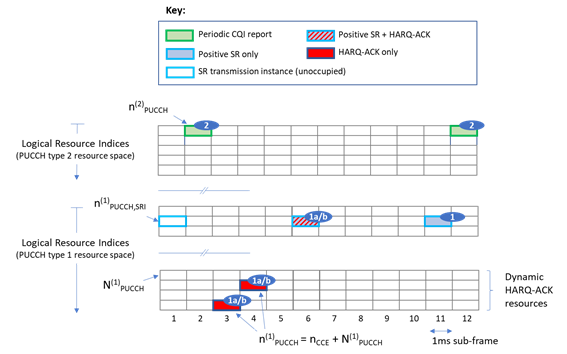

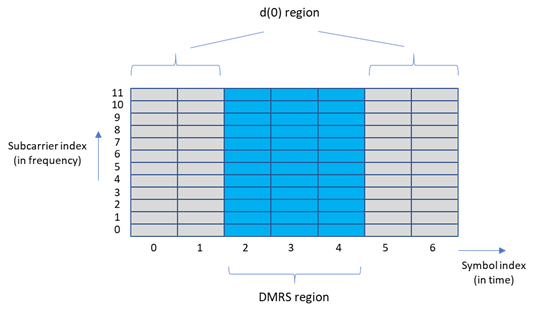

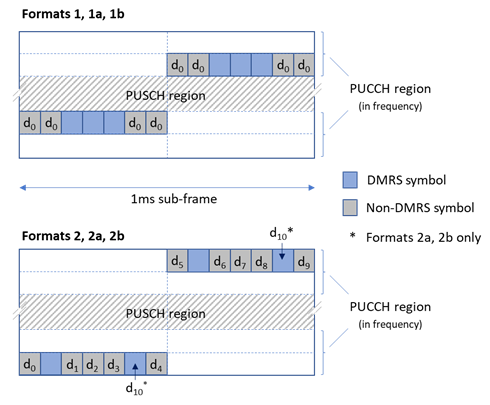

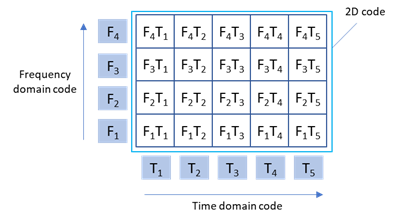

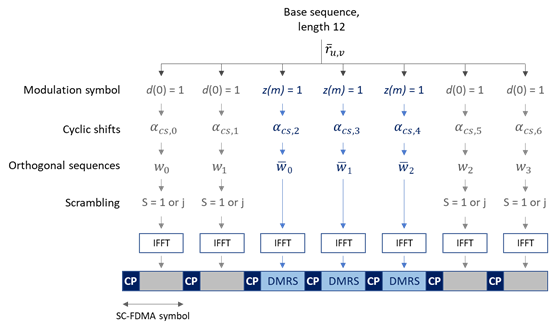

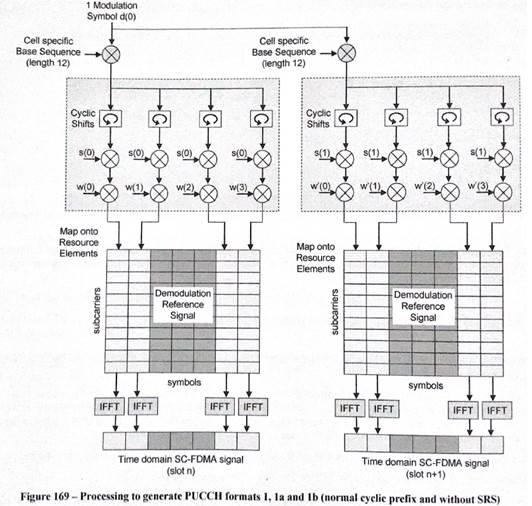

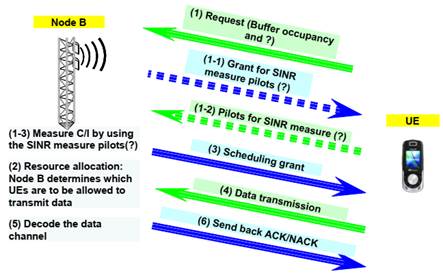

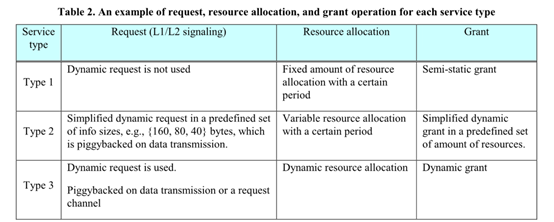

INTRODUCTION (para 2)

The Expert Witnesses (para 7)

The Skilled Person (para 12)

Common General Knowledge (para 16)

THE PATENTS (para 110)

Construction/Scope of the Claims (para 143)

'pilot symbol' (para 147)

Analysis of the Defendants' arguments (para 156)

'information indicating' (para 161)

'transferring information indicating the [not] need' (para 162)

'information based on pilot symbols provided by the base station' (para 167)

ESSENTIALITY (para 171)

Introduction and identification of the issues (para 171)

Relevant Technical Background (para 196)

Part 1 - Basic Concepts in LTE (para 196)

Part 2 - Access to UL-SCH Resources (para 212)

Part 3 - Signalling SR via PUCCH (para 216)

Reference signals in LTE (para 234)

Structure of a PUCCH format 1x signal (para 236)

Code Domain Multiplexing of PUCCH formats (para 244)

Configuration of sr-PUCCH (para 254)

Part 4 - Allocation of UL-SCH resources to UEs (para 260)

Interim conclusion on the SR procedure (para 269)

Part 5 - How PUCCH format 1x signals are constructed and transmitted (para 270)

Part 6 - The role of coherent modulation (para 275)

Part 7 - The passages in TS 36.211 relied upon by the Defendants (para 279)

The issues which remain to be resolved (para 289)

First: the wrong sort of pilot (para 290)

Second: no reference signals in the PUCCH format 1x signals (para 291)

Analysis (para 293)

PUCCH format 1 signal for signalling SR (para 301)

PUCCH format 1a/1b signals for signalling SR (para 303)

Third: the DMRS is sent when no resources are required (para 321)

Fourth: the DMRS is not sent at different powers (para 322)

Fifth: what if the DMRS was sent alone, and no SR was sent? (para 323)

Eighth: DMRS are provided by the base station but the d(0) symbols are not (para 324)

Ninth: non-coherent modulation or 'on-off keying' (para 328)

VALIDITY (para 334)

KWON (para 334)

What Kwon discloses (para 334)

The issues raised on Kwon (para 344)

The step (1) message (para 345)

The step (1-2) pilots (para 346)

The step (1) message sent on a 'dedicated request channel' (para 349)

ADDED MATTER (para 360)

(1) transferring information indicating no need of a wireless resource (para 362)

(2) information 'based on' pilot symbols (para 364)

Conclusion (para 367)

INTRODUCTION

2. This case is about the use of pilot or reference signals which are sent in telecommunications networks for various purposes. The two patents in issue, EP 2,254,259 (‘EP259’ or ‘259’) and EP 1,903,689 (‘EP689’ or ‘689’), claim a new use for pilot signals. 689 is the parent of 259 and they share the same title “Method and device for transferring signals representative of a pilot symbol pattern” and priority date of 22 September 2006. Their descriptions are essentially the same, but their claims differ. It is common ground that I need only consider the essentiality and validity of claim 1 of each patent. Both patents are concerned with the use of pilot signals to request uplink resources in a telecommunications network e.g. a mobile phone network. EP259 claim 1 relates to the use of pilot signals to request uplink resources, whereas claim 1 of EP689 relates to the use of information based on pilot signals to indicate the need (or not) for uplink resources.

3. This technical trial followed immediately after the trial concerning EP(UK) 1,925,142 (‘EP142’), on which I gave judgment on 26th April 2021 as [2021] EWHC 1048 (Pat). The Claimants own or administer the licensing of certain patents, including 259 and 689 (which are owned by the First Claimant), which form part of a group of patents called the MCP Pool. In these proceedings, the Claimants seek to make the Defendants, as manufacturers of 4G handsets, take a licence to the patents in the MCP Pool. A trial to consider the relief claimed by the Claimants, which will include a determination of the FRAND terms for licensing the MCP Pool, is set to be heard in October 2021. The Defendants which remain fall into two groups, the OnePlus Defendants (4-8) and the Xiaomi Defendants (9-12). Fortunately, the Defendants have joined forces for these technical trials. At this trial, the Claimants’ case was argued by Michael Tappin QC and Michael Conway and the Defendants’ case by James Abrahams QC and Adam Gamsa. I am grateful to Counsel and their supporting teams for their assistance. As with the trial of EP142, this trial took place as a fully remote trial on MS Teams, using CaseLines for the electronic bundles. Generally, the technology worked well, although we experienced some slight interference at times when Mr Bishop (the expert called by the Defendants) was giving his evidence.

4. At a high level, the issues for my determination following the trial are:

i) Are the patents essential to the aspect of the LTE (4G) standard concerned with transmission of a scheduling request (SR) to request uplink resources?

ii) Are the patents invalid:

a) because they are anticipated by or obvious over the prior art known as Kwon?

b) in the case of the 689 patent, for added matter?

5. As usual, there is a good amount of technical detail which I need to explain in order to address these issues, and many acronyms to absorb. The technical background falls into two areas. First, there is the CGK as at 22 September 2006. As I directed at the PTR, the parties produced a very useful document in which they reached agreement as to the CGK, save for one relatively small point. Second, there is the technical detail required to understand the arguments on essentiality. In this case, this is far more complex than the subject-matter of the CGK, largely because it concerns the details of signalling in LTE. Although ultimately there was little dispute as to this, in future where the infringement/essentiality/equivalents case involves complex technology, consideration should be given at the PTR stage to a direction that the parties serve a document or documents setting out not only (i) Agreed CGK, with a list of CGK issues in dispute but also (ii) Agreed technical background for the infringement/essentiality/equivalents case, again with a list of technical issues in dispute. In each case the issues in dispute should be cross-referenced to the relevant paragraphs in the experts’ reports. Documents of this type will be of great assistance to any trial judge.

6. The patents were written at a time when 3G technology was mature and the mobile phone industry had started initial steps towards developing new 4G standards.

The Expert Witnesses

7. Mr Nicholas Anderson was the expert witness called by the Claimants, with Mr Craig Bishop called by the Defendants.

8. Mr Anderson was subjected to cross-examination which was, at times, unnecessarily aggressive. At one point, counsel even responded to one of his answers by saying ‘I cannot believe that really is your evidence’. It is important for counsel to understand that what he or she thinks or believes is irrelevant. In their closing argument, the Defendants made some trenchant criticisms of Mr Anderson which culminated in the submission that ‘he saw the witness box as a platform to fight his corner.’

9. I reject all of the Defendants’ criticisms of Mr Anderson. In my view, Mr Anderson was precise and accurate, both in his written evidence and in cross-examination. I point out this is not a criticism at all, bearing in mind the technology involved and the issues in this case. He was careful not to overstate matters. He explained the technology very well indeed.

10. I also point out that ultimately I was unable to detect any serious disagreement between the experts on technical matters. This serves to emphasise the inappropriateness of the Defendants’ attack on Mr Anderson, because the Defendants’ own expert ultimately agreed with Mr Anderson.

11. Mr Bishop’s written evidence was more guarded than that of Mr Anderson. Both his written and oral evidence suffered somewhat from his adoption of the Defendants’ key arguments on essentiality which were founded on taking some statements in the LTE standard too literally and somewhat out of context. Whilst he attempted in cross-examination to stick to the party line, as I relate later (see paragraph 315 below), this ended up with him tying himself in knots. Ultimately, however, he very largely agreed with the points put to him and where he qualified his answers, his qualifications did not impact on the matters I have to decide. No doubt for those reasons the Claimants did not seek to criticise Mr Bishop. Overall, I am grateful to both experts for their assistance.

The Skilled Person

12. For convenience I will refer to the Skilled Person, whilst recognising that in reality this notional person might well have comprised a team of engineers. The experts were largely agreed as to the characteristics of the skilled person. He or she would be a systems engineer or architect working on radio access layers in the wireless communications industry, with an interest in improving the capabilities or efficiency of the radio access network. They would be likely to have a particular interest in methods for improving the allocation of resources in the system. They would require a detailed knowledge of the physical (‘PHY’) and medium access control (‘MAC’) layers and their components.

13. They would have an undergraduate degree or similar qualification in electronic engineering, maths, physics or computer science along with practical experience in the design, simulation or implementation of the MAC and PHY layers of wireless communications products.

14. Where the experts differed was as to the Skilled Person/Team’s knowledge of the detail of discussions at the meetings of the 3GPP RAN Working Groups 1 and 2. RAN WG1 was responsible for the specification of the physical layer of the radio interface for UE, UTRAN and E-UTRAN (see below). RAN WG2 was responsible for the radio interface architecture and protocols (MAC, RLC, PDCP), the specification of the RRC protocol, the strategies of Radio Resource Management and the services provided by the physical layer to the upper layers. Mr Bishop acknowledged that not all members of his team would attend all RAN WG1&2 meetings but maintained they would have an in-depth understanding of their specialist area and would have ready access to and knowledge of 3GPP deliverables as well as the meeting reports/contributions from colleagues who did attend.

15. For his part, Mr Anderson did not expect the skilled person to be familiar with the minutes of meetings of the working groups or of technical documents submitted to such meetings which had not been incorporated into approved specifications. He noted, correctly, that there is nothing in the patents to indicate they are focussed on 3GPP systems. He indicated that those with a practical interest in the patents would not have been limited to those engineers who regularly or even occasionally attended the meetings of the 3GPP RAN WG1&2. Although this mini-dispute did not appear to have any impact on anything I have to decide, I incline to Mr Anderson’s view because I do not consider the ordinary unimaginative skilled person needed to keep up to date with all the discussions being conducted in the relevant groups at a particular standards setting organisation (even one as influential as 3GPP) to carry out his or her job. They would be aware of the effort to develop a long term evolution of UMTS and would be aware that 3GPP was undertaking an exercise to study and evaluate candidate techniques. They could wait to find out what that organisation had adopted in its approved specifications, although, given a specific need to do so, they were well able to find meeting reports and contributions from the relevant working groups.

Common General Knowledge

16. What follows in this section is very largely based on the agreed CGK document, with some edits of my own. Everything in this section formed part of the Common General Knowledge.

Cellular networks

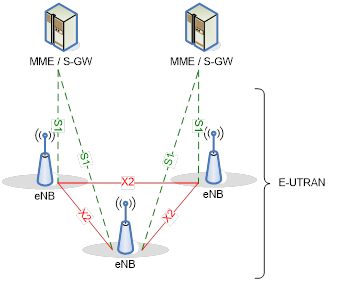

17. Mobile networks are based on cellular networks. In a cellular network, mobile devices (often referred to as User Equipment (“UE”)) communicate with the network via a fixed transceiver (often referred to as a base station (“BS”)) that operates over a particular area called a cell.

18. Cellular networks usually comprise a Core Network (“CN”) component, and a Radio Access Network (“RAN”) component. The CN provides network and connection management functions in addition to onward connectivity to other networks, such as the internet. The RAN comprises a number of base stations which provide connectivity between UEs and the Core Network.

19. Wireless communication may be circuit switched (“CS”) or packet switched (“PS”). This case only concerns PS. In PS systems, data is sent in units referred to as ‘packets’, which contain two parts: (i) a header, which primarily identifies the addresses of the sending entity and of the intended recipient entity; and (ii) the payload. The packets are then sent on a ‘hop-by-hop’ basis from one entity to the next based on this header information until they reach their destination.

20. Within a cell, UEs and the network communicate on radio “resources”, which may be defined in a number of different ways, including in one or more of time, frequency and code.

Layers in wireless architecture

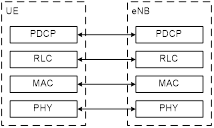

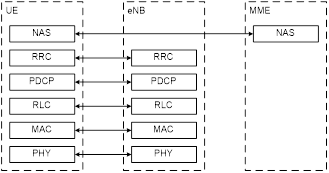

21. The Open Systems Interconnection (“OSI”) model, developed by the International Organisation for Standardisation (“ISO”), is a common way of describing different conceptual parts of communication networks. It describes seven layers that are typically present in a communications network in some form, of which only layers 1 - 3 have any bearing on this case.

22. A group of layers that cooperate to achieve an overall communication of information is referred to as a protocol “stack”. When transmitting information, each layer receives data packets from the layer above, processes them (which may involve for example adding new or different header information), and then passes them to the layer below. Data packets received from a higher layer are referred to as ‘service data units’ (“SDUs”) whilst data packets passed to the lower layer, following processing, are referred to as ‘protocol data units’ (“PDUs”).
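By way of illustration only, the passage of data down a protocol stack can be sketched in Python. The layer names are used purely as labels, and the byte-string headers are hypothetical placeholders (real RLC and MAC headers are bit-level structures defined in the relevant specifications). The sketch shows only the transmit direction, in which each layer’s PDU becomes the SDU of the layer below.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    header: bytes  # hypothetical header added by this layer

    def process(self, sdu: bytes) -> bytes:
        """Turn an SDU received from the layer above into a PDU for the layer below."""
        return self.header + sdu


def transmit(payload: bytes, stack: list) -> bytes:
    """Pass data down the stack, which is listed from the top layer to the bottom layer."""
    data = payload
    for layer in stack:
        data = layer.process(data)  # this layer's PDU is the next layer's SDU
    return data


stack = [Layer("RLC", b"[RLC]"), Layer("MAC", b"[MAC]")]
print(transmit(b"payload", stack))  # b'[MAC][RLC]payload'
```

The outermost header belongs to the lowest layer, which is why a receiver strips headers in the reverse order.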

23. Layer 1 is the Physical Layer (“PHY”). It provides the means for physically transferring bits over the air interface. It is responsible for encoding and modulating data in readiness for its transmission over the air interface (see below).

24. Layer 2 is referred to as the Data Link layer. It ensures there is a reliable flow of information between the UE and the Core Network and may comprise several components, including the Medium Access Control (“MAC”) and Radio Link Control (“RLC”). Functions of the RLC may include buffering of data awaiting transmission for separate data flows referred to as ‘logical channels’. The MAC’s functions may include multiplexing logical channels provided by the RLC onto transport channels at the PHY. In doing so, the MAC layer may also be responsible for managing the relative prioritization of the logical channels as part of the multiplexing process. The MAC may also be responsible for scheduling. It may also provide fast retransmissions via a process known as Hybrid Automatic Repeat Request (“HARQ”) and may assign or determine a suitable transport format to use for an upcoming transmission.

25. Layer 3 is the network layer. In addition to routing data in the Radio Access Network, it manages the setting up, modification and release of radio connections between the UE and the Core Network and configures the protocols to be used at MAC and RLC level in respect of different services.

Modulation

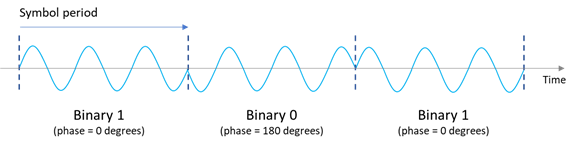

26. In a wireless communication system, information in a data signal cannot easily be transmitted and received in its native form. Therefore, it is converted into a waveform that is, in turn, used to multiply (or ‘modulate’) a ‘carrier’ wave (at the desired carrier frequency). This has the effect of modifying the amplitude, frequency and/or phase of the carrier wave depending on the type of modulation that is used. These modifications are detected at the receiver in order to recover the information of the data signal.

27. A variety of modulation approaches are possible. A popular approach is phase modulation.

28. In phase modulation, the phase (i.e. the point within a 360 degree sinusoidal cycle) of the carrier wave is modified by the information that is to be carried. The modification applies within a time duration called a ‘symbol period’. In a simple example, the phase modification may be 0 degrees throughout the symbol period to represent a binary “1”, and may be 180 degrees throughout the symbol period to represent a binary “0”. The waveform that modifies (or modulates) the carrier wave is then a square wave assuming values of +1 throughout a symbol period (to effect a 0 degree, or no, modification) and -1 (to effect a 180 degree modification). An example of phase modulation in which a series of 3 bits (represented by the sequence “1, 0, 1”) are to be conveyed is shown in Figure 1 below.

Figure 1 - Example of phase modulation of a carrier wave

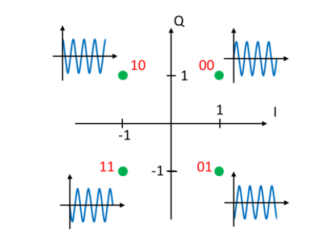
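The example of Figure 1 can be reproduced with a short Python sketch. Following paragraph 28, a binary “1” multiplies the carrier by +1 (0 degrees) and a binary “0” by -1 (180 degrees). The number of samples and carrier cycles per symbol period are arbitrary assumptions, and the receiver side assumes an ideal channel in which the carrier phase is known exactly, with no noise or phase shift.

```python
import math


def phase_modulate(bits, samples_per_symbol=8, cycles_per_symbol=1):
    """Multiply a cosine carrier by +1 for a "1" (0 degrees) or -1 for a "0" (180 degrees)."""
    waveform = []
    for i, bit in enumerate(bits):
        level = 1.0 if bit == 1 else -1.0  # value of the square-wave modulating signal
        for n in range(samples_per_symbol):
            t = i + n / samples_per_symbol  # time in units of symbol periods
            waveform.append(level * math.cos(2 * math.pi * cycles_per_symbol * t))
    return waveform


def phase_demodulate(waveform, samples_per_symbol=8, cycles_per_symbol=1):
    """Correlate each symbol period with the carrier: a positive result means 0 degrees, i.e. "1"."""
    bits = []
    for i in range(len(waveform) // samples_per_symbol):
        corr = 0.0
        for n in range(samples_per_symbol):
            t = i + n / samples_per_symbol
            corr += waveform[i * samples_per_symbol + n] * math.cos(2 * math.pi * cycles_per_symbol * t)
        bits.append(1 if corr > 0 else 0)
    return bits


wave = phase_modulate([1, 0, 1])
print(phase_demodulate(wave))  # [1, 0, 1]
```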

29. Phase modulation forms the basis of commonly used modulation schemes such as Binary Phase Shift Keying (“BPSK”) and Quadrature Phase Shift Keying (“QPSK”). In BPSK one bit is encoded per symbol by modulating the carrier wave through 180 degrees. In QPSK, two bits are encoded per symbol by modulating the carrier wave through multiples of 90 degrees, as shown below.

Figure 2 - Example of BPSK

Figure 3 - Example of QPSK
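A sketch of QPSK mapping in the same spirit, assuming a Gray-coded assignment of bit pairs to the four phases 0, 90, 180 and 270 degrees, so that adjacent phases differ in only one bit. The particular assignment is a hypothetical choice for illustration; specifications define their own. The receiver picks the nearest of the four phases, again assuming an ideal channel.

```python
import cmath
import math

# Hypothetical Gray-coded mapping: phases 90 degrees apart, adjacent phases differ in one bit.
QPSK_PHASE_DEG = {(0, 0): 0, (0, 1): 90, (1, 1): 180, (1, 0): 270}


def qpsk_modulate(bits):
    """Map each pair of bits to a unit-amplitude symbol with one of four phases."""
    if len(bits) % 2:
        raise ValueError("QPSK carries two bits per symbol; supply an even number of bits")
    return [cmath.exp(1j * math.radians(QPSK_PHASE_DEG[(bits[i], bits[i + 1])]))
            for i in range(0, len(bits), 2)]


def qpsk_demodulate(symbols):
    """Pick the nearest of the four phases for each received symbol."""
    by_phase = {deg: pair for pair, deg in QPSK_PHASE_DEG.items()}
    bits = []
    for s in symbols:
        deg = math.degrees(cmath.phase(s)) % 360
        nearest = (round(deg / 90) % 4) * 90
        bits.extend(by_phase[nearest])
    return bits


bits = [0, 0, 0, 1, 1, 1, 1, 0]
symbols = qpsk_modulate(bits)      # 4 symbols carry 8 bits
print(qpsk_demodulate(symbols))    # [0, 0, 0, 1, 1, 1, 1, 0]
```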

30. The transmitted waveforms are usually contained within a particular range of frequencies. The width of the frequency range is known as the ‘bandwidth’ of the signal, and the centre of the range is known as the ‘carrier frequency’. The use of different carrier frequencies for different signals allows these to be communicated at the same time without causing substantial interference to one another.

Environmental effects, multipath propagation/fading

31. Radio propagation in a land mobile channel is characterised by reflections, diffractions and attenuation of energy caused by obstacles in the environment such as hills or buildings. This results in the signal travelling from transmitter to receiver by different paths which may have different lengths and/or may affect the strength of the signal. This is referred to as multipath propagation.

32. As a result of multipath propagation, the receiver may receive multiple copies or ‘echoes’ of a signal which may have a different delay or amplitude. These copies may interfere with one another. Constructive interference is when the peaks of two signals coincide, amplifying the signal at that point. Destructive interference is when the trough of one signal coincides with the peak of another, cancelling it out. Over time this interference can cause large variations in amplitude known as ‘fading’.
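A minimal sketch of the interference described above, assuming a single unmodulated carrier and just two paths: a direct path and one echo. The echo’s extra path length rotates its phase by 360 degrees per wavelength, so the received amplitude depends on that extra length. The 0.12 m wavelength corresponds approximately to a 2.5 GHz carrier. Real channels have many paths and also cause ISI, which this sketch ignores.

```python
import cmath
import math


def received_amplitude(direct_amp, echo_amp, extra_path_m, wavelength_m):
    """Amplitude of a direct carrier plus one echo that travelled extra_path_m further."""
    phase = 2 * math.pi * extra_path_m / wavelength_m  # phase lag of the echo in radians
    return abs(direct_amp + echo_amp * cmath.exp(-1j * phase))


WAVELENGTH = 0.12  # metres, roughly a 2.5 GHz carrier

print(received_amplitude(1.0, 1.0, 0.12, WAVELENGTH))  # about 2: peaks coincide (constructive)
print(received_amplitude(1.0, 1.0, 0.06, WAVELENGTH))  # about 0: peak meets trough (destructive)
```

As the mobile or surrounding objects move, the extra path length changes continuously, which is what produces the amplitude variations described as fading.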

33. In some cases, a signal travelling by a longer path is delayed such that the receiver starts to receive one symbol from a signal on a shorter path while still receiving the previous symbol on the longer path. The two symbols therefore overlap at the receiver, causing a problem known as inter-symbol interference (“ISI”).

34. There is also the possibility of phase shift, for example when a transmitting mobile is moving. If the mobile moves the distance of half a wavelength of the carrier signal, then the phase of that signal changes by 180°. Given an operating frequency of 2.5GHz, half a wavelength is only 6cm. This phase change needs to be taken into account during demodulation if phase is used to encode information in the transmission.

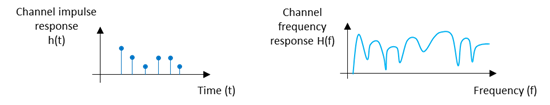

35. The overall effect of the environment on a signal is often referred to as a ‘propagation channel’. The propagation channel may be characterised by its ‘impulse response’. This is often denoted as a function h(t) of time ‘t’. The effect that such a time domain impulse response might have on individual frequencies ‘f’ is referred to as the channel frequency response H(f). H(f) is entirely defined by the corresponding time domain impulse response h(t), and therefore these are just two different ways to represent the same thing, i.e. the response of the propagation channel which characterises the relationship between the transmitted signal and the received signal. This channel response is determined simply by the set of transmission paths that exist within the environment between the transmitter and receiver. An example of the impulse response of a propagation channel as a function of time and frequency is shown below.

Figure 4 - Impulse response of a propagation channel
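To illustrate the relationship between h(t) and H(f), the following sketch assumes an impulse response made up of a small number of discrete paths, each with a complex gain and a delay. For such a channel the Fourier transform reduces to a sum of phase-rotated gains, and evaluating it at different frequencies shows how a single set of paths produces a response that varies across the band. The two-path channel is hypothetical.

```python
import cmath
import math


def frequency_response(paths, freq_hz):
    """H(f) for h(t) = sum of g_k * delta(t - tau_k), i.e. discrete paths with gain g_k and delay tau_k.

    The Fourier transform of each delayed impulse is exp(-j*2*pi*f*tau_k), so
    H(f) = sum of g_k * exp(-j*2*pi*f*tau_k).
    """
    return sum(g * cmath.exp(-2j * math.pi * freq_hz * tau) for g, tau in paths)


# Hypothetical channel: a direct path and an echo delayed by 1 microsecond at half the amplitude.
channel = [(1.0, 0.0), (0.5, 1e-6)]

for f in (0.0, 250e3, 500e3):
    print(f"{f / 1e3:6.0f} kHz  |H(f)| = {abs(frequency_response(channel, f)):.3f}")
```

For this channel the magnitude falls from 1.5 at 0 Hz to 0.5 at 500 kHz, and the pattern repeats every 1 MHz (the reciprocal of the 1 microsecond delay), so some frequencies are faded while others are not.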

36. The impulse response of the propagation channel is determined by the position of the transmitter and receiver within the environment and may also change as other objects within the environment move. At carrier frequencies that are typical of many practical wireless systems (including for mobile phones), the impulse response may change appreciably within a few milliseconds.

37. The received signal may also be affected by other (potentially interfering) signals originating from other transmitters, in addition to so-called thermal noise.

38. One measure of a received signal’s quality is known as the Signal to Noise-plus-Interference Ratio (“SNIR”, also designated as SINR on occasion), which is a ratio of the power of the wanted signal to the power of interfering signals and noise.

39. Unless compensated for, the effects of the propagation channel (along with any interference and noise present in the received signal), degrade the ability of the receiver to correctly determine the information within the transmitted signal.

40. In wireless systems, these effects may be mitigated by employing certain techniques at the transmitter, at the receiver, or (more commonly) a combination of both.

Processes at the transmitter

41. The function of the transmitter is to convert data for transmission into signals or waveforms that are then radiated by the antenna. Typical processes carried out on the data at the transmitter include Forward Error Correction (“FEC”); modulation; and up-conversion and amplification.

42. Forward Error Correction involves encoding the message to expand the number of bits that are transmitted, such that an original data message of length ‘n’ bits is represented by a longer series of ‘n+k’ bits. This enhances robustness as even if the receiver does not correctly receive all the bits transmitted, it is able to use information obtained from the other bits to decode the original message. The ratio of ‘n’ data bits to ‘n+k’ code bits is called the ‘code rate’.
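The expansion from n to n+k bits, and the code rate, can be illustrated with the simplest possible code: a repetition code in which each data bit is sent three times and the receiver takes a majority vote. This is a teaching example chosen for brevity; practical data channels typically use considerably stronger codes.

```python
def encode_repetition(bits, r=3):
    """Rate-1/r repetition code: each data bit is transmitted r times."""
    return [b for b in bits for _ in range(r)]


def decode_repetition(code_bits, r=3):
    """Majority vote over each group of r received bits."""
    return [1 if sum(code_bits[i:i + r]) > r // 2 else 0
            for i in range(0, len(code_bits), r)]


data = [1, 0, 1, 1]                 # n = 4 data bits
coded = encode_repetition(data)     # n + k = 12 code bits, so k = 8
code_rate = len(data) / len(coded)  # 4 / 12 = 1/3

received = coded.copy()
received[1] ^= 1                    # a bit error in the first group
received[7] ^= 1                    # a bit error in the third group
print(code_rate, decode_repetition(received) == data)
```

Each group survives one error because the other two copies outvote it; that robustness is bought at the cost of sending three times as many bits.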

43. Modulation is described above. A variety of modulation schemes may be used which result in a different number of bits (‘m’) being encoded per modulation symbol. BPSK and QPSK (see above) are two examples where m=1 and m=2 respectively. Another is 16 Quadrature Amplitude Modulation (“16 QAM”), in which both phase and amplitude are modulated to encode a higher number of bits (m=4).

44. Up-conversion and amplification refers to the process following modulation, where the waveform is ‘upconverted’ (or translated in frequency) such that it occupies the desired carrier frequency and is amplified to the required power level prior to transmission via the antenna.

45. In general, modulation schemes with a lower value of m give greater robustness against adverse SNIR, while higher values of m result in higher data rates. Lower FEC code rates also give more robustness and tend to be used where the SNIR is low. Systems may use Adaptive Modulation and Coding (“AMC”), which involves adjusting the modulation scheme and FEC code rate according to the current SNIR. The transmitted power of the signal may also be adjusted to attempt to maintain a constant SNIR at the receiver.
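The trade-off described above can be sketched as a lookup: the scheduler picks the highest-rate combination of modulation scheme and code rate whose SNIR threshold is met, and the number of data bits carried per modulation symbol is then m multiplied by the code rate. The thresholds and table entries below are hypothetical and do not come from any standard.

```python
# Hypothetical table, for illustration only: real thresholds depend on the system and its targets.
# Each entry: (minimum SNIR in dB, modulation scheme, bits per symbol m, FEC code rate)
MCS_TABLE = [
    (-5.0, "BPSK", 1, 1 / 3),
    (2.0, "QPSK", 2, 1 / 2),
    (8.0, "QPSK", 2, 3 / 4),
    (14.0, "16 QAM", 4, 3 / 4),
]


def select_mcs(snir_db):
    """Return the highest-rate entry whose SNIR threshold is met (table sorted by threshold)."""
    usable = [entry for entry in MCS_TABLE if snir_db >= entry[0]]
    if not usable:
        raise ValueError("SNIR below the lowest threshold; no scheme in the table is usable")
    return usable[-1]


def info_bits_per_symbol(entry):
    """Data bits carried per modulation symbol = m * code rate."""
    _, _, m, code_rate = entry
    return m * code_rate


for snir in (0.0, 10.0, 20.0):
    entry = select_mcs(snir)
    print(snir, entry[1], round(info_bits_per_symbol(entry), 2))
```

Poor conditions fall back to BPSK at a low code rate (robust but slow), while good conditions allow 16 QAM at a higher code rate (fast but fragile).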

Processes at the receiver

46. Typical processing functions carried out at the receiver include: down-conversion and filtering; channel estimation and equalisation; demodulation; and FEC decoding.

47. Down-conversion is the reverse process of up-conversion, referred to above. Filtering may be performed to remove (or attenuate) signals with frequencies outside the carrier frequency range, which could otherwise interfere with the desired signal.

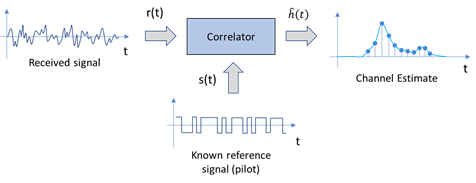

48. Equalisation is a process by which the receiver may reverse or ‘undo’ the effects of the propagation channel on the transmitted signal, such as ISI, and restore the correct phase and amplitude for each received symbol. To do so, the receiver must obtain an estimate of the propagation channel impulse response (h(t)) or its frequency domain equivalent (H(f)). This is called channel estimation. A common method of performing channel estimation is to correlate the received signal with a known reference signal (see below).

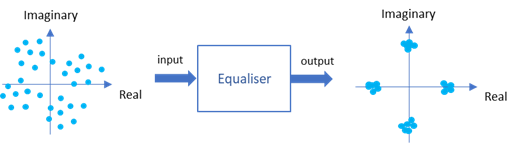

49. Once the correct symbols have been obtained following equalisation, demodulation refers to the process of determining the underlying sequence of bits that are carried by each modulation symbol.

50. FEC decoding is the process of decoding the receiver’s estimate of the FEC-encoded bit sequence to determine the underlying data.

Reference/pilot signals and their uses

Channel estimation/coherent demodulation

52. As explained above, a known reference signal may be used to perform channel estimation. Because the properties of the reference signal are known to the receiver, it has knowledge of the form of the signal as sent and as received, which can be used to determine the channel conditions.

Figure 5 - pilot assisted channel estimation
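A minimal sketch of pilot-assisted channel estimation, assuming the simplest case of a ‘flat’ channel which multiplies every symbol by a single complex gain h (an amplitude change and a phase rotation) and which does not change over the pilots. Correlating the received pilots with the known pilots, and normalising by the pilot energy, gives a least-squares estimate of h. The pilot sequence and channel values are hypothetical.

```python
import cmath


def estimate_channel(received_pilots, known_pilots):
    """Least-squares estimate of a single complex channel gain h, assuming y = h*p + noise.

    Correlate the received pilots with the conjugate of the known pilots and
    normalise by the pilot energy.
    """
    correlation = sum(y * p.conjugate() for y, p in zip(received_pilots, known_pilots))
    energy = sum(abs(p) ** 2 for p in known_pilots)
    return correlation / energy


# Hypothetical values: pilots known to the receiver, and a channel that halves the amplitude
# and rotates the phase by 60 degrees (noise omitted for clarity).
pilots = [1 + 0j, -1 + 0j, 1 + 0j, 1 + 0j]
h_true = 0.5 * cmath.exp(1j * cmath.pi / 3)
received = [h_true * p for p in pilots]

h_hat = estimate_channel(received, pilots)
print(abs(h_hat), cmath.phase(h_hat))  # about 0.5 and pi/3 (60 degrees)
```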

54. Once derived, the channel estimate may then be used by the equaliser, which attempts to restore the modulation symbols so they are no longer distorted. Figure 6 illustrates this basic principle for an example in which QPSK modulation is used.

Figure 6 - Restoration of modulation symbols by an equaliser

55. The use of a reference signal by a receiver to compensate for the phase shift introduced to the data symbols in this way is also referred to as “coherent demodulation”.
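The following sketch shows why this phase compensation matters for QPSK. It assumes a flat channel (one complex gain h applied to every symbol) and that an estimate of h has already been obtained from the reference signal; for simplicity the true channel value is used as the estimate. Without equalisation, a 120 degree rotation moves a symbol into the wrong quadrant; dividing by the estimate restores it. The QPSK mapping shown (each bit sets the sign of one axis) is one common choice, and the channel value is hypothetical.

```python
import cmath
import math


def qpsk_map(b0, b1):
    """One common QPSK mapping: bit b0 sets the sign of the real axis, b1 the imaginary axis."""
    return complex(1 - 2 * b0, 1 - 2 * b1) / math.sqrt(2)


def qpsk_decide(symbol):
    """Hard decision: read each bit back from the sign of one axis."""
    return (1 if symbol.real < 0 else 0, 1 if symbol.imag < 0 else 0)


def equalise(received, h_hat):
    """Single-tap equaliser: divide out the estimated gain and phase rotation."""
    return [y / h_hat for y in received]


# Hypothetical channel: amplitude 0.8 and a 120 degree phase rotation, no noise.
h = 0.8 * cmath.exp(1j * math.radians(120))
sent = [(0, 0), (0, 1), (1, 0), (1, 1)]
received = [h * qpsk_map(*bits) for bits in sent]

without_eq = [qpsk_decide(y) for y in received]            # phase shift ignored
with_eq = [qpsk_decide(s) for s in equalise(received, h)]  # coherent demodulation
print(without_eq == sent, with_eq == sent)  # False True
```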

Channel quality determination

56. Reference signals may be used to determine the overall channel quality, for example by measuring the received power of the reference signal, or calculating the SNIR of the channel.

57. This may be done by post-processing the output of the channel estimator to obtain the overall energy of the signal.
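These two paragraphs can be illustrated with a sketch that, assuming a flat channel and known reference symbols, estimates the channel gain, takes the signal power from that estimate, and treats whatever remains after subtracting the estimated signal as noise plus interference. The test signal (1,000 BPSK-valued reference symbols with a true SNIR of 20 dB) is synthetic, and practical receivers use more refined estimators.

```python
import math
import random


def estimate_snir_db(received, pilots):
    """Estimate SNIR (dB) from known reference symbols, assuming a flat channel y = h*p + noise.

    Signal power comes from the channel estimate; noise-plus-interference power
    comes from what is left after subtracting the estimated signal.
    """
    energy = sum(abs(p) ** 2 for p in pilots)
    h_hat = sum(y * p.conjugate() for y, p in zip(received, pilots)) / energy
    n = len(pilots)
    signal_power = abs(h_hat) ** 2 * energy / n
    residual_power = sum(abs(y - h_hat * p) ** 2 for y, p in zip(received, pilots)) / (n - 1)
    return 10 * math.log10(signal_power / residual_power)


# Synthetic test signal: unit channel gain and complex noise of total power 0.01 (true SNIR 20 dB).
rng = random.Random(1)
pilots = [complex(rng.choice((-1, 1)), 0) for _ in range(1000)]
sigma = math.sqrt(0.01 / 2)  # standard deviation of each real/imaginary noise component
received = [p + complex(rng.gauss(0, sigma), rng.gauss(0, sigma)) for p in pilots]
print(round(estimate_snir_db(received, pilots), 1))  # close to 20
```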

58. Channel quality estimation may be used as an input to various features, such as AMC, power control, and channel-dependent scheduling.

Use in AMC

59. As noted above, AMC refers to adapting the modulation and coding scheme used according to the existing channel conditions. In systems that support AMC, the network scheduler may use knowledge of radio channel conditions (such as the achievable SNIR) in order to determine an appropriate modulation and coding scheme. This may be done as part of dynamic scheduling (see below).

Use in channel dependent scheduling

60. In systems that use dynamic scheduling (see below), the measured channel quality may be used as an input to the scheduler when allocating resources between users. For example, the system may allocate resources according to the point in time or particular frequency at which a given UE is experiencing advantageous channel conditions (either as compared with other UEs or compared with the average conditions for the UE in question).

61. However, channel dependent scheduling is not always used by networks. There were various approaches to uplink resource allocation which did not involve channel dependent scheduling (for example, link adaptation based on AMC, or non-adaptive / fixed-rate transmission (with or without power control)).

62. When channel dependent scheduling is used, the base station does not necessarily schedule mobiles with the best uplink channel quality, because such a scheme could result in UEs experiencing poorer channel quality being prevented from sending data for prolonged periods. Commonly, channel dependent scheduling involved allocating resources to UEs which are (at an instant in time) experiencing a channel quality that is higher than the average for that UE. This is sometimes referred to as multi-user diversity.
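A minimal sketch of the multi-user diversity idea: rather than always choosing the UE with the best absolute channel quality, the scheduler chooses the UE whose current quality is highest relative to its own running average. The quality figures, UE names and smoothing factor are hypothetical. The rule is similar in spirit to what is often called proportional fair scheduling, which compares each UE’s achievable rate with its average throughput rather than its channel quality with its average quality.

```python
def pick_ue(current, average):
    """Pick the UE whose current channel quality is highest relative to its own average.

    `current` and `average` map a UE identifier to a linear (not dB) quality measure such as SNIR.
    """
    return max(current, key=lambda ue: current[ue] / average[ue])


def update_average(average, current, alpha=0.1):
    """Exponentially weighted running average; alpha is an assumed smoothing factor."""
    return {ue: (1 - alpha) * average[ue] + alpha * current[ue] for ue in average}


# Hypothetical snapshot: UE "A" is near the base station, UE "B" is at the cell edge.
average = {"A": 100.0, "B": 2.0}
current = {"A": 90.0, "B": 4.0}

print(max(current, key=current.get))  # A: best absolute quality
print(pick_ue(current, average))      # B: well above its own average
average = update_average(average, current)
```

A scheduler that always picked the best absolute quality would starve the cell-edge UE; the relative rule serves it at moments when its own channel is unusually good.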

Use in power control

63. The system may use measured channel quality as an input in power control. For example, if a desired SNIR or Signal to Interference Ratio (“SIR”) is established at a base station for received uplink transmissions from mobiles, the SIR of known reference symbols can be measured and compared with the target SIR. This can then be used by the base station to adjust the transmit powers of mobiles.
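A minimal sketch of such a closed loop, assuming the base station sends a one-bit ‘up’ or ‘down’ command after each SIR measurement and the UE changes its power by a fixed step. The 1 dB step, the power limits and the simple link model (measured SIR equals transmit power minus a fixed 5 dB offset) are all assumptions for illustration.

```python
def tpc_command(measured_sir_db, target_sir_db):
    """Base station: ask for more power if the measured SIR is below target, otherwise less."""
    return 1 if measured_sir_db < target_sir_db else -1


def apply_command(tx_power_dbm, command, step_db=1.0, min_dbm=-50.0, max_dbm=23.0):
    """UE: move transmit power one step in the commanded direction, within assumed limits."""
    return min(max_dbm, max(min_dbm, tx_power_dbm + command * step_db))


LINK_OFFSET_DB = 5.0  # hypothetical link model: measured SIR = transmit power - 5 dB
TARGET_SIR_DB = 6.0

tx_power = 0.0
for _ in range(30):
    sir = tx_power - LINK_OFFSET_DB
    tx_power = apply_command(tx_power, tpc_command(sir, TARGET_SIR_DB))

print(tx_power)  # 10.0: the power toggles between 10 and 11 dBm once the target is reached
```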

Cell search and initial acquisition

Multiplexing of reference signals

66. An example of this, with which the skilled person would have been familiar, was the Scheduling Information message in UMTS/HSPA (see paragraph 100 below). That message would be sent on uplink within a MAC-e PDU (either alone or multiplexed with other data) on the enhanced dedicated channel (E-DCH). The E-DCH transmission was also code multiplexed with a reference signal on the uplink Dedicated Physical Control CHannel (‘DPCCH’) to allow coherent demodulation of the Scheduling Information data sent on the E-DCH, which contained information on buffer occupancy and power headroom.

67. As is apparent from the preceding paragraphs, the experts agreed that reference signals were used for various purposes. A reference signal could not necessarily be used for all of those purposes. However, the skilled person was also aware that a given reference signal could be used for different purposes in different contexts. For example, a given reference signal could be used to support coherent demodulation in one context and for channel quality determination in another context.

68. Mr Bishop provided two examples of this, with which Mr Anderson agreed (subject to certain clarifications on the details which do not matter for present purposes):

i) First, he explained that in GSM/GPRS, 'training sequence' bits were used both for synchronisation and for channel equalisation.

ii) Second, he explained that in UMTS/HSUPA, pilot bits on the uplink DPCCH were used for multiple purposes.

Duplexing and multiple access

69. Duplexing refers to dividing the radio resource between the “uplink” (transmissions from UEs to the network), and “downlink” (transmissions from the network to UEs).

70. Typically, mobile networks use either Frequency Division Duplexing (“FDD”) (using one carrier frequency for the uplink and another for the downlink) or Time Division Duplexing (“TDD”) (using different time periods of the same carrier frequency for the uplink and the downlink).

71. Multiple access allows two or more mobile stations connected to the network to share its capacity (in either the uplink or downlink directions) for transmission and reception of data. There are a variety of multiple access methods which allow the radio resource to be divided between different users so that their signals can be multiplexed (combined) over a shared medium and distinguished from each other at the receiver. Aspects of these methods may also be used in combination.

72. Frequency Division Multiple Access (“FDMA”) divides the available carrier frequencies between different UEs so that each has its own designated uplink and downlink carrier frequency. This was typically used in first generation analogue systems.

73. Time Division Multiple Access (“TDMA”) divides a given carrier frequency into time slots or “sub-frames” and allocates one or more time slots to a given UE. This is the scheme used in GSM.

74. Code Division Multiple Access (“CDMA”) allows transmissions from different users to be sent at the same time on the same frequency, by applying a spreading code that spreads the signal across a wider bandwidth. A form of CDMA called Wideband CDMA (“WCDMA”) is used in UMTS (see below). In WCDMA, channelisation codes (or Orthogonal Variable Spreading Factor “OVSF” codes) are used to spread the signal. A scrambling code is then applied to the spread signal. The combination of the channelisation/OVSF codes and the applied scrambling code can be arranged to be unique to each user. Thus transmissions to and from different UEs can be distinguished from each other at the receiver by “de-spreading” using the particular combination of the channelisation code and scrambling code. However, transmissions in the uplink are not orthogonal.

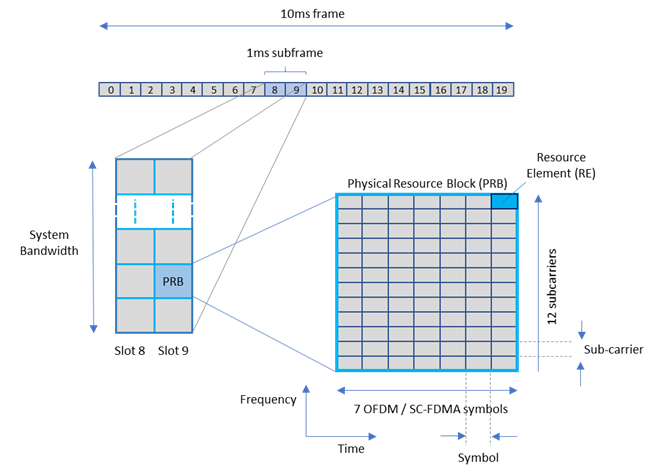
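The spreading and de-spreading described in paragraph 74 can be illustrated with a minimal Python sketch. It assumes chip-aligned, noise-free reception and omits the scrambling code, so it shows only the idealised orthogonal case; as the paragraph notes, real uplink transmissions are not orthogonal. The function names are hypothetical; the code-tree construction (each code c giving rise to [c, c] and [c, -c]) is the standard OVSF rule.

```python
from typing import List


def ovsf_codes(sf: int) -> List[List[int]]:
    """All OVSF codes of spreading factor sf (a power of two), built from the code tree:
    each code c at one level gives rise to [c, c] and [c, -c] at the next level."""
    codes = [[1]]
    while len(codes[0]) < sf:
        codes = [child for c in codes for child in (c + c, c + [-x for x in c])]
    return codes


def spread(symbols: List[int], code: List[int]) -> List[int]:
    """Multiply each data symbol by every chip of the user's code."""
    return [s * chip for s in symbols for chip in code]


def despread(chips: List[int], code: List[int]) -> List[float]:
    """Correlate each block of chips with the user's code and normalise by its length."""
    sf = len(code)
    return [sum(chips[i + k] * code[k] for k in range(sf)) / sf
            for i in range(0, len(chips), sf)]


codes = ovsf_codes(4)  # [[1,1,1,1], [1,1,-1,-1], [1,-1,1,-1], [1,-1,-1,1]]
user_a = spread([+1, -1], codes[1])
user_b = spread([-1, -1], codes[2])
received = [a + b for a, b in zip(user_a, user_b)]  # same frequency, same time
print(despread(received, codes[1]))  # → [1.0, -1.0]  (user A's symbols)
print(despread(received, codes[2]))  # → [-1.0, -1.0] (user B's symbols)
```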

75. Orthogonal Frequency Division Multiple Access (“OFDMA”) is a form of FDMA where each UE is assigned (within a carrier) a number of subcarriers. Subcarriers are overlapping but orthogonal subdivisions of the available frequency band. During a unit of time referred to as an ‘OFDM symbol’, multiple modulation symbols (i.e. a block of modulation symbols) are transmitted in parallel, with each on a different sub-carrier.
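The parallel transmission of a block of modulation symbols on separate subcarriers can be sketched as follows. This is a simplified illustration under stated assumptions: an inverse discrete Fourier transform (written out directly rather than using an FFT library) generates the time-domain OFDM symbol, there is no cyclic prefix, channel or noise, and the 8-subcarrier grid and the allocations are hypothetical. Two UEs on disjoint subcarriers are added together and each UE's symbols are still recovered exactly, which is the orthogonality referred to in the paragraph.

```python
import cmath


def idft(x):
    """Inverse DFT (with 1/N scaling): frequency-domain subcarriers -> time samples."""
    n = len(x)
    return [sum(x[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def dft(x):
    """Forward DFT: time samples -> frequency-domain subcarriers."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def ofdma_symbol(symbols, num_subcarriers, first_subcarrier):
    """One modulation symbol per allocated subcarrier (1-to-1 mapping), zeros elsewhere."""
    grid = [0j] * num_subcarriers
    for i, s in enumerate(symbols):
        grid[first_subcarrier + i] = s
    return idft(grid)


N = 8
ue_a = [1 + 1j, -1 + 1j, -1 - 1j, 1 - 1j]  # QPSK symbols on subcarriers 0-3
ue_b = [1 - 1j, 1 + 1j, -1 + 1j, -1 - 1j]  # QPSK symbols on subcarriers 4-7
combined = [a + b for a, b in zip(ofdma_symbol(ue_a, N, 0), ofdma_symbol(ue_b, N, 4))]
received = dft(combined)
rx_a, rx_b = received[0:4], received[4:8]
```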

76. Single Carrier FDMA (“SC-FDMA”) is closely related to OFDMA. As in OFDMA, each UE is given a unique allocation of orthogonal subcarriers on which to transmit a block of modulation symbols (during a unit of time referred to as an ‘SC-FDMA symbol’). However, in SC-FDMA an additional step called a Discrete Fourier Transform (DFT) is applied. This spreads each modulation symbol across a group of subcarriers, so instead of each modulation symbol being transmitted on a single sub-carrier (i.e. a 1-to-1 mapping of modulation symbols to sub-carriers), each modulation symbol is conveyed by a group of sub-carriers, which may vary according to the allocation given to each UE.
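The additional DFT step can be illustrated with a similar sketch. Again this is an idealised, hypothetical illustration (no cyclic prefix, channel or noise; directly computed transforms; an 8-subcarrier grid). An M-point DFT spreads the M modulation symbols before they are mapped to the UE's M subcarriers, and the receiver undoes it with an M-point inverse DFT. Spreading a block in which only one symbol is non-zero shows that symbol appearing on every allocated subcarrier, rather than on a single one as in OFDMA.

```python
import cmath


def dft(x):
    """Forward DFT."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(x):
    """Inverse DFT with 1/N scaling."""
    n = len(x)
    return [sum(x[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def sc_fdma_symbol(symbols, num_subcarriers, first_subcarrier):
    """DFT-spread the block, then map the spread values to the allocated subcarriers."""
    spread_block = dft(symbols)  # each output value mixes all M input symbols
    grid = [0j] * num_subcarriers
    for i, v in enumerate(spread_block):
        grid[first_subcarrier + i] = v
    return idft(grid)


def sc_fdma_receive(samples, first_subcarrier, m):
    """Extract the UE's M subcarriers, then undo the spreading with an M-point inverse DFT."""
    return idft(dft(samples)[first_subcarrier:first_subcarrier + m])


symbols = [1 + 1j, -1 + 1j, -1 - 1j, 1 - 1j]
recovered = sc_fdma_receive(sc_fdma_symbol(symbols, 8, 2), 2, 4)

# A lone non-zero symbol is conveyed by every one of the 4 allocated subcarriers:
single = dft([1, 0, 0, 0])  # → four equal values of 1
```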

77. OFDMA and SC-FDMA are used in the downlink and uplink, respectively, for LTE. The common general knowledge on LTE is addressed below.

Orthogonality

78. In telecommunications the term “orthogonal” has come to refer to signals that can be separated and distinguished from one another (e.g. when received by a receiver) in such a way that they do not interfere with one another. Orthogonality between signals can be achieved by separation in the time domain, frequency domain, or code domain. As an example of code-domain orthogonality, at zero offset (where the signals are aligned), the dot-product of the sequences [+1 +1 +1 +1] and [+1 +1 -1 -1] is equal to (1×1) + (1×1) + (1×-1) + (1×-1) = 0.
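The arithmetic in paragraph 78 can be checked with a few lines of Python. The qualification "at zero offset" matters: if one sequence is delayed by a single chip (zero-filled, which is a simplifying assumption), the same two sequences no longer give a zero product. The helper names are hypothetical.

```python
def dot(a, b):
    """Sum of element-by-element products (the dot-product)."""
    return sum(x * y for x, y in zip(a, b))


def delayed(seq, k):
    """Delay a sequence by k chips, zero-filling the start (a simplifying assumption)."""
    return [0] * k + seq[:len(seq) - k]


a = [+1, +1, +1, +1]
b = [+1, +1, -1, -1]
print(dot(a, b))              # → 0  (aligned: orthogonal)
print(dot(a, delayed(b, 1)))  # → 1  (one-chip offset: no longer orthogonal)
```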

79. In all of the multiple access systems referred to above, it is important that the signals from different users can be distinguished from one another. This may be achieved by allocating resources that are orthogonal, but the fact that signals can be distinguished from one another does not mean they are orthogonal. In telecommunications, signals that are orthogonal do not interfere with each other.

Radio resource management and scheduling

80. Multiple access schemes require a mechanism for sharing resources among different users. Sharing mechanisms may be centralised or decentralised. In a decentralised approach, users contend for radio resources using an access protocol. In a centralised scheme, access to resources is controlled by a central network entity - commonly the base station. This is referred to as scheduling.

81. Resources may be assigned to a UE on a long-term basis (often referred to as a dedicated channel) or short-term basis.

82. Since transmission resources are scarce, any scheduling scheme needs to be designed to maximise the efficiency with which uplink transmission resources are assigned to and used by mobile stations so as to maximise the capacity of the cell in terms of data throughput while taking into account the data rate demands of the users of the system.

83. Dynamic scheduling is the process of providing short-term allocations of resources to users, typically lasting one to a few milliseconds.

84. In an uplink dynamic scheduling scheme, the network allocates resource to users as and when they are needed, based on their current traffic (i.e. the presence and/or arrival of data in the UE’s transmission buffer). The base station can dynamically allocate uplink resources to each user through the use of fast downlink control signalling, that carries information such as the location and quantity of the resource assigned at a particular instant in time. This allocation is generally referred to as a ‘grant’.
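The idea of a grant carrying the location and quantity of the resource assigned can be sketched as below. This is a hypothetical illustration only: resource is counted in abstract "resource blocks", each assumed to carry a fixed number of bytes, and UEs are served in the order listed until the resource for the interval is used up. A practical scheduler would also weigh channel quality and priority.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Grant:
    ue: str
    first_rb: int  # location: index of the first resource block assigned
    num_rbs: int   # quantity: number of contiguous resource blocks assigned


def allocate_grants(buffers: Dict[str, int], total_rbs: int, bytes_per_rb: int) -> List[Grant]:
    """Grant contiguous resource blocks for one scheduling interval to UEs with pending data."""
    grants: List[Grant] = []
    next_rb = 0
    for ue, pending_bytes in buffers.items():
        if pending_bytes == 0:
            continue                                # nothing buffered: no grant
        if next_rb >= total_rbs:
            break                                   # resource for this interval exhausted
        needed = -(-pending_bytes // bytes_per_rb)  # ceiling division
        n = min(needed, total_rbs - next_rb)
        grants.append(Grant(ue, next_rb, n))
        next_rb += n
    return grants


print(allocate_grants({"ue1": 250, "ue2": 0, "ue3": 1000}, total_rbs=10, bytes_per_rb=100))
```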

85. In systems that also support AMC, the network may use the fast downlink control signalling not only to dynamically allocate shared uplink channel resources, but also to indicate the modulation and coding scheme that is to be applied for the upcoming data transmission on those resources. Fast downlink signalling may also be used to control the transmission power of UEs to limit interference within the cell.

86. As referred to above, dynamic scheduling schemes may employ channel dependent scheduling in determining when to grant resources and what resources to grant to different users.

87. To operate dynamic scheduling effectively, the scheduler must know whether each user has traffic pending. In the uplink, the data pending for each UE is stored in its transmission buffers. This requires there to be means for each UE to communicate its buffer status to the network.

88. Systems using dynamic scheduling may therefore employ a buffer reporting mechanism. The buffer report may contain information such as the total number of bytes awaiting transmission, or the number of bytes in each of several queues that may be associated with different transmission priorities.
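A buffer report of the kind described in paragraph 88 might be assembled as follows. This is a hypothetical sketch: the queue names are invented, and the report carries raw byte counts, whereas a standardised report would be encoded in a defined message format.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class UplinkBuffers:
    queues: Dict[str, int] = field(default_factory=dict)  # bytes waiting per priority queue

    def enqueue(self, priority: str, nbytes: int) -> None:
        self.queues[priority] = self.queues.get(priority, 0) + nbytes

    def buffer_report(self) -> Dict[str, object]:
        """Total bytes awaiting transmission plus the bytes held in each queue."""
        return {"total_bytes": sum(self.queues.values()), "per_queue": dict(self.queues)}


ue = UplinkBuffers()
ue.enqueue("high", 120)
ue.enqueue("low", 800)
ue.enqueue("high", 30)
report = ue.buffer_report()
```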

Standardisation

89. Standardisation enables interoperability of telecommunications networks. There have been three principal digital wireless telecommunications standards, spanning 2nd, 3rd and 4th generation (2G, 3G and 4G) technologies. The first of these was originally developed by an industry-wide collaborative group known as ETSI (European Telecommunications Standards Institute). Another, larger group called the Third Generation Partnership Project (3GPP), which includes ETSI, developed the 3rd and 4th generation technologies.

90. Standards are embodied in technical specifications (TS) which set out the functionality that is to be implemented by equipment in order to comply with the standard. There are many different TSs covering different aspects of functionality required by the standard. In 3GPP, TSs are produced by technical specification groups (TSGs) that each have responsibility for a different overall technical area. Within each TSG there are several smaller Working Groups that are each focused on different specific aspects of the technology for which the TSG is responsible. A standard may have several principal “releases” through which new features are introduced. Within each release, different versions of each TS may be produced, for example to correct errors or to improve the clarity of the specification. At the point where the overall functionality to be included into a particular release has been defined, the release is said to be functionally “frozen”: after that time no new features may be added (though corrections and clarifications may still be made by means of new TS versions within the same release).

System examples

GSM/GPRS

91. GSM is a second generation (2G) wireless telecommunications standard. It was initially developed to support circuit switched services. The General Packet Radio Service (GPRS) architecture was added later to support packet switched services.

92. Multiple access in GSM/GPRS is achieved through a combination of TDMA and FDMA.

93. Further details of GSM/GPRS were given by Mr Bishop, and were agreed to be CGK. The only point which it is necessary to bring out is the point I have already adverted to above, that in GSM and GPRS, known reference bits (called "training sequence" bits) were included in transmission bursts for synchronisation and channel equalisation purposes.

UMTS/WCDMA

94. The Universal Mobile Telecommunications System (UMTS) is a third generation, or 3G, wireless telecommunications standard developed by 3GPP. The first UMTS release was Release 99, frozen in March 2000. Subsequently Release 5 introduced High-Speed Downlink Packet Access (“HSDPA”) and Release 6 introduced High-Speed Uplink Packet Access (“HSUPA”) - together “HSPA”.

95. UMTS supports both FDD and TDD modes and both modes employ CDMA. The FDD mode (often referred to as WCDMA - see above) is deployed more widely than the TDD mode.

96. In WCDMA, data from each user is spread across a wider bandwidth by multiplying each modulation symbol by a spreading sequence called an Orthogonal Variable Spreading Factor (“OVSF”) code or “channelisation code”, as described above.

97. Because transmissions from different UEs on the uplink are not orthogonal and may interfere with each other, the interference must be managed. In order to do so, the Node B controls the power of the transmissions from each UE.

HSPA

98. HSPA introduced several features designed to enable higher data rates. HSDPA used higher order modulation schemes and dynamic scheduling, with adaptive modulation and coding schemes, to increase the theoretical downlink data rate available to mobiles to up to 14.4 Mbps for short periods. It also introduced a shorter 2ms time interval over which resources could be allocated, called a Transmission Time Interval (“TTI”). HSUPA introduced the Enhanced Uplink Dedicated Channel (“E-DCH”) and gave control over resource allocation to the Node B. This increased efficiency by allowing dynamic scheduling by the Node B.

99. The Node B dynamically schedules uplink transmissions by providing UEs with a grant that determines the maximum transmission power that the UE may use for scheduled transmissions, which may be adjusted on a TTI-by-TTI basis.

Pilots in UMTS/HSPA

102. In UMTS a Common Pilot Channel (“CPICH”) was defined as a downlink reference. Known pilot bits were also included in the downlink and uplink Dedicated Physical Control Channels (“DPCCHs”).

103. Reference signals are used in UMTS/HSUPA for several purposes including coherent demodulation, SIR measurement and power control.

LTE

104. To ensure 3GPP radio access technology would remain competitive over a long period, it was necessary to consider a long-term evolution of the 3GPP system architecture, optimised for packet data, and potentially involving a new radio-access technology. Aspects that were identified to improve packet data performance included reduced latency, enabling more rapid access to necessary resources through flexible and traffic dependent scheduling, as well as the support of higher data rates.

105. The parties agree that the following happened as a matter of fact. The first high level study, which introduced the term “3G Long Term Evolution” was published in September 2003. A workshop took place at the end of 2004 which considered OFDM as a technology for the downlink. Subsequently, feasibility studies were begun and largely completed by June 2006. This resulted in the following technical reports (“TR”), each of which had been published and was available to the public to download at the priority date:

a. TR 25.813 v7.0.0 on E-UTRAN Protocol Architecture;

b. TR 25.814 v7.0.0 Physical Layer Aspects for Evolved UTRA;

c. TR 25.912 v7.0.0 Feasibility Study for Evolved UTRA and UTRAN.

106. As I touched on earlier, there remained a mini-dispute as to the extent to which the content of these TRs was CGK. The Claimants’ case is that it was CGK that a TR had been published which set out the core functionality proposed for the radio aspects of the proposed system (this was TR 25.912). The Claimants also accept that TR 25.912 (and the other two TRs) were available to be consulted for the current state of LTE development. The Claimants do not accept that this means that the contents of the three TRs were CGK.

107. The Defendants’ case is that it was CGK at the priority date that each of the three TRs existed and, to the extent that the details contained therein were not already known to the skilled person without consulting the document concerned, the documents themselves were available to be consulted for the current state of LTE development.

108. This dispute has no impact on any of the issues I have to decide, but I incline to the view that these TRs would have been consulted by the skilled person, given any reason to do so. In other words, it would have been obvious for the skilled person to obtain the information, given any need to do so. This does not make the contents of these TRs CGK - see KCI v Smith & Nephew [2010] EWHC 1487 (Pat), [2010] FSR 31, Arnold J at [112].

Kwon

109. Although logically I should consider the disclosure of the prior art, Kwon, at this point, the Defendants’ arguments on Kwon are really only comprehensible once one has an understanding of the arguments on Essentiality. For that reason, I will consider Kwon after Essentiality.

THE PATENTS

110. The patents have a priority date of 22 September 2006, 689 being the parent and 259 a divisional application. The text of the two patents is largely and materially identical, the differences lying in the claims and in the corresponding consistory clauses. The parties were in agreement that it is best to look primarily at EP689 and I will do the same, noting the differences in EP259.

111. Although three issues of construction arise, by far the most important is concerned with the proper interpretation of the term ‘pilot symbol(s)’. The Defendants argue that in the context of these patents, the term ‘pilot symbol’ in the claims means a channel quality pilot, i.e. a pilot symbol which is used, at least, for channel quality determination. The argument depends on close analysis of certain early paragraphs in the specifications, so it is necessary to set them out in detail before I come to address construction.

112. As I mentioned, both patents are entitled ‘Method and device for transferring signals representative of a pilot symbol pattern’, wording which also features in [0001] in the context of a telecommunications system and the transfer of such signals to a telecommunications device.

113. Paragraph [0002] explains that in some telecommunications networks, the access by mobile terminals to the resources of the network is decided by the base station.

114. Paragraphs [0003] and [0004] describe a prior art uplink scheduling scheme, albeit one which neither expert recognised. In that scheme, when a mobile needs to transmit data, it requests the base station to allocate it a pilot symbol pattern. The base station allocates to each requesting mobile a pilot symbol pattern and a pilot allocation time (e.g. 20ms). Throughout that period, each mobile must periodically (e.g. every ms) transfer to the base station signals representative of the pilot symbol pattern it has been allocated. The base station determines the channel conditions which exist between itself and each mobile, using the signals received, and schedules mobiles for uplink transmission based on those channel conditions.

115. The experts were agreed that the reference to ‘signals representative of the pilot symbol pattern’ allocated to the mobile would be understood by the skilled person to refer to signals derived from the pilot symbol pattern allocated by the base station to that mobile and he or she would understand them to be pilot signals. Generally, the term ‘pilot symbol pattern’ is used throughout the specification but is not used in the claims or in the consistory clauses, which use the term ‘pilot symbol’(s) instead. The experts used the shorthand ‘pilot signals’ and I will do the same.

116. In [0005]-[0007], two issues with the prior art scheme are identified. First, resources may be allocated to the mobile at times when it has no data to transfer, so the resources of the system are used inefficiently. Secondly, the mobile wastes battery power ("electric power resources") by sending pilot signals at times when it has no data to transfer. The patents explain at [0008] that one possible solution is to shorten the pilot allocation time, but that leads to more messaging between the mobiles and the base station.

117. In [0009] there is a brief recognition of a prior art patent in which pilot signals are modulated by power control information. In [0010], it is noted that in the prior art, pilot symbol patterns ‘are transferred for the only purpose of channel conditions determination’, a phrase in which the Defendants are keen to stress the word ‘only’.

118. Then [0011] contains a statement of the aim of the invention. In this and the further paragraphs quoted from the Patent, I have underlined the expressions on which the Defendants place particular emphasis:

"The aim of the invention is therefore to propose methods and devices which allow an improvement of the above mentioned technique and which enable to use signals representative of a pilot symbol pattern for another purpose than channel conditions determination, in order to improve the use of the resources of the telecommunication system and to better use of the electric power resources."

119. Then, after two consistory clauses, EP689 continues at [0014]-[0018]:

"[0014] Thus, the signals representative of the pilot symbol pattern can be used for another purpose than channel conditions determination. As the transmission power of the signals representatives of the pilot symbol pattern is adjusted to information associated to data to be transferred to the first telecommunication device [i.e. the base station], the first telecommunication device is able to execute further process based on the signals representative of the pilot symbols patterns.

[0015] Furthermore, as the signals representative of pilot symbol patterns convey another information, the resources of the telecommunication network are used efficiently.

[0016] (absent from EP259) [According to a particular feature, the information associated to data to be transferred is the existence or not of data to be transferred and if no data to be transferred exist, the transmission power is adjusted to null value.]

[0017] Thus, the first telecommunication device is precisely informed if data has to be transferred or not by the second telecommunication device [i.e. the mobile].

[0018] Furthermore, if the second telecommunication device has no more data to transfer, it reduces its electric power resources consumption."

120. EP689 continues with further consistory clauses which I have considered but need not relate because (a) it is common ground that I need only consider claim 1 and (b) they shed no light on the key issue of the construction of ‘pilot symbol(s)’. Paragraph [0032] introduces the 6 figures and the remainder of the specification from [0033] to [0126] describes example embodiments by reference to those figures. The specification concludes in a familiar manner with the reminder in [0127] that ‘Naturally, many modifications can be made to the embodiments of the invention described above without departing from the scope of the present invention.’

121. The specification of EP259 is identical in [0001]-[0011]. Then, of course, the consistory clauses and the alleged advantages of other embodiments differ until the two patents resume identical text from the introduction to the figures, which occurs in [0025] in EP259, to the end of the specification, where [0120] in EP259 has the identical conclusion as [0127] in EP689.

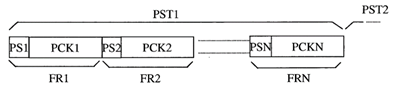

122. Of the figures, figures 1-3 show the basic elements of the telecommunications system, base station and mobile terminal. Figure 4 shows a time-domain overview of uplink resources:

123. In Figure 4, a time period corresponding to a first Pilot Allocation Time Duration or PST (PST1) is shown to comprise “N” time frames (labelled FR1 to FRN). Reverting to references to EP689, paragraph [0068] explains that each time frame is composed of two time slots: a pilot time slot portion (labelled in the patent as PS) and a data packet time slot portion (labelled in the patent as PCK).

124. The pilot time slots are used by the mobile terminal to transfer the pilot signal which has been allocated to the mobile terminal by the base station. Thus, in a PS time slot, the base station may receive pilot signals from multiple mobile terminals.

125. As explained in paragraph [0072], the pilot symbols allocated to a mobile terminal to send in PST1 may be equal to or different from those allocated to the same mobile terminal to send in PST2.

126. Paragraph [0069] explains that, within the described invention, the mobile terminal may convey information to the base station regarding the status of pending uplink data by means of adjusting the power of the pilot signals sent within the PS time slots of a PST: “The transmission power of the signals representatives [sic] of the pilot symbol pattern is adjusted according to information associated to data to be transferred”.

127. Paragraph [0069] does not explain further the detailed nature of how the “adjustment” is derived from the information to be transferred, which the skilled person would understand could take a number of forms. However, paragraph [0070] describes one approach in which it is explained that “if the transmission power of the signals representatives of the pilot symbol pattern is set to null value for a time slot PSn with 1 ≤ n ≤ N, the transmission of the signals representatives of the pilot symbol pattern can be also understood as a non-transmission of the signals in the time slot PSn.” In other words, one type of adjustment is to not transfer the pilot signal at all (or to set the power to null, which is the same thing). From the description of Figure 5, and also for example from paragraph [0115] in respect of Figure 6, the skilled person would understand that this provides a binary indication as to whether or not there is pending data at the mobile terminal and that this information is conveyed by the presence or absence of the pilot signal.

128. An alternative (or additional) approach is where, in the case that the pilot signal is present (i.e. when it does not have a power set to zero) its power is adjusted according to a range of power levels. This could then provide further information, for example, on the Quality of Service (QoS) requirements of the pending data. These two approaches are expanded on in the context of Figure 5.

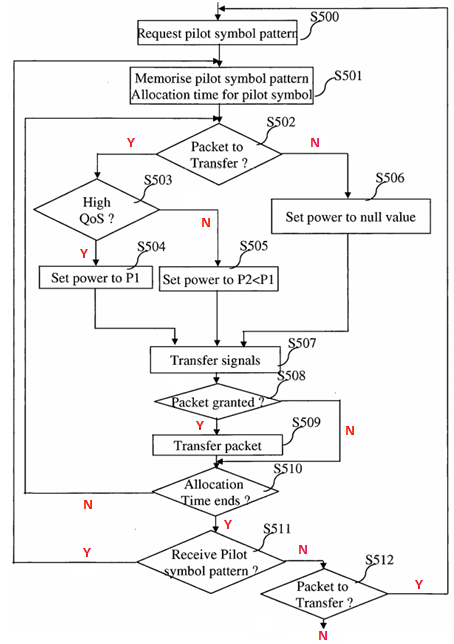

129. Figure 5 in each Patent shows the algorithm operated in the mobile terminal but omits the Y/N indications at each decision point. With those added, Figure 5 looks like this:

130. In steps S500 - S501, the mobile terminal requests and receives a pilot symbol pattern from the base station and an allocation time for transferring the pilot signal. In subsequent steps S502 - S506 (as described at paragraphs [0079] - [0085] and [0095] - [0096]) the power of the pilot signal is adjusted by the mobile terminal to one of a high value (P1), a lower value (P2) or to zero (a null value) in steps S504, S505 and S506 respectively, according to the decision flows at steps S502 and S503. If there is a packet to transfer at S502, the pilot signal power is set to a power, P1 or P2, depending on whether the required QoS is high or low (see paragraphs [0082] - [0085]). On the other hand, if the device determines at step S502 that there is no packet to transfer, then the mobile terminal moves to step S506 and the power of the pilot signal is set to zero. This is therefore implementing the approach introduced in paragraph [0070] and which is further mentioned in paragraph [0115] (which I describe below), wherein the presence or absence of the pilot signal indicates to the base station whether or not the mobile terminal has data packets to transfer (as I have noted, the 689 patent equates (at paragraph [0070]) the transmission of a pilot signal at zero power with a non-transmission of the pilot signal). This achieves the benefits of avoiding the inefficiencies associated with mobile devices sending pilot signals over the entire allocation period even when they have no data to transfer.
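The power decision at steps S502 to S506 of Figure 5 can be expressed as a small function. This is an illustrative sketch, not code from the patents: the numerical values chosen for P1 and P2 are hypothetical, since the patents describe only a high value, a lower value and a null value.

```python
P1_HIGH = 1.0  # hypothetical value for the high pilot power (S504)
P2_LOW = 0.5   # hypothetical value for the lower pilot power (S505)
NULL = 0.0     # null value: equivalent to not transmitting the pilot (S506)


def pilot_power(has_packet: bool, high_qos: bool) -> float:
    """Steps S502-S506 of Figure 5: choose the power for the next pilot transmission."""
    if not has_packet:                       # S502: no packet to transfer
        return NULL                          # S506
    return P1_HIGH if high_qos else P2_LOW   # S503: QoS high -> S504, low -> S505


for has_packet, high_qos in [(True, True), (True, False), (False, True)]:
    print(has_packet, high_qos, pilot_power(has_packet, high_qos))
```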

131. Following the power adjustment at steps S504 and S505 (or decision to set a null value at S506), at S507 the pilot signals are transferred in a PS time slot (or not transferred as the case may be). Thereafter, the mobile terminal checks (at S508) as to whether a message has been received from the base station authorising it to transfer a packet (paragraph [0088]) and if so, the packet is transmitted on the uplink in step S509.

132. A check is then made to determine whether the PST duration has ended (S510). If the PST is ongoing, the algorithm returns to S502 and iterates to set the pilot signal power for a subsequent pilot transmission opportunity (or pilot time slot, PSn). As described in paragraphs [0095] - [0098], if all the data has been transferred in step S509, the system will progress to step S506 and set the power of the pilot signal to a null value (in other words, it will stop transmitting the pilot signal) to indicate to the base station that it no longer has data to transmit. Thus the mobile terminal will continue to indicate whether or not it has data to transfer for the duration of the pilot allocation time, and in response may receive (or not) authorisation from the base station to transfer data. Such authorisations will cease when all data has been transmitted, because the mobile terminal will cease to transmit the pilot signal.

133. Conversely, if the PST has ended, the mobile terminal optionally checks (at S511) whether another PST has been allocated and if so, continues in a similar fashion within the new PST. If at S510 the PST had ended, the mobile terminal no longer transmits pilot signals on the PS time slots: it therefore proceeds to step S512, and if further uplink data is present, must instead first request another pilot symbol pattern by sending a message to the base station (S500). If, at this stage, no data is present, the mobile terminal ends the algorithm, which may then be initiated at a later point in time if further data arrives in the transmission queue (paragraph [0102]). As explained at paragraph [0103], the check at S511 may be left out, in which case once the PST is ended the mobile terminal moves straight to step S512.
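The mobile terminal's loop over one PST (steps S502 to S512) can be simulated in the same way. Assumptions: the packet queue is represented as a list of flags (True for a packet requiring high QoS), the base station's authorisations at S508 are supplied as a set of hypothetical frame indices, one packet is sent per authorisation, and the optional check at S511 is treated as finding no further PST, so the terminal goes straight to S512. The function name and power values are hypothetical.

```python
from collections import deque
from typing import Iterable, List, Set, Tuple


def run_pst(packets: Iterable[bool], authorised_frames: Set[int], num_frames: int,
            p_high: float = 1.0, p_low: float = 0.5) -> Tuple[List[float], bool]:
    """Simulate one PST of num_frames time frames at the mobile terminal.

    Returns the pilot power used in each PS slot and whether the terminal must
    send a new pilot request (S500) once the PST has ended (S512)."""
    queue = deque(packets)  # True = packet needing high QoS (hypothetical encoding)
    powers: List[float] = []
    for n in range(num_frames):
        if queue:                                   # S502: packet to transfer?
            power = p_high if queue[0] else p_low   # S503 -> S504 / S505
        else:
            power = 0.0                             # S506: null value
        powers.append(power)                        # S507: pilot sent (or not)
        if n in authorised_frames and queue:        # S508: authorisation received?
            queue.popleft()                         # S509: packet transferred
    # S510: PST ended; S511 assumed to find no further PST allocated.
    return powers, bool(queue)                      # S512: data still pending?


powers, needs_request = run_pst([True, False], authorised_frames={0, 2}, num_frames=4)
```

Once the queue empties, the pilot power drops to the null value, which is how the terminal signals that it has no more data to transfer.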

134. Figure 6 of each patent illustrates a corresponding algorithm for the base station, which is described at paragraphs [0104] - [0126].

135. At the start of the algorithm, the base station (through the processor 200) receives messages from mobile terminals that need to transfer data, requesting an allocation of a pilot symbol pattern (S600). The base station identifies each device that sent a request message (see paragraph [0106]), and pilot symbol patterns are then allocated to each mobile terminal that did (S601). As described at paragraph [0109], the base station also activates the PST.

137. Within a PST, the base station receives (S602) pilot signals from the mobile terminals and analyses these at step S603. An example is provided in paragraph [0111] wherein the base station may use the pilot signals to determine channel conditions by means of measuring the received power for each of the pilot signals.

138. At step S604, the base station selects a mobile terminal “to which next uplink time frame is allocated”. By this the skilled person would understand the description to be referring to the allocation of resource in the next time frame for the mobile terminal to send data. This is said in paragraph [0112] to be done “using the channel conditions”, but paragraphs [0113] - [0114] provide some further detail by means of an example. In the example of the scheduling operation given in paragraph [0113], the mobile terminal with the highest received pilot signal power is selected.

139. Paragraph [0114] describes a further variant, wherein “if each second telecommunication device 20 sets the transmission power of the signals representative of a pilot symbol pattern according to the packet it has to transfer, the probability that the first telecommunication device 10 allocates the next time frame to a second telecommunication device 20 which has a packet which has an associated high quality of service is increased”. The skilled person would understand this to be referring to an alternative approach, as seen in Figure 5, wherein resources are preferentially allocated to those mobile terminals with data requiring a high quality of service (which again may be indicated by the mobile terminal increasing the power of the pilot signal transmission).

140. Paragraph [0115] then explains that: “as each second telecommunication device 20 sets the transmission power of the signals representative of a pilot symbol pattern to null value when no packets need to be transferred, the first telecommunication device 10 never allocates the next time frame to a second telecommunication device 20 which has no packet to transfer, optimizing then the resources of the wireless network 15”. This is the approach described in general terms in paragraph [0070] and again in the context of Figure 5, wherein the mobile terminal indicates whether it has any packets to transfer by the presence or absence of the pilot signal: if not, the mobile terminal indicates that to the base station by setting the power to a null value, which avoids the inefficiencies of unnecessary transmission of the pilot signal as I have described.
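The selection at step S604, in the example given in paragraph [0113], together with the effects described in paragraphs [0114] and [0115], can be sketched as follows. The function name and power figures are hypothetical. The received power reflects both the channel and the power the terminal chose (P1, P2 or null); the sketch simply picks the highest received pilot power and never selects a terminal whose pilot was at the null value.

```python
from typing import Dict, Optional


def select_mobile(received_pilot_power: Dict[str, float]) -> Optional[str]:
    """Allocate the next time frame to the terminal with the strongest received pilot."""
    active = {ue: p for ue, p in received_pilot_power.items() if p > 0.0}
    if not active:
        return None  # every pilot was null: no terminal has data to send
    return max(active, key=active.get)


# ue_b raised its pilot to P1 for a high-QoS packet; ue_c sent a null pilot.
print(select_mobile({"ue_a": 0.4, "ue_b": 0.9, "ue_c": 0.0}))
```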

141. Paragraphs [0116] - [0125] then describe further ways in which the base station takes into account the presence or absence of a pilot signal (i.e. whether it had power set to a null value or not) and explain that this may be used to determine whether a given mobile terminal has data packets remaining to transfer at the end of the PST. In the scheme that is described, at step S605, the base station registers if a pilot signal has not been received, which occurs when a mobile terminal sets the transmission power to a null value. At step S606 the base station sets a binary value M(k) for each kth mobile terminal based on whether a pilot signal for that user was received or not. For those mobile terminals from which a pilot signal was received, the base station sets a value of M(k)=1 whilst for those mobile terminals from which a pilot signal was not received, the base station sets M(k) to a null value - i.e. M(k)=0. Paragraph [0120] explains that M(k) therefore indicates those devices that have no more data to send at the end of the PST (though as explained at paragraph [0115] receipt of a null value at an earlier point in time within the PST is used to ensure that the base station does not allocate resources to that mobile terminal while the PST is ongoing).

142. At step S607, if the PST has not yet ended the algorithm proceeds back to step S602. At the end of the PST, at step S608, M(k) is then used to determine those mobile terminals which still have data to send and therefore require another (follow-on) PST (see paragraph [0123]). There is a variant of this approach described in paragraph [0125] in which the base station counts the number of occasions on which a pilot signal (i.e. a non-null value) is received from each mobile terminal. The allocation of a further pilot symbol pattern at step S600 is then based on whether the number of such occasions for a given mobile terminal exceeded a threshold.
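The end-of-PST decision, and the counting variant in paragraph [0125], might be sketched in Python as follows. This is an assumption-laden illustration rather than the patentee's algorithm. The function name needs_follow_on_pst and the representation of M(k) as one dictionary of flags per frame are hypothetical. The sketch also assumes that M(k) is refreshed in every frame, so that the final frame's flags reflect the position "at the end of the PST".

```python
from typing import Dict, List, Optional, Set


def needs_follow_on_pst(flag_history: List[Dict[int, int]],
                        count_threshold: Optional[int] = None) -> Set[int]:
    """Decide, at the end of a PST, which terminals get a follow-on PST.

    flag_history holds the M(k) flags recorded in each frame of the PST
    (hypothetical representation). With count_threshold=None, the flags from
    the final frame decide, as at step S608. Otherwise the [0125] variant
    applies: a terminal qualifies if the number of frames in which its pilot
    was received exceeds the threshold.
    """
    if not flag_history:
        return set()
    if count_threshold is None:
        return {k for k, m in flag_history[-1].items() if m == 1}
    counts: Dict[int, int] = {}
    for flags in flag_history:
        for k, m in flags.items():
            counts[k] = counts.get(k, 0) + m
    return {k for k, c in counts.items() if c > count_threshold}


history = [{0: 1, 1: 1}, {0: 0, 1: 1}, {0: 0, 1: 1}]
print(needs_follow_on_pst(history))                     # {1}
print(needs_follow_on_pst(history, count_threshold=0))  # {0, 1}
print(needs_follow_on_pst(history, count_threshold=2))  # {1}
```

The two approaches can give different answers. Terminal 0 fell silent after the first frame, so the final-frame flags exclude it. It still passes a counting threshold of zero.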

Construction/Scope of the Claims

143. This case has not involved any arguments as to equivalents, so my task is to undertake a ‘normal’ interpretation of the claims: see Eli Lilly v Actavis UK Ltd [2017] UKSC 48. This is a very familiar test, but I am reminded that it remains an exercise in purposive construction (Icescape Ltd v Ice-World International BV [2018] EWCA Civ 2219 at [60] per Kitchin LJ (as he then was)). It is an objective exercise and the question is always what a skilled person would have understood the patentee to be using the words of the claim to mean.

144. In this case, the terms in issue are underlined in what follows. Claim 1 of the 689 patent, broken down into integers, reads:

[A] Method for transferring of data from a mobile terminal to a base station once a wireless resource enabling the transfer of data from the mobile terminal to the base station has been allocated, the method comprises the steps executed by the mobile terminal of:

[B] - transferring to the base station information indicating the need or not of a wireless resource for the transfer of data from the mobile terminal to the base station based on pilot symbols provided by the base station,

[C] - receiving, in response to the transferred information, from the base station information indicating that a wireless resource is allocated to the mobile terminal,

[D] - transferring to the base station data in the wireless resource indicated as allocated to the mobile terminal.

145. Claim 1 of the 259 patent is as follows, again broken down into integers:

[A] Method for scheduling the transfer of data from a mobile terminal to a base station in a telecommunication system, characterised in that the method comprises the steps executed by the mobile terminal of:

[B] - determining if resource of the telecommunication system needs to be allocated to the mobile terminal for transferring data to the base station,

[C] - transferring a pilot symbol before the data transmission if resource of the telecommunication system needs to be allocated to the mobile terminal for transferring data to the base station.

146. As indicated above, the issues of construction arise on integer B for EP689 and on integer C for EP259. The Defendants say that ‘pilot symbol(s)’ has the same meaning in both patents. It is convenient to address the interpretation of that term in claim 1 of EP259 first.

‘pilot symbol’

147. As Mr Bishop pointed out, the specifications identify three purposes for pilot symbols:

i) First, to identify the mobile terminal with the best uplink radio channel and thereby efficiently allocate uplink resources for data transmission to mobile terminals that are best able to use them;

ii) Second, to indicate QoS. The mobile terminal selects the transmission power of the signals representative of a pilot symbol pattern based on the Quality of Service of the data it has to send;

iii) Third, as a request for uplink resources. The mobile terminal stops sending signals representative of a pilot symbol pattern when it no longer has data packets in the transmission queue, in order that the base station does not allocate resources unnecessarily to mobile terminals that no longer have data to send.

148. It seems to be common ground that the first of these purposes was a conventional purpose of such symbols (for channel dependent scheduling) at the priority date, whereas the second and third purposes are the additional ones presented by the patents (even though the claims 1 are only concerned with the third purpose).

The arguments

149. The Claimants’ argument is simple: the term ‘pilot symbol’ bears the ordinary meaning of a pilot or reference signal, i.e. a pre-defined signal known to both transmitter and receiver (see paragraph 51 above). The Defendants professed a great deal of forensic bafflement as to what the Claimants’ position was. They suggested that the Claimants’ argument amounted, in effect, to saying that any signal can be a pilot and, similarly, that the Claimants’ interpretation included a mere request message (e.g. the step (1) message in Kwon). However, it is clear that the Skilled Person would have readily understood what the Patents were talking about when they referred to pilot or reference signals.

150. What a mere request message and a reference signal have in common is that the signal must be known to both transmitter and receiver (e.g. so that the receiver knows it has received a request for resources). At one level, both signals have some sort of predefined structure for that purpose, although the structure of a mere request message need only allow it to be recognised as such. However, in my view there remained a clear distinction in the mind of the Skilled Person between a mere request message for resources and a pilot or reference signal. A pilot or reference signal has a predefined structure (known to both transmitter and receiver) and is also designed to have good auto-correlation properties (i.e. to have zero or a very low correlation with itself at non-zero offsets). It is this latter characteristic which made the reference signal capable of carrying out the various purposes for which reference signals were used. A mere request message would not have that capability. I realise that the Agreed CGK was that reference signals ‘commonly’ had ideal auto-correlation properties (see paragraph 53 above) but, in the context of these Patents, the Skilled Person would expect the pilot or reference signals to have at least good auto-correlation properties.
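The auto-correlation property referred to above can be illustrated numerically. The Python sketch below is an illustration only and is not drawn from the Patents or the evidence. It uses a Zadoff-Chu sequence, a well-known family of sequences with ideal periodic auto-correlation. It contrasts that sequence with a flat, all-ones pattern, which correlates with itself as strongly at every offset as at zero offset. The choice of sequence, root, length and function names are all assumptions made for the sketch.

```python
import cmath
from typing import List, Sequence


def zadoff_chu(root: int, length: int) -> List[complex]:
    """Zadoff-Chu sequence for odd `length`, with `root` coprime to `length`."""
    return [cmath.exp(-1j * cmath.pi * root * n * (n + 1) / length)
            for n in range(length)]


def periodic_autocorrelation(seq: Sequence[complex], shift: int) -> float:
    """Magnitude of the cyclic correlation of `seq` with itself shifted by `shift`."""
    n = len(seq)
    return abs(sum(seq[i] * complex(seq[(i + shift) % n]).conjugate()
                   for i in range(n)))


N = 31
zc = zadoff_chu(root=7, length=N)
flat = [1] * N  # trivially structured pattern, for contrast

print(round(periodic_autocorrelation(zc, 0), 6))                         # 31.0
print(max(periodic_autocorrelation(zc, s) for s in range(1, N)) < 1e-9)  # True
print([periodic_autocorrelation(flat, s) for s in (1, 5, 10)])          # [31.0, 31.0, 31.0]
```

For the Zadoff-Chu sequence, the only large correlation value occurs at zero offset. A receiver can therefore locate the signal reliably. The flat pattern gives no such distinction.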

151. The Defendants argue that, in the context of the teaching of the specification, the term must be understood as referring to something which is used not only as a request for uplink resources but also for its conventional purpose of channel quality determination.

152. The Defendants start from the agreed CGK meaning of pilot or reference signal (see paragraph 51 above). They place particular emphasis on ‘reference’ and say the signal must be used as a reference signal. In the context of the Patents, they say the use in question is as a channel quality pilot.

153. There are two main strands to this argument. First, the Defendants focus on the expressions ‘another purpose’ in [0011] and [0014], ‘further process’ also in [0014] and ‘another information’ in [0015]. They contrast this with the statement in [0010] and stress the word ‘only’ as I mentioned above. However, the skilled person would know it was not the case that pilot symbols were only used for channel quality determination. In effect, the Defendants say that ‘another/further’ means ‘additional’ as opposed to ‘different’. They point to the first purpose identified - a channel quality pilot. Hence they say that the pilot symbol of the claims is a channel quality pilot used for another or additional purpose of requesting uplink resources.

154. The Defendants also focus on the claimed advantages of the invention. They identify three:

i) Efficient use of network resources [0015] because the pilot signal is used to convey ‘another information’;

ii) The base station is precisely informed if data has to be transferred or not [0017]; and

iii) The mobile reduces its electric power resources consumption by not transmitting channel quality pilots when it has no data to transmit, relying on [0018].

155. They then say the first advantage arises from using the channel quality pilot both for that purpose and also for indicating a need for resources. They say the other two are not really benefits of the invention on the basis that any sensible system would feature them.

Analysis of the Defendants’ arguments

156. In my view, the Defendants’ arguments on the claimed advantages entail a rather selective reading of the specification, assuming the result they seek rather than independently indicating that it is the right result.

157. In any event, the Defendants’ argument places far too much weight on the way that the patentee chose to introduce and describe his or her invention, distinguishing it from the particular prior art system described in the Patent. This is not quite the same vice as attempting to read into the claim a limitation which features only in an embodiment, but it is close to that. I recognise that the Defendants are relying on the general teaching about what the invention is. Nonetheless, once the description is understood, in my view it is clear that the claims do not require a channel quality pilot to be used for the stated purpose of requesting uplink resources. The distinction is narrow but real. The reference signal may be capable of being used as a channel quality pilot, but the claims 1 do not require the reference signal to be so used. If the claims 1 were limited to channel quality pilots being used to request uplink resources, the skilled person would expect the claims to have said so. The fact that there is no hint at all in the claims of a ‘channel quality pilot’ indicates to me that ‘pilot symbol’ in the claims is not being used in any special sense, as the Defendants argue, but simply in its ordinary sense of a pilot or reference signal.

158. It is common ground that the term ‘pilot symbol’ has the same meaning in claim 1 of EP689 as in claim 1 of EP259, but in any event my finding is reinforced by consideration of claim 1 of EP689. In that method, what is transferred from the mobile to the base station is ‘information….based on pilot symbols provided by the base station’. Nothing in this claimed method requires the pilot symbols to be ‘channel quality pilots’.

159. Accordingly, I reject the Defendants’ arguments on the meaning of ‘pilot symbol(s)’. I am left with the impression that the principal driver for those arguments was the desire to find a non-infringement point, as opposed to being based on an objective assessment of the claims.

160. There is one point to add. The experts agreed that the Patents proposed a new use for reference or pilot signals. At this point it is worth noting, principally because of the arguments I come to later on infringement/essentiality, that whereas traditionally reference or pilot signals did not convey information from higher layers, the new use proposed in the Patents is that reference signals are used to convey information from higher layers: specifically a need for uplink resources.

‘information indicating’

161. In their opening skeleton, the Defendants made a number of points about these words in claim 1 of 689, which the Claimants addressed in their oral opening. It is not entirely clear whether the Defendants maintain those points - they were not mentioned at all in the Defendants’ closing submissions - and they appear to have fallen away in the light of the evidence on Kwon.

‘transferring information indicating the [not] need’

163. The Defendants say that claim 1 of 689 requires information to be transferred which positively indicates the lack of need for uplink resources.

164. In this regard both parties rely on claim 3. Claim 3 of 689 claims a method according to claim 2 (itself dependent on claim 1) characterised in that information indicating the ‘not need’ of a wireless resource for the transfer of data from the mobile terminal to the base station is represented by a signal having a power set to null value.

165. The Defendants submit that the subsidiary claim 3 involves a limitation on claim 1, so the argument goes: claim 1 must be broader. Although subsidiary claims are very frequently narrower than the principal claim, this is not a universal rule. It depends on the circumstances. The Defendants’ construction of claim 1 would have very odd consequences. First, the example embodiment would fall outside claim 1. Second, it would also mean that claim 3 fell outside the scope of claim 1. Third, it would seem to defeat the purpose of the whole invention in which the mobile sends a pilot or reference signal to indicate a need for uplink resources, whereas (on the Defendants’ argument) when it does not need resources it has to transmit (presumably periodically) a positive message indicating no need for resources. This does not make technical sense.

166. The more likely construction is that, on this ‘not need’ point, claim 3 does not introduce an additional limitation to claim 1, and the two claims mean the same thing. Even if I am wrong about this, claim 3 achieves the same result. The only difference is that, because claim 3 depends on claim 2, the ‘not’ need must there be indicated by silence detected periodically at the base station. In relation to claim 1 of EP689, I find that the ‘not’ need can be indicated by silence.

‘information…based on pilot symbols provided by the base station’

168. In terms of ‘pilot symbols provided by the base station’, the Defendants accepted Mr Anderson’s explanation of this requirement:

‘The patent contemplates that the signal sent by the mobile terminal may be based on a pilot symbol pattern provided by the base station, or on information provided by the base station enabling the mobile terminal to identify the pilot symbol pattern (see paragraph [0053], which corresponds to [0046] of the 259 patent).’