
England and Wales High Court (Patents Court) Decisions

AIM Sport Vision AG v Supponor Ltd & Anor [2023] EWHC 164 (Pat) (30 January 2023)

URL: http://www.bailii.org/ew/cases/EWHC/Patents/2023/164.html

Cite as: [2023] EWHC 164 (Pat)


Neutral Citation Number: [2023] EWHC 164 (Pat)

Case No: HP-2020-000050

IN THE HIGH COURT OF JUSTICE

BUSINESS AND PROPERTY COURTS OF ENGLAND AND WALES

INTELLECTUAL PROPERTY LIST (ChD)

PATENTS COURT

The Rolls Building

7 Rolls Buildings

Fetter Lane

London EC4A 1NL

Before:

Mr. Justice Meade

Monday 30 January 2023

Between:

AIM SPORT VISION AG (incorporated under the laws of Switzerland)

Claimant

- and -

(1) SUPPONOR LIMITED (2) SUPPONOR OY (incorporated under the laws of Finland)

Defendants

- - - - - - - - - - - - - - - - - - - - -

PIERS ACLAND KC and Edward Cronan (instructed by Powell Gilbert LLP) for the Claimant

BRIAN NICHOLSON KC and David Ivison (instructed by Ignition Law) for the Defendants

Hearing dates: 1-2 and 17 November 2022

- - - - - - - - - - - - - - - - - - - - -

JUDGMENT APPROVED

Remote hand-down: This judgment will be handed down remotely by circulation to the parties or their representatives by email and release to The National Archives. The deemed time and date of hand down is 10.30am on Monday 30 Jan 2023.

Mr Justice Meade:

The common general knowledge

Virtual graphics for TV broadcasting

The camera and lens calibration

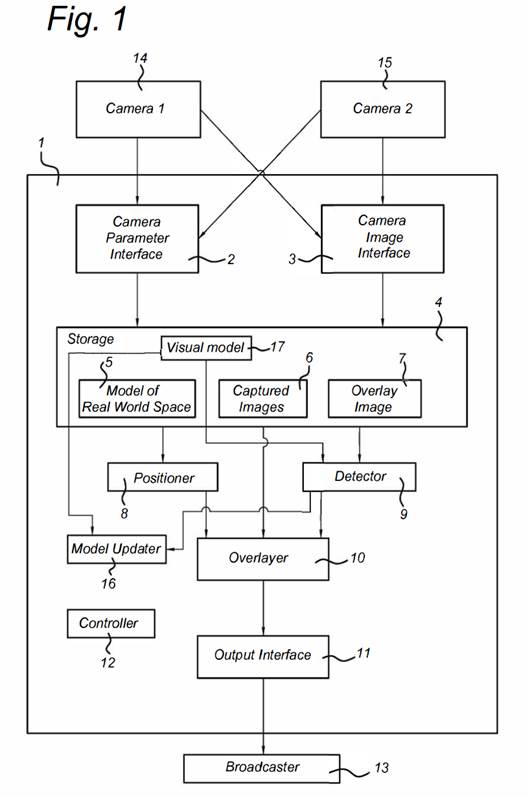

Tracking the position and orientation of the camera

Spatial frequency techniques

Cut and paste and binary masks

Image Feature Identification

Foreground Object Detection

Camera motion and optical flow

Virtual Production around the Priority Date

The Piero sports graphics system

‘1st and Ten’ and ‘First Down Line’

Claim 12 - Revised Amendment 1 (conditional)

Claim 12 - Revised Amendment 2 (conditional)

Issue 1 - claim feature 12.5

Issue 2 - claim feature 12.3

The skilled person’s reaction to Nevatie

Do the amendments make any difference?

“Captured detection image”

LED screen has a uniform, monotone distribution, occluding object is still visible

Introduction

1. The Claimant (“AIM”) alleges that the Defendants (together, “Supponor”, there being no need to distinguish between them) have infringed European Patent (UK) 3 295 663 B1 (“the Patent”).

2. The Patent concerns billboards of the sort seen at sporting venues, and which allow the superimposition on TV broadcasts by electronic means of different advertising material from that seen in the ground and/or different advertising material in transmissions of coverage of the event in different territories. It contains method and product claims, but as will appear below only a single method claim (claim 12) is now in issue.

3. The alleged infringement is part of the Supponor “SVB System”. There are several versions of the system, but for the purposes of this judgment there is no need to go into the details of how they differ and I will just refer to “the SVB System”.

4. There is a conditional application to amend the Patent which is resisted by Supponor. It is conditional because it is put forward on the basis that it gives the claim scope which AIM says is anyway the right one on a proper interpretation of the granted claims.

5. There are parallel proceedings in Germany in which AIM has been successful so far, both on validity and infringement. AIM relied on this somewhat tangentially in its written submissions but I was not actually taken to either of the German Courts’ decisions. Supponor’s main response was that (as sometimes happens in the bifurcated German system) AIM had argued for different claim scopes in the two proceedings. AIM made no answer to this. It does not mean that the German results are adverse to AIM but it limits any help I could get in terms of reaching a correct decision where I have to apply a single consistent claim scope to infringement and to validity. In that context, I do not intend to give any weight to the German decisions and will say no more about them, other than that in taking such an approach I intend no disrespect to the German courts and am not criticising their analyses.

Conduct of the trial

6. The trial was conducted live in Court and there were no COVID issues.

7. The trial took three days; two days almost exclusively taken up with the oral evidence and a third day of oral closing submissions. At the PTR, having read into the case somewhat, I had expressed my concern that that was likely to be too short. I was assured by both leading Counsel that the action was a simple one where there was no dispute about the disclosure of the prior art and that the case really turned on short points of claim interpretation. That was not so. There were a large number of issues and in total I received approaching 200 pages of dense written submissions which, I have to say, lacked much in the way of introduction or overview to help me to orient myself. Specifically because I had been told at the PTR that the case was a simple one, virtually no time was allowed for oral openings and this exacerbated the problems. I extended the length of the day for closing submissions but it was still a very challenging fit.

8. This was all unfortunate. It is a fact of life that trial estimates sometimes go wrong but where the trial judge is able to hear the PTR and specifically interrogates the time allowed and the timetable, the parties need to play their part and give the most accurate guidance that they can.

9. The problem was made worse by the parties keeping issues in play after their usefulness had expired or when they were just not really being run. In particular, Supponor’s skeletons maintained a large number of issues of claim interpretation, but it was only after I pressed their Counsel in closing that it was clarified that only two actually mattered.

10. During the brief oral openings I was given, by AIM, a long list of the issues that it perceived were live in the light of the written openings; this was not an agreed document. After written closings I was given a significantly shorter, but still substantial, agreed list of the issues for decision, from which I have worked. It would have been welcome, and a good discipline, to have had this at the PTR, or at the start of trial.

The issues

11. At a relatively high level, the issues are:

i) Two points on claim interpretation.

ii) Whether the SVB System infringes. This depends entirely on claim interpretation.

iii) Obviousness over Patent Application WO 2013/186278 A1 “Nevatie”.

iv) A squeeze argument over Nevatie, in addition to the allegation that the Patent is obvious over it in any event. This arises from the fact that Nevatie was filed by Supponor, and a major theme of Supponor’s case was that the SVB System was an obvious development of Nevatie.

v) An insufficiency allegation run mainly as a squeeze.

vi) Whether the proposed amendments to the patent are allowable, the sub-issues being:

a) Whether they render the Patent valid in the event that it is invalid without them;

b) Clarity;

c) Added matter.

vii) An allegation by Supponor that the combined effect of two separate admissions by AIM is that AIM admitted that the remaining claim in issue, claim 12, is invalid. I will call this the “Promptu” point.

Overview

12. Given the issues and their interrelation, I think it will assist to give an overview. This is necessarily simplified.

13. LED display boards are used at sporting events to show advertisements. In the stadium the spectators might see an advertisement for, e.g. a beer from a local brewery. The advertisement might well be a moving picture. Advertising in the stadium brings in revenue itself, but there is also money to be made from selling advertising in a broadcast of the event.

14. It was known to be possible to show a different advertisement in the broadcast of an event, and which appeared as if it was on the board in the stadium, by processing the video feed appropriately in real time with computers. So a beer advertisement in the stadium might be replaced with a car advertisement on the same board in a broadcast.

15. An issue with this arose where there was something blocking the camera’s view of the LED display board. It might be a player, the ball, or a bird, for example. That is referred to in the Patent as an occluding object.

16. To give a full, accurate depiction of what is happening in the stadium the occluding object should be included, but its position may well change rapidly and it is a challenge to process the images from the TV cameras in real time while working out what is occluding object and what is advertisement from the LED board.

17. Nevatie, the prior art, addresses this issue (although its main focus is something else). It does so by having boards which emit infra-red (“IR”) light and a camera which detects IR. The system to which the camera is linked “knows” where the board is and thus which pixels in the camera image relate to it. Occluding objects block the IR light, allowing pixels relating to the board’s area but which are occluded to be identified. Then only the non-occluded pixels are overlaid with advertising by computer processing.

18. AIM says that the approach of the Patent is different. It says that instead of detecting the IR light from the board, the system in the patent detects light from the occluding object, and determines that it is indeed an occluding object by studying its “image property”. In particular, AIM says, the Patent relates to using a frequency-based filter to cut out light from the display board (which is arranged to be of known frequency), but to allow through the more varied radiation reflected by the occluding object.

19. So AIM says that Nevatie relates to dark occluding objects against a light board, the latter being detected, and the Patent relates to light occluding objects detected against a board which (because of the filtering) is dark in the relevant frequency range. I will refer for convenience to “light-on-dark” and “dark-on-light” in this judgment to reflect this, but I bear in mind that it is not the way the Patent’s claims express matters and that Supponor disputes the claim interpretation relevant to it.

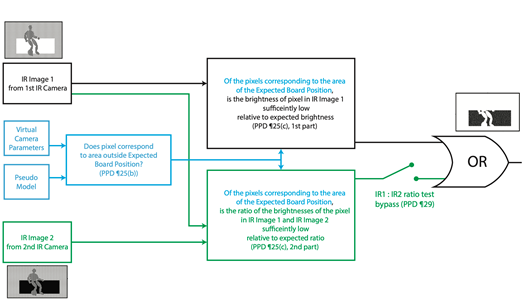

20. Supponor’s SVB System is more complicated. It uses two IR cameras (in fact two optical paths in what the lay person would call a single camera, but this does not matter).

21. The system works pixel by pixel for those pixels which are expected to be within the bounds of the display board.

22. One camera is used for a Nevatie-style dark-on-light approach. Low brightness (relative to a threshold) indicates a pixel where there is an occluding object. This is not said to infringe.

23. The other camera is sensitive to a different IR frequency from the first camera.

24. In some circumstances, when there is more ambient IR radiation, such as on a sunny day, the system looks at the ratio of the brightnesses of the pixel in the image from the first camera and in the image from the second camera. If there were a high ratio that would be consistent with no occluding object, and a lower ratio would be consistent with an occluding object. The Nevatie-style method is still used for the first camera, and the system concludes that there is an occluding object for a pixel where there is either low brightness in respect of the first camera, or a low ratio using both cameras’ results. AIM says there is infringement in these circumstances.
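The per-pixel logic outlined in paragraphs 22 to 24 can be pictured with a short sketch. It is an illustration of this simplified overview only and is not the SVB System's actual code: the function name, the brightness scale, both threshold values and the `use_ratio` flag are hypothetical, and the ratio is assumed to be the first camera's brightness divided by the second camera's.

```python
def is_occluded(first_ir, second_ir, use_ratio,
                brightness_threshold=0.5, ratio_threshold=2.0):
    """Classify one pixel lying within the expected bounds of the display board.

    first_ir and second_ir are the pixel's brightness in the images from the
    first and second IR cameras (hypothetical 0.0 to 1.0 scale).
    Both thresholds are invented for illustration.
    """
    # Dark-on-light test: the board should look bright to the first camera,
    # so low brightness is taken to indicate an occluding object.
    if first_ir < brightness_threshold:
        return True
    if not use_ratio:
        return False
    # Ratio test, assumed to be switched on when there is more ambient IR:
    # a high ratio is consistent with no occluding object, a lower one with one.
    if second_ir == 0:
        return False  # ratio is unbounded, which counts as "high"
    return (first_ir / second_ir) < ratio_threshold
```

The two tests are combined with a logical "or", matching the description that an occluding object is found where there is either low brightness in the first camera's image or a low ratio across both images.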

25. Supponor’s position is that the Patent’s claim 12 is not so broad as to cover what for the moment I will loosely call “mere brightness” of individual pixels. It says that the claim requires processing of what the occluding object (again loosely) “actually looks like”. This was referred to in a number of ways at trial (I will in general refer to “higher order” techniques or processing), and appropriate methods for it are described in the Patent. They generally involve considering multiple pixels together and comparing the image from a camera with the expected characteristics of things that might be observed. If that construction is right then, it is common ground, there is no infringement.

26. Supponor’s alternative position is that if the Patent’s claims are so broad as to cover mere brightness of individual pixels then they must include both light-on-dark and dark-on-light. On that basis they are obvious over Nevatie, Supponor says. For practical purposes this argument is one of pure construction, because if it is the right construction then the only other difference over Nevatie (displaying moving images on the board) is accepted to be obvious.

27. It is worth mentioning that Nevatie is not mentioned in the Patent and is not admissible as an aid to its construction.

28. AIM says that the Patent’s claims do cover mere brightness, but only light-on-dark. But it says that if it is wrong about that, it can amend to achieve that result. That is where the conditional amendments come in. AIM accepts that the Patent also covers higher order processing, but that is not its route to infringement.

29. On AIM’s construction, Supponor says it has two obviousness arguments.

i) The first is that it would be obvious to enhance Nevatie by adding a second “dark” IR channel, on a different frequency to the first, to do a better job. AIM accepts that that would hit the claim on its construction, but says that it was not obvious to do. This was called the Nevatie Plus argument.

ii) The second is that it would be obvious to do higher order processing on radiation from the occluding object. This was called the Nevatie-OD argument. For reasons I need not go into at the moment, this argument only matters (as I understand its position) if Supponor does not infringe. So it does not affect the overall result as between the parties.

30. I have already said that at trial AIM defended only a single method claim of the Patent, claim 12, and not the product claims (claim 1 and claim 13 being the main relevant ones).

31. AIM’s acceptance that claim 1 would not be defended came close to trial. At an earlier stage in the litigation, it had agreed that claims 1 and 13 would stand or fall together. Supponor says that the combined effect of these two admissions was that claim 12 was also invalid (and therefore the whole Patent) because its features, even though it is a method claim, match those of claim 13. This is what I have called the Promptu point. AIM says that it never admitted that claim 12 or the whole Patent was invalid, but if necessary would apply to withdraw the admissions. Supponor resists that. This point needs deciding even if I reject all the other attacks.

The witnesses

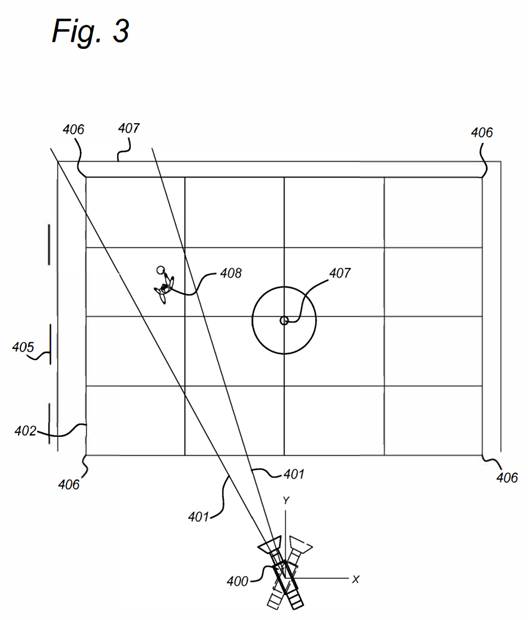

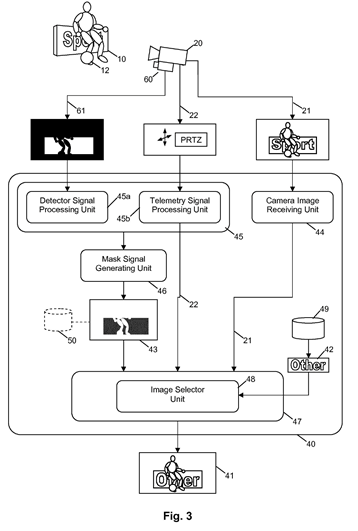

32. Each side called one expert. AIM’s expert was Dr Graham Thomas and Supponor’s was Prof Anthony Steed of UCL.

33. Supponor said that both experts were good witnesses. AIM on the other hand said that while Prof Steed had the necessary technical understanding to assist the Court, he was too academic in his background and approach.

34. I do not accept AIM’s criticism of Prof Steed. I agree that in fact his background is more in academia than Dr Thomas’, but in itself that is not material, and in any case he did have some industry experience from secondments and the like. AIM was also unable to point to matters where some lack of experience on Prof Steed’s part was significant. It pointed out in closing written submissions that he had not used a TV camera; it accepted that this was of no particular importance but said that there were “unknown unknowns” in the sense that one could not know which of Prof Steed’s opinions were affected by lack of relevant practical knowledge. I reject that. It is much too vague and anyway AIM had a full chance to test for the existence of such matters in cross-examination.

35. AIM also said that Prof Steed’s approach was overly abstract and “open-ended”. I do not accept this as a point directed at Prof Steed as a witness, but for reasons given below I think it is a valid criticism of the way that Supponor advanced its case.

36. I conclude that both witnesses were well-qualified and doing their best fairly to assist the Court. I found them to be good at explaining the technical issues and am grateful to them both.

The skilled person

37. The parties’ written opening submissions suggested that there was a dispute about the identity of the skilled person, but this faded away and the list of disputed issues did not include any point about it. Such point as there was seemed to boil down to whether the skilled person’s interest was only in live sports broadcasts where there was a need for overlaying advertisements, or also extended to studio applications. Prof Steed’s written evidence did include some matters concerned with studio-only applications but these were not part of the real arguments at trial or developed with Dr Thomas in cross-examination. Supponor’s written closing made clear that the cross-examination of Dr Thomas had been on the basis of his (Dr Thomas’) conception of the skilled person and that the studio point made no difference to the CGK, which in any event is not in dispute (see below).

38. So I can proceed on the basis that the skilled person is someone with an interest in cameras and associated displays and computer systems for overlaying advertisements at live events, in particular sporting events, and with relevant academic training, probably a computer science degree, and practical experience implementing computer graphics rendering and image processing. So far as it matters, the evidence was that this was a real field of work.

The common general knowledge

39. A joint document (the “ASCGK”), for which I am very grateful, showed the agreed CGK.

40. Although the parties said there was no CGK in dispute, the ASCGK contained two competing versions of the position on one topic, namely background removal, on the basis that although the experts agreed it was CGK, they did not agree about the extent to which the techniques available were regarded as a good basis for further action. So in a sense there was a dispute at that stage, but it faded away and by written closings no practical importance was attached to it.

41. In places the ASCGK went into more detail than is necessary as things have turned out, and in addition some aspects of the technology not relevant to the real disputes can be adequately understood without further underlying detail. So I have filleted out quite a lot of the document. What follows reproduces, with some editing, those parts which were important to the arguments, necessary for understanding, or the subject of significant discussion in the evidence at trial. The ASCGK used both past and present tenses (it frequently but not always used the present tense to describe a situation which existed at the priority date and has continued until the present). I have adjusted this in some but by no means all instances. I did not find it problematic and make no criticism; I mention it only in making it clear that what is referred to was CGK at the priority date whichever tense is used.

42. An important aspect of the argument on the Nevatie Plus obviousness attack was chroma-keying. This forms part of the agreed CGK, as set out below. I mention this because although there was no dispute about whether the technique was CGK, there was an important dispute about whether and how the skilled person would factor it into their thinking about Nevatie. By including the technique in the agreed CGK I am not prejudging that dispute.

Virtual graphics for TV broadcasting

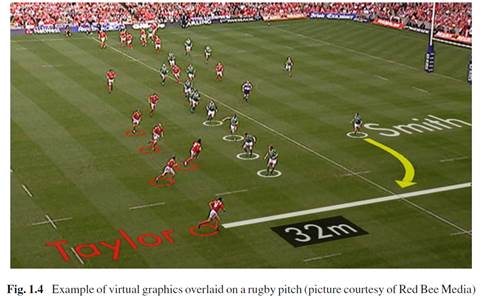

43. Virtual graphics were regularly used in TV programs and live broadcasting at the Priority Date. Virtual graphics are computer-generated graphics which are inserted into an image to appear to be part of the real scene. Applications ranged from simple overlays of the scores and time in a broadcast of a football match, or a newsfeed ribbon at the bottom of a television screen, to more complicated “virtual graphics” applications where the graphics appeared anchored to objects in the scene. Sports specific applications of virtual graphics were developed, for example to insert a first down line on an American football pitch or instant replays with sports analysis graphics overlaid. (Thomas 1 §37)

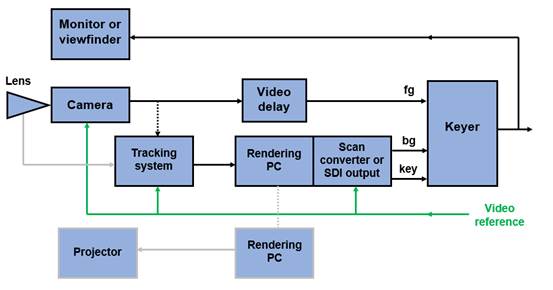

44. The skilled person would have a working knowledge of a typical live broadcasting system set-up used to insert virtual graphics in the television feed. A simplified flow diagram of a typical studio-based system is as follows:

Figure 1: The main elements of a virtual graphics system

45. The figure above illustrates a studio-based TV production system consisting of a single camera (in reality there might be multiple cameras). The camera is equipped with a zoom lens and a sensor to measure the zoom and focus length of the camera. In this example, the camera is fixed to a tracking system, which during runtime measures the position and orientation of the camera. The sensor transmits camera-positioning information to the “rendering PC”, which is essentially a PC with a graphics card running graphics rendering software. Rendering is the process by which graphics are produced ready to be later inserted into the image of the real scene to produce a composite image. The rendering PC renders the virtual graphics for the scene using the camera parameters measured by the sensor on the camera.

46. The rendered image is usually then converted to broadcast video format and output, and comprises two signals:

i) a signal with the graphics that will be inserted into the broadcast feed; and

ii) a “key” signal that indicates the areas in the image which are to be overlaid with the graphics in the broadcast feed. (Thomas 1 §41)

47. The purpose of the “video delay” is to ensure that the video signal from the camera accounts for the image processing undertaken.

48. The “keyer” is a piece of software or hardware that determines where the virtual graphics should appear in the image and combines the video signal and graphics into a single stream using the key signal. (Thomas 1 §44)
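The roles of the video delay and the keyer described in paragraphs 46 to 48 can be sketched as follows. This is a minimal illustration under stated assumptions: the delay is taken to be a fixed number of frames matching the rendering latency, the key is taken to be binary (1 for graphics, 0 for camera video), and all names and pixel values are hypothetical.

```python
from collections import deque

def delayed(frames, delay):
    """Yield each camera frame `delay` frames late (a simple video delay)."""
    buffer = deque()
    for frame in frames:
        buffer.append(frame)
        if len(buffer) > delay:
            yield buffer.popleft()

def key_frame(video, graphics, key):
    """Combine one video frame and one graphics frame using a key signal.

    All three are equal-length lists of pixel values; key is 1 where the
    graphics should replace the camera video and 0 elsewhere.
    """
    return [g if k else v for v, g, k in zip(video, graphics, key)]

# Hypothetical example: rendering is assumed to take 2 frames,
# so the camera video is delayed by 2 frames before keying.
camera = [[10, 10, 10], [20, 20, 20], [30, 30, 30], [40, 40, 40]]
# Graphics and key for camera frames 0 and 1, available 2 frames late.
rendered = [([99, 99, 99], [0, 1, 0]), ([88, 88, 88], [1, 0, 0])]

output = [key_frame(v, g, k) for v, (g, k) in zip(delayed(camera, 2), rendered)]
```

With the delay in place, each camera frame meets the graphics and key rendered for that same frame rather than for a later one.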

49. The use of virtual graphics in TV broadcasting was initially limited by the requirement for high-powered graphics rendering hardware, but by the Priority Date, much of the image-processing could be done on a conventional PC or laptop. (Thomas 1 §45)

Other relevant hardware

Filters

50. A filter for a camera is a material, e.g. coloured glass, that lets through only a subset of the wavelengths to which the camera sensor is sensitive. Such filters may be termed:

i) High-pass filter: a filter which lets through wavelengths over a given wavelength.

ii) Low-pass filter: a filter which lets through wavelengths under a given wavelength.

iii) Bandpass filter: a filter which only lets through a range (or band) of wavelengths. The range of wavelengths that is let through is referred to as the pass-band.

51. Such filters were commercially available with a range of properties. Filters were available which would block or transmit wavelengths of infrared and ultraviolet light as well as visible light. (Steed 1 §§66-67)

Electronic Displays

52. Liquid Crystal Display (“LCD”) panel displays display digital images by using the interaction of polarised light with liquid crystals to modulate the amount of light which can pass through individual pixels of the display.

53. Light Emitting Diode (“LED”) panel displays have been used for many years as large-format signage (for example, in train stations, stadiums, and sports events). Typical LEDs contain semiconductor material which emits light in a relatively narrow band of wavelengths (such as red, green or blue) when an electrical current flows. LED panel displays can display moving and still digital images. LED displays typically contain red, green, and blue (“RGB”) LEDs, which are adjusted in intensity to display images (Steed 1 §§160-161).

54. LEDs which emitted infrared light were available. (Steed 1 §246(b)(iii))

The camera and lens calibration

55. Before a broadcast TV camera is ready for broadcast, it must be calibrated to determine the geometric positioning of the camera used to produce the video feed. Camera calibration is an essential step in many computer vision applications as the camera positioning data is essential to understanding the relationship between the real-world and how virtual graphics are to be inserted into the image. (Thomas 1 §47)

56. Calibration of a zoom lens typically involves calibrating it at a range of different zoom and focus settings to obtain the necessary camera parameters. The broadcast cameras will have a sensor which measures the zoom and focus length of the camera. Calibration may be carried out by using images of the real-world scene with reference points at a known distance to calibrate the cameras or using a calibration data chart. (Thomas 1 §53)

57. Further camera calibration is undertaken to calculate parameters including the position and orientation of the camera in the scene. At the Priority Date, virtual graphics systems for sports broadcasting utilised features or objects in the scene in known or fixed positions to calibrate fixed or moving cameras. For example, the pitch lines in the football stadium are at fixed positions in the natural scene and could be used to calibrate the camera. Further calibration steps may be required during runtime. (Thomas 1 §54)

Tracking the position and orientation of the camera

58. As explained above, in order to understand the position of an object in an image and where the virtual graphics should be inserted, it is necessary to track the position and orientation of the camera in runtime. Camera tracking also ensures that the virtual graphics are synchronised with the camera motion and the correct orientation of the virtual object in each image frame of the video feed is maintained. Additionally, camera positioning sensors are integral in calculating the zoom length of the camera to ensure that the virtual graphics are to scale with the image in the frame. Tracking is the process by which the camera position parameters are estimated at runtime. This is achieved by measuring the camera position at the same time as each video frame is captured. (Thomas 1 §55)

59. Tracking could be done using mechanical or optical means.

Image Processing

60. The term ‘image’ is frequently used to refer to the data or information encoding or representing an image, rather than the viewable image itself. (Thomas 1 §42)

61. Digital images can be processed in a variety of ways. The first type is simple manipulation of the colours by scaling the values. These types of function are built-in to most image processing software. (Steed 1 §73)

62. A greyscale image can be converted to black and white by thresholding. All pixels with a level less than a threshold are set to black, and all pixels at or above the threshold are set to white. (Steed 1 §74)
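Thresholding as described in paragraph 62 can be illustrated with a few lines of code. This is a minimal sketch, assuming an 8-bit greyscale image stored as rows of values from 0 to 255; the function name and the sample data are hypothetical.

```python
def threshold(grey, level):
    """Convert a greyscale image (rows of 0-255 values) to black and white."""
    black, white = 0, 255
    # Pixels below the level become black; pixels at or above it become white.
    return [[black if p < level else white for p in row] for row in grey]

image = [[12, 127, 128],
         [200, 64, 255]]
bw = threshold(image, 128)
```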

Spatial frequency techniques

63. A wide variety of image processing operations use spatial filtering. Spatial frequency difference techniques are a type of image analysis. Spatial frequency is not related to the frequency of light, rather it is a measure of the fineness of detail, texture or pattern, in an image. The units of spatial frequency are cycles per pixel, measured in the image plane. An image with low spatial frequency would have gradual or widely spaced changes between light and dark regions, for example, gradually changing colours or wide stripes. On the other hand, narrow, high contrast stripes would have a high spatial frequency. (Thomas 1 §163-164)

64. Spatial frequency analysis techniques may utilise descriptors. A descriptor is a shorthand way of recording the properties of something. In this case, each pixel has a descriptor that is a multi-dimensional vector containing information that describes its local neighbourhood. For example, the descriptor may include information about whether the pixel is at or near an edge or a corner; whether it has a first order gradient; its shape, colour or texture; or motion information. An important characteristic of spatial frequency descriptors is that they take into account the neighbourhood of the pixel, rather than just the pixel itself. The descriptors should also be invariant to matters that are important for the application, such as rotation, orientation, change of scale and small changes in brightness. (Thomas 1 §166)

65. Spatial filtering techniques need to be used with care if the accuracy of the resulting image is important. Whilst these techniques are useful for generally cleaning up an image it cannot be guaranteed that important details will not be moved or lost. In many cases, it is not possible for the algorithms to distinguish between artefacts and features that are genuinely a part of the scene. (Thomas 2 §13)

Cut and paste and binary masks

66. Multiple digital images can be composed simply by copying pixels from one image into another (Steed 1 §69). To copy more complex shapes, a process known as binary masking is used. A mask is created which has only black and white pixels. Essentially, when copying any pixel from the overlaying image to the composite image the mask is queried and if it is black, the pixel is not copied, if it is white the pixel is copied.
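The binary masking step in paragraph 66 can be sketched as below. It is an illustration only, assuming images are stored as rows of pixel values and that white is encoded as 255 and black as 0; the names are hypothetical.

```python
WHITE = 255  # assumed encoding: 255 means "copy this pixel", 0 means "leave it"

def paste_with_mask(base, overlay, mask):
    """Copy overlay pixels into a copy of base wherever the mask is white."""
    out = [row[:] for row in base]  # leave the original image untouched
    for y, mask_row in enumerate(mask):
        for x, m in enumerate(mask_row):
            if m == WHITE:
                out[y][x] = overlay[y][x]
    return out

base = [[1, 1, 1],
        [1, 1, 1]]
overlay = [[9, 9, 9],
           [9, 9, 9]]
mask = [[0, 255, 0],
        [255, 255, 0]]
composite = paste_with_mask(base, overlay, mask)
```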

67. In more complex copying an alpha mask is used. Sometimes, especially when operating on images from real cameras, objects overlap on pixels. Thus the colour of a pixel can contain a contribution from the colour of a foreground object and a contribution from the colour of a background object. If a binary mask was used, the sharp boundary would be noticeable. Thus in a process known as alpha-matting a foreground object can be identified by creating an alpha mask that indicates, as a proportion in the range [0,1], how much each pixel corresponds to background or foreground. That is, 0 would be background, 1 foreground, and 0.5 half foreground and half background. (Steed 1 §71)

68. In practice creating the alpha mask can be a complex exercise. Tools to create alpha masks are common in offline image-editing and video-editing tools. It is also possible to approximate an alpha mask. One way would be to first identify all pixels that are definitely background and all pixels that are definitely foreground. This leaves some unknown pixels, which can simply be estimated by the ratio of the distance, in pixel space, from foreground to background. (Steed 1 §72).
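One reading of the approximation in paragraph 68 is sketched below for a single row of pixels. It is an assumption, not something stated in the evidence, that the "ratio of the distance" means the distance to the nearest definite background pixel divided by the sum of the distances to the nearest definite foreground and background pixels. The labels 'F', 'B' and '?' and all function names are hypothetical, and the row must contain at least one pixel of each definite kind.

```python
def approximate_alpha(labels):
    """Estimate alpha for one row labelled 'F' (foreground), 'B' or '?'."""
    fg = [i for i, t in enumerate(labels) if t == 'F']
    bg = [i for i, t in enumerate(labels) if t == 'B']
    alpha = []
    for i, t in enumerate(labels):
        if t == 'F':
            alpha.append(1.0)
        elif t == 'B':
            alpha.append(0.0)
        else:
            d_fg = min(abs(i - j) for j in fg)  # pixels to nearest foreground
            d_bg = min(abs(i - j) for j in bg)  # pixels to nearest background
            alpha.append(d_bg / (d_fg + d_bg))  # nearer foreground -> nearer 1
    return alpha

def composite(foreground, background, alpha):
    """Blend two rows: alpha 1 takes the foreground, alpha 0 the background."""
    return [a * f + (1 - a) * b for f, b, a in zip(foreground, background, alpha)]

alpha = approximate_alpha(['B', 'B', '?', '?', '?', 'F', 'F'])
blended = composite([100] * 7, [0] * 7, alpha)
```

The unknown pixels receive intermediate values, so the blended row ramps from background to foreground instead of showing the sharp boundary a binary mask would give.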

Background Removal

69. Background removal is a common problem in image processing. There is a wide variety of techniques depending on the situation. A relatively easy case is if there is a static background and a reference image can be taken of the background without the foreground object.

70. In practice, the background image might be changing slowly. Thus a common technique would be to keep a sequence of images of the background, and then estimate the background as an average or median of the video value of pixels at each position. Then the foreground might be defined by the distance of the current colour of the image from the estimated background.
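The technique in paragraph 70 can be sketched as follows. This is a simplified illustration, assuming greyscale frames held as flat lists of pixel values, the median as the estimator, and a hypothetical fixed distance threshold.

```python
from statistics import median

def estimate_background(frames):
    """Per-pixel median over a sequence of frames (each a flat list)."""
    return [median(values) for values in zip(*frames)]

def foreground_mask(frame, background, max_distance):
    """Mark pixels whose value is far from the estimated background."""
    return [abs(p - b) > max_distance for p, b in zip(frame, background)]

# Hypothetical history: the last frame contains a brief bright intrusion.
history = [[50, 52, 51],
           [51, 50, 53],
           [49, 51, 200]]
background = estimate_background(history)
mask = foreground_mask([50, 120, 52], background, max_distance=20)
```

Using the median rather than the average keeps the brief intrusion in the third pixel from pulling the background estimate upwards.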

71. Distance between two colours in a colour space can be defined in a number of different ways, but a simple one is the Euclidean distance, which is the square root of the sum of the differences squared. That is, if two colours are $(R_1, G_1, B_1)$ and $(R_2, G_2, B_2)$ then the distance is $\sqrt{(R_1 - R_2)^2 + (G_1 - G_2)^2 + (B_1 - B_2)^2}$. In practice, distance might be estimated in a different colour space.
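
The Euclidean colour distance of paragraph 71, and its use to separate foreground from an estimated background, might be sketched as follows. The threshold value and the function names are assumptions made for illustration; the evidence does not specify them.

```python
import math


def colour_distance(c1, c2):
    """Euclidean distance between two colours given as (r, g, b) tuples."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(c1, c2)))


def foreground_mask(image, background, threshold):
    """Mark a pixel as foreground when its colour is further than
    `threshold` from the estimated background colour at that position."""
    return [colour_distance(p, b) > threshold
            for p, b in zip(image, background)]


background = [(11, 200, 9), (12, 199, 11)]
image = [(11, 200, 9), (250, 0, 0)]
mask = foreground_mask(image, background, threshold=30)
```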

72. More sophisticated models of colour estimation might use gaussian models to estimate the colour. Thus there is an expected distribution of colours modelled by a mean and standard deviation. A gaussian mixture model might be used if there is a background that changes between different colours: different gaussian distributions cover different parts of the colour space.
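
A single-Gaussian version of the model in paragraph 72 might be sketched as follows. This is a simplified illustration, not a Gaussian mixture model: it treats one channel of one pixel, fits a mean and standard deviation to past background samples, and flags a new value as foreground if it lies more than `k` standard deviations from the mean. The parameters `k` and `min_sigma` are assumptions introduced here.

```python
from statistics import mean, pstdev


def fit_gaussian(samples):
    """Return (mean, standard deviation) of past background samples."""
    return mean(samples), pstdev(samples)


def is_foreground(value, mu, sigma, k=3.0, min_sigma=1.0):
    """Flag a value as foreground if it is more than k standard deviations
    from the background mean. min_sigma guards against a zero deviation
    when the background samples happen to be identical."""
    return abs(value - mu) > k * max(sigma, min_sigma)


samples = [100, 102, 98, 101, 99]   # past values of one channel at one pixel
mu, sigma = fit_gaussian(samples)
```

A mixture model would keep several such (mean, deviation) pairs per pixel and test a new value against each of them.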

73. If the camera is moving, then there are a variety of background estimation processes, some of which are based on tracking the camera and thus estimating how previous images can be distorted to fit the expected view, see below.

Object Identification

74. Object identification is a common operation in computer vision. It is an unsolved problem for general objects under general viewing conditions. That is, given a particular object, it may not be possible to identify it given any camera move or object illumination. This problem can be made practically impossible if there is not sufficient data about the object in the image (e.g. attempting to identify a face if there are only 4 pixels of that face in the image). However, even if the object is represented by a significant number of pixels in the image, there can be a lot of ambiguity because objects can look very different from different angles (e.g. consider the different images of a mug as the camera moves around the object), and many objects are very similar in overall appearance (e.g. faces). (Steed 1 §115)

75. In some computer vision systems, the object to be detected is a light source or other source that will appear bright in the image and so can be detected by thresholding at an appropriate level. Alternatively if the object has certain colour properties the computer vision system can be programmed with, or can be taught, the colours that it should seek. (Steed 1 §116)
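
Detection by thresholding, as described in paragraph 75, might be sketched as follows. The sketch assumes a greyscale image held as a list of rows of brightness values; the threshold level and the function names are hypothetical.

```python
def bright_pixels(gray, level):
    """Return (x, y) coordinates of pixels at or above the threshold level."""
    return [(x, y)
            for y, row in enumerate(gray)
            for x, value in enumerate(row)
            if value >= level]


def bounding_box(points):
    """Smallest axis-aligned box (x_min, y_min, x_max, y_max) around points."""
    if not points:
        return None
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (min(xs), min(ys), max(xs), max(ys))


gray = [
    [10, 12, 11, 9],
    [10, 250, 240, 9],
    [11, 245, 12, 10],
]
points = bright_pixels(gray, level=200)
box = bounding_box(points)
```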

76. Optical tracking technology can be used to track objects in a scene over which virtual graphics can be inserted. This technology usually involves an object with a set of markers, which enables the camera to identify the location and the orientation of the object in a scene. The camera is designed to identify those markers. (Thomas 1 §59)

77. More complex identification might rely on other features such as the presence of lines or corners in the image. A large class of schemes for matching an object to a template are model-based, where a model exists of the object.

78. Whilst object tracking is fairly straightforward in principle, in practice it can be harder, especially if highly reliable results are needed in real-time in a fairly unconstrained situation. For example, the key features on the object being tracked may become occluded, disappear from view, or not appear with sufficient contrast from the background. Sometimes the background might contain a pattern that can be confused with the object being tracked. (Thomas 2 §27)

Image Feature Identification

79. A large class of algorithms focuses on tracking specific image features. Image features are characteristic statistics of spatial regions of the image. Features might include regions that have certain frequencies of change in colour, e.g. a region that contains a pattern that repeats. The latter is sometimes called a texture feature. (Steed 1 §133)

80. Features can be attributed to whole regions (sometimes called blobs), areas around lines or curves in the image, or an area around a specific pixel in the image. The last of these is very commonly used in tracking applications: if a pixel has identifiable or perhaps even unique characteristics in one image, then it might be identifiable in another image. (Steed 1 §134)

Foreground Object Detection

81. Some feature detectors can detect features of objects, but not whole objects. Ideally one would be able to identify whole objects, potentially for the purposes of identifying them as foreground objects. The process would thus output the complete set of pixels that comprised the object, perhaps as a binary mask. One can see that corner detectors or edge detectors would only identify certain parts of an object. (Steed 1 §143)

Stereo cameras

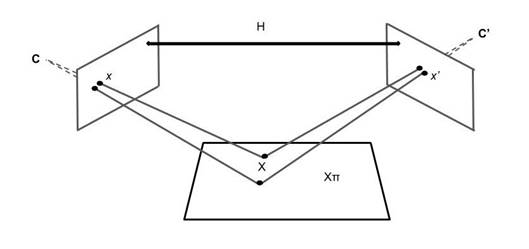

82. There is a strong relationship between two cameras that image a plane in the real world. This is illustrated in the following figure. Given a point X on a plane in the world, the points x and x’ in cameras C and C’ are related by a simple 3x3 matrix, a homography (Steed 1 §109).

83. The homography can be estimated by identifying the correspondence between any four points in the image of camera C and the same four points in the image of camera C’. The four corners of a rectangle as seen in both images are a common choice for this. (Steed 1 §110)
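
The four-point estimate described in paragraph 83 might be sketched as follows. This is an illustrative sketch under stated assumptions: the bottom-right entry of the 3x3 matrix is fixed at 1 (which is valid so long as the origin of the first image does not map to infinity), no three of the four points are collinear, and the resulting eight linear equations are solved by plain Gaussian elimination. All function names are hypothetical.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def estimate_homography(src, dst):
    """Estimate H (3x3, with H[2][2] = 1) mapping four src points to dst."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # Two linear equations per correspondence.
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(H, point):
    """Map a point through H, dividing by the homogeneous coordinate."""
    x, y = point
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    u = (H[0][0] * x + H[0][1] * y + H[0][2]) / w
    v = (H[1][0] * x + H[1][1] * y + H[1][2]) / w
    return (u, v)


# Corners of a rectangle in camera C and the same corners seen in camera C'.
src = [(0, 0), (4, 0), (4, 2), (0, 2)]
dst = [(10, 20), (50, 25), (45, 60), (12, 55)]
H = estimate_homography(src, dst)
```

In practice more than four correspondences and a robust fitting method would normally be used, since measured point positions are noisy.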

84. If there are two images of the same plane from two different cameras, or two different positions of one camera, then there is a homography that maps both images to ortho-rectified images. Pixels in the plane should be substantially the same. An object that is in front of this plane will be in a different position in these two images, due to motion parallax, or might not even appear in one image, depending on the relative positions (ignoring the case where it is in front of the plane, but in neither camera’s view). Thus, this object would occlude the plane, and this occlusion region would be different in the two images. In many cases, this allows the foreground object to be detected because the colours of the two images are different at equivalent pixel locations. This can form the basis of a stereo background removal technique. (Steed 1 §114)
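
The comparison described in paragraph 84 might be sketched as follows. The sketch assumes both images have already been warped to the same ortho-rectified view of the plane, so that equivalent pixel locations can be compared directly; the threshold and the function name are hypothetical.

```python
def stereo_foreground_mask(rectified_a, rectified_b, threshold):
    """Flag pixels whose colours differ between two rectified views.

    Each input is a list of rows of (r, g, b) tuples of identical size.
    A True entry means the two views disagree at that location, which
    suggests something in front of the plane occludes it in at least
    one of the views.
    """
    mask = []
    for row_a, row_b in zip(rectified_a, rectified_b):
        mask.append([
            sum((p - q) ** 2 for p, q in zip(pa, pb)) ** 0.5 > threshold
            for pa, pb in zip(row_a, row_b)
        ])
    return mask


view_a = [[(0, 120, 0), (0, 120, 0)],
          [(0, 120, 0), (200, 30, 30)]]   # occluder visible in view A only
view_b = [[(0, 121, 0), (0, 120, 0)],
          [(0, 120, 0), (0, 119, 1)]]
mask = stereo_foreground_mask(view_a, view_b, threshold=25)
```

Note that a difference mask of this kind marks the occlusion regions of both views together; deciding which view contains the occluder at a given pixel needs further information.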

Camera motion and optical flow

85. One technique that is common in computer vision is to track the camera’s own motion from an image sequence. Another is to determine the “optical flow” in the image. The two problems are related: if a camera is moving, then from frame to frame in the image sequence, objects will appear to move. From the patterns of movement (or flow) the movement of the observer (i.e. camera) can be estimated. (Steed 1 §129)

Keying

87. Broadcasting applications typically used a chroma-keyer (also referred to as a colour-based segmentation algorithm in computer vision applications). (Thomas 1 §61)

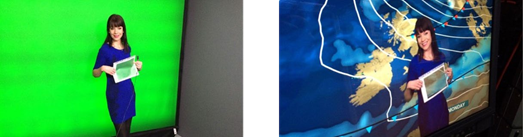

88. In computer vision applications, images are usually considered by reference to red, green and blue values (“RGB”). However, broadcast video usually converts an RGB image of the video feed into luminance (brightness) and chrominance (colour) signals. Chroma-keying is the process of classifying each pixel as either background or foreground using the chrominance of a specific colour. Traditionally, chroma-key used either green or blue as the key signal to identify the background of an image. However, other colours could be used, as well as signals in the non-visible light range (e.g., infrared, which might be useful in low lighting conditions or to avoid problems such as background colour spill). The skilled person would be aware of the main concepts of infrared chroma-keying, which had been developed by the Priority Date. For example, the skilled person would be aware of Paul Debevec's work on the Light Stage, which was a studio-based system that used infrared chroma-keying to composite an actor into a virtual background without affecting the illumination of the actor. (Thomas 1 §62)

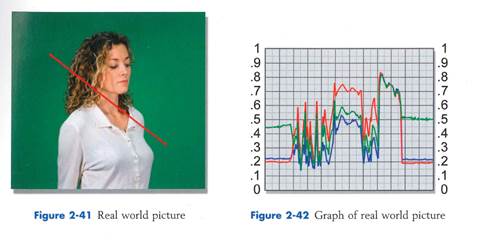

89. Chroma-keying is typically used where the whole of the background is intended to be a virtual overlay, although it can be used for narrower applications. A well-known example of chroma-keying is that of a green screen behind a weather presenter. The green colour of the screen acts as the keying colour/chrominance. In the image of the studio, each pixel in the image is categorised as either the background (e.g. green screen) or as foreground (the weather presenter). The chroma-keyer will not generate a key signal for areas that it identifies as foreground and therefore should sit in front of the virtual object that will be inserted in the background. The weather map is subsequently keyed into the areas of the green screen which are not obstructed by the presenter. (Thomas 1 §63, see also Steed 1 §§87-89). Chroma key is useful not only for removing background, but for inserting graphics anywhere into an image. (Steed 1 §93)

90. Each pixel may have a value for red, green and blue or may be represented in terms of hue, saturation and brightness. For example, the image below shows a person against a green screen, together with a slice-graph representing the pixels along the red axis in the left-hand image.

Figure 4: Taken from “Digital Compositing for Film and Video,” Wright (Focus Press, 2002)

91. Each pixel has certain levels of each of red, green and blue and these levels can affect whether the pixel is classified as foreground or background. An example of a well-known key generation formula for making this determination is:

key = G - MAX(R, B)

This formula outputs: (i) a high value for the key signal in areas of saturated green; (ii) zero in areas of pure white; and (iii) less than zero in areas that predominantly contain red and/or blue. The output value would usually be scaled and clipped to give a key (or mask, or alpha) value ranging from 0 for foreground to 1 for background. It is possible to set certain thresholds to allow for reasonably accurate identification of the background and to allow for natural variations in the colour of the real surface of the background (Thomas 1 §64).
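
The key generation formula in paragraph 91, with the scaling and clipping it describes, might be sketched as follows. The `low` and `high` scaling thresholds and the `composite` helper are assumptions added for illustration; only the formula key = G - MAX(R, B) and the 0 (foreground) to 1 (background) convention come from the text above.

```python
def raw_key(r, g, b):
    """Unscaled key: high for saturated green, zero for white,
    negative where red and/or blue dominate."""
    return g - max(r, b)


def key_value(r, g, b, low=0.0, high=255.0):
    """Scale the raw key between two thresholds and clip to [0, 1],
    giving 0 for foreground and 1 for background."""
    k = (raw_key(r, g, b) - low) / (high - low)
    return min(1.0, max(0.0, k))


def composite(camera_pixel, virtual_pixel, key):
    """Blend the virtual graphic into the camera pixel where key is high."""
    return tuple(key * v + (1.0 - key) * c
                 for c, v in zip(camera_pixel, virtual_pixel))


green_screen = (0, 255, 0)
presenter = (200, 50, 30)
graphic = (0, 0, 255)

background_out = composite(green_screen, graphic, key_value(*green_screen))
foreground_out = composite(presenter, graphic, key_value(*presenter))
```

With these inputs the green-screen pixel is replaced entirely by the graphic and the presenter pixel is left untouched. Narrowing the gap between `low` and `high` gives a harder key, while widening it gives softer edges.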

92. In some sports applications at the Priority Date, grass pitches were used as the “green screen” for the chroma key colour. The keyer would work out whether each pixel in the captured image of the pitch was either background (the grass pitch) or foreground (an occluding player or ball). Chroma-keying would often be done manually by a keying technician who would adjust the colours and thresholds throughout the broadcast when the lighting conditions (such as cloudy to sunny) or other conditions changed. Figure 5 illustrates the use of a traditional green chroma-key used in virtual graphics in a rugby match using the Piero sports graphics system (discussed below). (Thomas 1 §65)

Figure 5 - Example of virtual graphics overlaid on a rugby pitch

93. The skilled person would be aware of several considerations when using chroma keying in live broadcasting in a studio:

i) Lighting and maintaining a clean background in the studio - if the presenter casts a shadow, it is difficult to identify a key on the shadow. Shadows and lighting require careful planning regarding the position of lights and camera.

ii) It may be difficult to accurately distinguish the background from transparent or reflective surfaces (such as the presenter’s hair, glasses or shadow) or blurred parts of the image (for example due to camera motion or de-focused edges).

iii) The chroma-key will not work if the foreground is the same colour as the background.

iv) Difficulty achieving a high-quality output image may require additional image-processing to clean up the edges of the foreground, such as removing background colour ‘spill’ through hair. (Thomas 1 §66)

94. Many of these issues could be addressed by careful planning of the lights and camera in a studio and the broadcaster would have control over the clothing/appearance of the programme presenter. Furthermore, it is possible to set certain thresholds for level of saturation and brightness to identify the softer edges between the foreground and background pixels more accurately. (Thomas 1 §67)

95. Live sports broadcasting presents a number of further considerations which could impact the effectiveness of the chroma-key:

i) Much of the external environment is out of the control of the system developer, which includes interference from the light of the scene (including by weather), the background, appearance of players, behaviour of the crowd (e.g. flares, pitch invasions and banners).

ii) Control of the colours in a scene is outside the system developer’s control. For example, it may be that the football shirts of a team are a similar colour to the pitch.

iii) In a fast-paced sports broadcast, blurring of the camera image would have been a more frequent issue which was difficult to fully address with existing chroma-key technology. Rapid movement and blurring could result in small fast-moving objects not being reliably keyed.

iv) The demand for a high-quality and accurately keyed image is higher for live sports applications than for the use of virtual graphics in a studio, where audience expectations of production values are often lower and the use of virtual graphics (such as a weather map) is expected. (Thomas 1 §68)

96. In studio-based applications an arbitrary colour could be chosen (not necessarily blue or green) and the key can be adapted so that it accommodates the natural variations in the colour of the background surface by allowing the user to set certain thresholds for aspects such as saturation and brightness. However, in live sports broadcasting the choice of key colour is limited to the colours in the real-world background (and control over that real world background is limited). For example, a football pitch cannot easily be made to appear a uniform, green colour regardless of changing weather conditions, shadows and mud etc. (Thomas 1 §69)

Virtual studio

97. While chroma-key establishes one way of compositing images from multiple sources together, the combination of camera tracking and real-time computer graphics enables computer graphics elements to be composited in such a way that they appear to be an element of the real world. (Steed 1 §124)

98. In TV, real-time compositing is sometimes known as a virtual studio. The integration of real-time graphics is more challenging. The camera must be tracked, then a virtual model aligned with the real model using virtual cameras, while the areas to be used from real and virtual footage are identified by a mask. (Steed 1 §126)

Rendering

Virtual Production around the Priority Date

100. Virtual graphics were used in a variety of television applications around the Priority Date. The skilled person would either be familiar with these specific examples of virtual graphics in sports or would have been familiar with the techniques used in these examples. (Thomas 1 §72)

The Piero sports graphics system

101. Piero is a system for producing 3D graphics to help analyse and explain sports events, for use by TV presenters and sports pundits. It has been used by the BBC since 2004 on Match of the Day, where it was initially used to highlight and track football players and draw off-side lines on the pitch. When launched in 2004, the system initially relied on PTZ data from sensors on special camera mounts. In 2005, the system was upgraded to use image processing techniques to track the camera movement by identifying lines on the pitch. (Thomas 1 §73)

102. In 2009, a new image-based camera tracker was implemented that could use arbitrary image features (not just lines) to compute the camera position and orientation, allowing the system to be used on a wider range of sports such as athletics. Further developments before the Priority Date included an intelligent keying system, to improve the ability of the system to distinguish the colour of athletes and background. This was particularly useful for distinguishing between sand and skin when placing graphics on a long-jump pit or in beach volleyball. (Thomas 1 §74)

103. Piero was still in popular use by the Priority Date (and as of 2011 was in use in over 40 countries around the world). (Thomas 1 §75)

‘1st and Ten’ and ‘First Down Line’

104. The skilled person would have been aware of the development of the ‘1st and Ten’ system which used virtual graphics to enhance the audience’s understanding/enjoyment of American football games. The system was primarily used to insert graphics which represented the yard line needed for a touchdown onto the pitch using a traditional chroma-keying method. A 3D model of the pitch would be used to calibrate the cameras before the match. (Thomas 1 §76)

Figure 6: The yellow line graphic is inserted to illustrate the yard line

105. The typical set up involved cameras with sensors which monitored the camera’s position during the game (i.e. PTZ data). The camera tracking data is used to generate information regarding position of the camera, which in turn would be used to work out where the yard line should be inserted in the incoming video. A chroma-key was used to distinguish the (green) pitch from the players to ensure that the yellow line would align with the background. The yellow line would be inserted into the image and the feed is then sent to the production truck in real-time. (Thomas 1 §77)

106. Known issues with this early system included the inability of the chroma-key to distinguish between foreground and background in certain instances. For example, if the colour of the players’ clothing was similar to the green of the grass, then the chroma-key could not distinguish between the foreground player and the grass and the yellow line would overlay the players. Other issues were if the pitch became very muddy and the “green screen” of the pitch was lost. For example, the muddy areas would be identified by the keyer as foreground. (Thomas 1 §78)

The Patent

107. The priority date is 13 May 2015; entitlement to priority is not challenged.

108. There was little dispute about the main features of the system that the Patent teaches; rather, the disputes centred around detailed points said to go to claim interpretation.

109. The Patent’s system can be understood from Figures 1 and 3:

110. Figure 1 is a block diagram of the system and Figure 3 is a schematic picture of a camera of the system in a stadium with the position of various features such as the corners of the pitch and advertising display boards marked.

111. As is explained at [0009] and [0012], the system uses a model of the real world which includes things such as the corners and boards.

112. At least one camera is required; Figure 1 shows two. It is explained later (at [0022]) that detection of radiation other than visible light, such as near-IR, IR or UV, is contemplated.

113. [0013] explains as follows:

[0013] In operation, the camera 400 captures a series of images and transmits them to the camera image interface 3, which receives them and stores them (at least temporarily) in the storage 4. The camera 400, and/or additional devices cooperating with the camera 400, generate camera parameters, such as X, Y, and Z coordinates of the camera and orientation parameters and zoom parameters, and transmit them to the camera parameter interface 2 which forwards the received camera parameters to the positioner 8, possibly via the storage 4. The positioner 8 positions the overlay surface in the captured image. That is, when an overlay surface 407 is in the field of view 401 of the camera 400, the overlay surface is captured in the captured image and the positioner determines where in the captured image the overlay surface is, based on the coordinates of the overlay surface in the real world model 5 and the camera parameters.

114. Camera parameters such as this were CGK.

115. [0013] goes on to explain that the detector 9 detects whether there is an occluding object in the way of the “overlay surface”, which in the embodiments is a display board. The detail of this description is relevant to the claim interpretation issues and I return to it there. If there is an occluding object then the overlayer 10 works out which parts of the board are not occluded and replaces them with the “overlay image”, such as an alternative advertisement.

116. [0015] makes clear that the system, while explained by reference to a single image, can also be used for a sequence of images, such as video.

117. Following on from this general explanation, the Patent gives further teaching about detecting occluding objects. Again, I will return to this in more detail when I come to claim interpretation, but for present purposes I point out that there are some general matters covered in the passage down to [0021], and then three more detailed sections:

i) “Detection of the occluding object: Stereo image” at [0033], which picks up from a general pointer at [0021];

ii) “Detection of occluding objects using active boards” at [0034]-[0035];

iii) “Detection of Occluding objects using spatial frequency differences” at [0036] to [0043] which covers three different types of algorithm.

Claims in issue

118. The only claim remaining in issue is claim 12. Three versions are in play:

Claim 12 - As Granted

| Feature | Text |
| --- | --- |
| 12 | A method of digitally overlaying an image with another image, |
| 12.1 | comprising creating (200) a model of a real world space, |
| 12.1.1 | wherein the model includes an overlay surface to be overlaid with an overlay image, |
| 12.1.1.1 | wherein the overlay surface in the model represents a display device in the real world, |
| 12.1.1.2 | wherein the display device is configured to display a moving image on the display device in the real world by emitting radiation in one or more pre-determined frequency ranges; |
| 12.2 | identifying (201) camera parameters, which calibrate at least one camera with respect to coordinates of the model; |
| 12.3 | capturing (202) at least one image with respective said at least one camera substantially at the same time, said at least one captured image comprising a detection image, |
| 12.3.1 | wherein the camera used to capture the detection image is configured to detect radiation having a frequency outside all of the one or more predetermined frequency ranges and distinguish the detected radiation outside all of the one or more pre-determined frequency ranges from radiation inside the one or more pre-determined frequency ranges; |
| 12.4 | positioning (203) the overlay surface within said at least one captured image based on the model and the camera parameters; |
| 12.5 | detecting (204) an occluding object at least partially occluding the overlay surface in a selected captured image of said at least one captured image based on an image property of the occluding object and the detection image; |
| 12.6 | overlaying (205) a non-occluded portion of the overlay surface in the selected captured image with the overlay image, by overlaying the moving image displayed on the display device in the real world with the overlay image in the selected captured image. |

Claim 12 - Revised Amendment 1 (conditional)

| Feature | Text |
| --- | --- |
| 12 | A method of digitally overlaying an image with another image, |
| 12.1 | comprising creating (200) a model of a real world space, |
| 12.1.1 | wherein the model includes an overlay surface to be overlaid with an overlay image, |
| 12.1.1.1 | wherein the overlay surface in the model represents a display device in the real world, |
| 12.1.1.2 | wherein the display device is a LED board configured to display a moving image on the display device in the real world by emitting radiation in one or more pre-determined frequency ranges; |
| 12.2 | identifying (201) camera parameters, which calibrate at least one camera with respect to coordinates of the model; |
| 12.3 | capturing (202) at least one image with respective said at least one camera substantially at the same time, said at least one captured image comprising a detection image, |
| 12.3.1 | wherein the camera used to capture the detection image is configured to detect radiation having a frequency outside all of the one or more predetermined frequency ranges and distinguish the detected radiation outside all of the one or more pre-determined frequency ranges from radiation inside the one or more pre-determined frequency ranges; |
| 12.4 | positioning (203) the overlay surface within said at least one captured image based on the model and the camera parameters; |
| 12.5 | detecting (204) an occluding object at least partially occluding the overlay surface in a selected captured image of said at least one captured image based on an image property of the occluding object and the detection image; |
| 12.6 | overlaying (205) a non-occluded portion of the overlay surface in the selected captured image with the overlay image, by overlaying the moving image displayed on the display device in the real world with the overlay image in the selected captured image; |
| 12.7 | wherein the LED screen has a uniform, monotone distribution as if it was not active on the captured detection image. |

Claim 12 - Revised Amendment 2 (conditional)

| Feature | Text |
| --- | --- |
| 12 | A method of digitally overlaying an image with another image, |
| 12.1 | comprising creating (200) a model of a real world space, |
| 12.1.1 | wherein the model includes an overlay surface to be overlaid with an overlay image, |
| 12.1.1.1 | wherein the overlay surface in the model represents a display device in the real world, |
| 12.1.1.2 | wherein the display device is a LED board configured to display a moving image on the display device in the real world by emitting radiation in one or more pre-determined frequency ranges; |
| 12.2 | identifying (201) camera parameters, which calibrate at least one camera with respect to coordinates of the model; |
| 12.3 | capturing (202) at least one image with respective said at least one camera substantially at the same time, said at least one captured image comprising a detection image, |
| 12.3.1 | wherein the camera used to capture the detection image is configured to detect radiation having a frequency outside all of the one or more predetermined frequency ranges and distinguish the detected radiation outside all of the one or more pre-determined frequency ranges from radiation inside the one or more pre-determined frequency ranges; |
| 12.4 | positioning (203) the overlay surface within said at least one captured image based on the model and the camera parameters; |
| 12.5 | detecting (204) an occluding object at least partially occluding the overlay surface in a selected captured image of said at least one captured image based on an image property of the occluding object and the detection image; |
| 12.6 | overlaying (205) a non-occluded portion of the overlay surface in the selected captured image with the overlay image, by overlaying the moving image displayed on the display device in the real world with the overlay image in the selected captured image; |
| 12.7 | wherein the LED screen has a uniform, monotone distribution as if it was not active on the captured detection image; and |
| 12.8 | the occluding object is still visible in the captured detection image. |

119. Claims 1 and 13 will also be relevant to the Promptu point, but I will explain that, and their role, when I come to it in due course. Claim 2 is not in issue as such, but is relied on by AIM in relation to claim interpretation.

Claim Scope

120. The legal principles that apply to “normal” claim interpretation are set out in Saab Seaeye v Atlas Elektronik [2017] EWCA Civ 2175 at [17]-[18], applying Virgin v Premium [2009] EWCA Civ 1062. No issue of equivalence arises in the present case.

Issue 1 - claim feature 12.5

121. Supponor says that this claim feature requires higher order processing. On that basis, the claim feature is not present in the SVB System. I do not think a categorical definition of “higher order” processing was put forward by Supponor or agreed by AIM, and indeed a range of expressions were used in the evidence and argument, but for the purposes of this judgment I can refer to what Prof Steed said and was relied on by Supponor: that an image property of the occluding object requires a descriptor that is a “property of the detection image whose presence can be associated specifically with the presence of an expected type of occluding object, and which can be identified by the detector and used to ascertain the size, shape and location of the area of pixels which correspond to the occluding object with the detection image.”

122. This requires some pre-existing overall appreciation of the occluding object’s likely appearance and does not extend to simply looking at one pixel at a time in isolation to see how bright or dark it is.

123. AIM on the other hand says that while the claim covers higher order processing, it is not so limited and also extends to an assessment of the brightness of individual pixels. The SVB System does have that.

124. However, AIM’s broad construction is in danger of going too far for it, because if the claim extends to identifying a dark occluding object (i.e. one not emitting or reflecting light in the relevant wavelengths) against a light background then Nevatie has that, and the Patent is obvious, subject to AIM’s amendments.

125. The words of central importance are “based on an image property of the occluding object”, but the feature has to be interpreted as a whole and indeed along with the other features of the claim. For reasons I will go on to explain, I think it is relevant to consider features 12.3, 12.3.1 and 12.5 as a whole, especially on the issue of whether the claim extends to dark-on-light.

126. The words are not terms of art or even very technical. They are ordinary words to be interpreted in context.

127. The main relevant context in the Patent is:

i) The section in [0013] at column 4 lines 10-19. This says that a vision model “may include a descriptor of the occluding objects”. Shape, colour and texture characteristics are mentioned. The passage also refers to “image characteristics”, which was not suggested by either side to be different from “image properties”.

ii) [0016] which says that “[t]he image property of the occluding object relates to a descriptor of a neighbourhood of a pixel”.

iii) [0019] which concerns detecting the occluding object by comparing a detection image with a previous detection image.

iv) [0021] which refers to stereo images being used, and contains the words “image property”, it being, in that instance, a disparity between the two stereo images.

v) The three sections from [0033] onwards:

a) The “Detection of the occluding object: Stereo image” section at [0033].

b) The “Detection of occluding objects using active boards” at [0034]-[0035]. This does not refer to “image properties” but does refer to “image” a number of times.

c) The “Detection of Occluding objects using spatial frequency differences” section at [0036] to [0043]. This also refers to “image properties” at [0037].

128. Although not included in claim 12, the word “descriptor” is mentioned in a number of places in the above passages. This does have a technical connotation going beyond its ordinary English meaning, as was set out in the ASCGK at [37], which I have quoted above in the section dealing with spatial frequency analysis.

129. This was relied on by Supponor for the sentence that “An important characteristic of spatial frequency descriptors is that they take into account the neighbourhood of the pixel, rather than just the pixel itself.”

130. Supponor’s argument for a narrow meaning had the following main elements:

i) [0016] teaches that the image property of the occluding object is a descriptor of a neighbourhood of a pixel.

ii) The CGK as to the use of “descriptor”.

iii) The three sections referred to above from [0033] onwards are very different, and it is only the third, relating to spatial frequency differences, that uses image properties and falls within claim 12.

131. Supponor’s position on the third point seemed a little bit changeable, because [0021] in the Patent uses the term “image property” in relation to the use of stereo images. However, it satisfied me in its closing submissions that stereo detection of the kind described does conventionally use a descriptor according to [37] of the CGK and does not work on a simple pixel by pixel basis. So, I think it was argued, the stereo approach could be said to use an “image property” while still being meaningfully distinct from the second approach based on an active board.

132. There was also a subsidiary dispute about whether Algorithm 3 at [0042] could work with “mere brightness” as opposed to using genuinely higher order processing. Based on Prof Steed’s evidence I find that using mere brightness would not be a meaningful way to proceed in the context of Mixture of Gaussians. Algorithms 1 and 2 both, it was not disputed, require reference to surrounding pixels and a model of expected characteristics.

133. Supponor also relied on [0019] which refers to identifying occluding objects by comparing images over time, and said that [0034] at column 10 lines 11-15 was referring to the same thing. As I understood it, the point was said to be that since recognising objects by changes between frames was conventional, as [0019] says, the reader would not think that what was being described at [0034] would be claimed. I do not accept this point in relation to what [0034] says. It is referring to differences between static background and moving foreground which will be reflected in their characteristics within individual images (for example in the event that the techniques of [0034] were not used on video, as Prof Steed accepted was possible). “Change” is loose wording, but the meaning is clear. Nor would the reader think that the totality of what was described in [0034] was conventional. In any event, this was a minor part of Supponor’s argument.

134. In my view, Supponor’s argument that “image property” is limited to higher order processing is wrong. “Image property” is a broad term and the skilled person’s first impression of the Patent would be that it was very general.

135. The skilled person would know from the CGK what a “descriptor” was, and that in general it could include a spatial frequency descriptor of the kind described in the ASCGK. The skilled person would see how this could be used in the Patent, from teaching including [0013], [0016] and the sections on stereo images and spatial frequency analysis.

136. However, that is not at all the same thing as the skilled person thinking that spatial frequency analysis/descriptors or higher order processing must be used. [0013] is extremely permissive, with the use of “For example” and “may”. [0016] looks more like a direction, but it is well capable of being understood as simply informative, saying that in the context under discussion the image property in fact concerns the neighbourhood of the pixel.

137. Importantly, claim 2 of the Patent is specifically limited to an image property that “relates to a descriptor of a neighbourhood of a pixel, wherein the descriptor comprises a spatial frequency …”; claim 1 just refers to detection based on an image property, as with claim 12. I appreciate of course that claim 1 is not defended over Nevatie, but the reader of the Patent would not know that and the fact that the narrow requirement contended for by Supponor is optional in the parallel situation of that claim family is still material to claim interpretation of claim 12.

138. I also reject the idea that the skilled person would think that the stereo image approach (possibly) and the spatial frequency approach were within claim 12 but the active boards approach was not. There would seem no reason for this. Certainly no technical reason was advanced. It would seem odd to the skilled person that that division was being made (if they thought about it, which I do not think they would), and still odder that, if such a division was to be made, it should be by using the words “image property”.

139. I have recognised above that the active boards section does not refer to “image property” whereas the phrase is used for the stereo image approach and the spatial frequency approach. I do not think there is anything to this. It is a very semantic point. “Image property” just means a property of an image, and [0034] refers to “image” multiple times while discussing the characteristics of what is “captured” by the set-up in terms of frequency etc.

140. Finally, Counsel for Supponor argued that if feature 12.5 meant what AIM said then it was redundant and added nothing to feature 12.3.1, which he described as requiring a “frequency-selective camera”. I do not accept this submission. Feature 12.3.1 does indeed specify the camera and I think “frequency-selective” is a fair paraphrase. Feature 12.5 however specifies the processing and so relates to something different. It is true that in a mere brightness active board set-up the processing will be very simple given what the camera does, but that does not make feature 12.5 redundant.

141. So I reach the conclusion that “image property” is broad, as AIM contends. I move on to consider the dark-on-light aspect. Not without some hesitation, I have concluded that AIM is correct on this too. My main reasons are as follows:

i) It is not relevant that rejecting AIM’s argument would run into Nevatie. The skilled person would not have that in mind.

ii) It is a point against AIM that its drive on the first aspect of feature 12.5 was that a broad meaning was intended.

iii) However, both sides agreed that the teaching of the Patent was about processing radiation from the occluding object. That is a consistent thrust of its teaching, common to the fairly general discussion at [0013] to [0021] and the three more specific sections from [0033]ff.

iv) Conversely, there is no teaching about using the absence of radiation from the occluding object.

v) Although I have said that “image property” has a broad meaning, the context also includes “detecting” an occluding object. I do not think it would be a natural use of language to say that something is being “detected” when it cannot be seen at all.

vi) This is fortified by the way that feature 12.3.1 is written concerning the camera. It is to detect radiation outside the one or more predetermined frequency ranges, i.e. not radiation in the range emitted by the display device.

vii) In a dark-on-light situation one would naturally say that the presence of the occluding object was inferred but one would not say that it was detected. This is perhaps just another way of looking at the points above.

142. On this basis, AIM does not need to amend the Patent to avoid dark-on-light being within the claims.

Issue 2 - claim feature 12.3

143. Supponor contends that feature 12.3 is to be interpreted to require one and only one detection image. This is the last vestige of a much wider argument that it was running at the start of the trial that claim 12 is an exact recipe that allows no additions in any respect, which could have supported a non-infringement argument based on the SVB System having the two cameras and its processing approach.

144. That wider argument was always going to be very difficult given the “comprising” language in the opening words of the claim (which conventionally means including but not limited to) so it is no surprise that it is not pursued in its whole breadth.

145. Supponor bases its argument on feature 12.3 firstly on the fact that claim 12 repeatedly uses the words “at least one” in relation to the captured image - see integers 12.3, 12.4 and 12.5 - but does not use the same words in relation to the detection image.

146. Supponor also relies on the fact that integer 12.3 requires capturing the “at least one image”, with the “at least one camera”, “substantially at the same time”.

147. This is inelegant language but in context it is clearly trying to cater for the possibility of more than one captured image, with them all having to be captured at the same time.

148. Indeed, it is not just the “at the same time” language that is inelegant; the whole of the definition of the captured images and their relation to detection images is ugly and rather messy. But it is not especially hard to understand in context, and Supponor did not suggest that it was unclear what the captured image or the detection image was: the captured image is the picture taken by the camera (or one by each camera) which will be fit for use (usually by broadcast) after it has had the occlusion of the overlay surface dealt with, if necessary. The detection image is a product of the processing to achieve the desired result of detecting the occluding object (if there is one).

149. I do not think Supponor had any purposive basis for its approach.

150. Supponor accepted, as it had to, that there can be multiple cameras and each of them can capture an image, in particular at the same time. Integer 12.3 says in terms that (each) “at least one” captured image comprises a detection image. There is no linguistic hook on which to hang an argument that the claim only allows one detection image in the overall method. It would in fact seem very odd if the claim could be avoided because of the use of more complex processing that in some way processed the captured image in stages and created multiple detection images.

151. To try to make sense of this, Counsel for Supponor argued that in a “normal minimal implementation” of the invention of claim 12 there would be two captured images, one of which is used for overlaying and the other of which “forms” the detection image. This involves illegitimately reading the claim down by reference to an idealised single normal implementation.

152. In short, the claim uses deliberately open and non-limiting language in respects relevant to this point. It could be better written but there is nothing in its language to support Supponor’s narrow reading and no purposive support either.

Infringement

153. There is no material dispute about how Supponor’s SVB System works (as I said in the Introduction above, there are multiple versions but the differences do not matter). It is rather fiddly to understand, however, and the verbal description in the PPD is not easy to follow. Supponor made an effort to depict it pictorially in its opening written submissions. This was the subject of complaint by AIM, which criticised the depiction as incomplete in its closing written submissions.

154. I agree that Supponor’s representation did not cover all that was in the PPD; it was not intended to, because it was meant to make matters comprehensible for me. I did not think it was unfair and I found it helpful. So I will use it here. My use of it is to make this judgment accessible to the reader and does not displace the PPD, which remains the definitive account.

155. The diagram has three main parts, shown by the use of three colours.

156. The blue part, shown in the middle, depicts the system’s ability to assess which pixels in an image being processed correspond to where the display board is expected to be. Only those pixels are tested to see whether there is an occluding object. So the output of the blue part feeds into both the other two parts.

157. The system has two IR channels.

158. The black part, uppermost, shows the processing of IR Channel 1. This happens all the time, and in IR Channel 1 the billboard is arranged to be “bright”. The detection algorithm processes each pixel expected to correspond to the position of the board, and compares its brightness to a threshold. If it is “dim” by this measure that indicates an occluding object blocking the IR light from the board which would otherwise have made the pixel “bright”.

159. Supponor says that the black part corresponds at the relevant level of generality to Nevatie Figure 3. I agree and I do not think AIM disputed this.

160. The green part, lowermost, does not work all the time. It is intended to be put into operation when the ambient light is relatively bright, as denoted by the open switch going into the “OR” gate on the right hand side of the figure. It uses IR Channel 2, in which the board appears “dark”.

161. In the green processing, for each pixel expected to correspond to the position of the board, a ratio is assessed of the brightness in IR Channel 1 to the brightness in IR Channel 2. If the ratio is low (i.e. the brightnesses are close to each other) then that indicates an occluding object. This is not completely intuitive, but can be understood by looking at the small diagrams at the top left and bottom left of the diagram that show a stylised silhouette of a football player. Pixels for non-occluded parts of the board have very different brightnesses so the ratio will be large, whereas pixels for the parts of the board occluded by the player have similar brightnesses and the ratio will be small.

162. This pixel-by-pixel processing allows the creation of a mask for overlaying, which is shown just above the OR gate on the left hand side. When only the IR Channel 1 (black) processing is used, each pixel’s status depends on that alone. When the IR Channel 2 processing is also in use, a pixel within the expected bounds of the board is considered to correspond to an occluding object when either the IR Channel 1 threshold or the IR Channel 2 ratio so indicates; hence the OR gate.
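By way of illustration only, the per-pixel logic described in paragraphs 156 to 162 above can be sketched as follows. This is a minimal sketch and not Supponor's code or the PPD: the function name `occlusion_mask`, its parameters, the representation of images as nested lists of brightness values, and all numerical values are assumptions made for the example. The ratio test is written as a multiplication so that a zero brightness in IR Channel 2 does not cause a division error, which is likewise an assumption.

```python
def occlusion_mask(ir1, ir2, board, ir1_threshold, ratio_threshold, use_channel2):
    """Return a per-pixel mask: True where an occluding object is indicated.

    ir1, ir2  -- brightness values for IR Channel 1 and IR Channel 2 (hypothetical layout)
    board     -- True where the pixel is expected to show the board (the "blue" part)
    use_channel2 -- whether the IR Channel 2 ("green") processing is switched in
    """
    mask = []
    for row1, row2, board_row in zip(ir1, ir2, board):
        mask_row = []
        for b1, b2, on_board in zip(row1, row2, board_row):
            if not on_board:
                # Only pixels expected to correspond to the board are tested.
                mask_row.append(False)
                continue
            # "Black" part: the board is bright in Channel 1, so a dim pixel
            # indicates something blocking the board.
            dim_in_ch1 = b1 < ir1_threshold
            # "Green" part: a low Channel 1 / Channel 2 ratio indicates similar
            # brightness in both channels. Written as b1 < k * b2 (assumption)
            # to avoid dividing by zero.
            low_ratio = use_channel2 and b1 < ratio_threshold * b2
            # The OR gate: either test is enough.
            mask_row.append(dim_in_ch1 or low_ratio)
        mask.append(mask_row)
    return mask


# Hypothetical values: an unoccluded board pixel, a dim occluded pixel,
# a brightly lit occluded pixel, and a pixel outside the board.
ir1 = [[220, 30, 200, 200]]
ir2 = [[20, 28, 190, 190]]
board = [[True, True, True, False]]

channel1_only = occlusion_mask(ir1, ir2, board, 100, 2.0, use_channel2=False)
both_channels = occlusion_mask(ir1, ir2, board, 100, 2.0, use_channel2=True)
print(channel1_only)
print(both_channels)
```

On these assumed values, the third pixel is not dim enough to fail the IR Channel 1 threshold, but its two channel brightnesses are close to one another, so it is marked as occluded only when the IR Channel 2 processing is switched in.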

163. AIM’s infringement case is based on the IR Channel 2 (green) processing.

164. Supponor made the point that the IR Channel 1 processing is always in operation. This is true. AIM responded that there will be circumstances in which the IR Channel 2 processing “dominates” because the ambient light is very bright and so the reflections from an occluding object are not dim enough for it to be recognised as such; in such a situation the ratio test on IR Channel 2 will still give the “right” answer. I am not in a position to determine how likely or common that is, and I do not need to do so to determine the infringement questions, but the set-up of the system makes clear that the IR Channel 2 processing can be decisive in assigning a pixel as being part of an occluding object when the IR Channel 1 processing would say that it was not.